Show code cell source

from faker import Faker

def generate_random_names(num_names):

fake = Faker()

names = [[fake.first_name(), fake.last_name()] for _ in range(num_names)]

return names

def create_table(names):

header = ["Number", "Name"]

table = []

table.append(header)

for idx, name in enumerate(names, start=1):

full_name = " ".join(name)

row = [idx, full_name]

table.append(row)

# Printing the table

for row in table:

print(f"{row[0]:<10} {row[1]:<30}")

# Generate 10 random names and call the function

random_names = generate_random_names(10)

create_table(random_names)

---------------------------------------------------------------------------

ModuleNotFoundError Traceback (most recent call last)

Cell In[1], line 1

----> 1 from faker import Faker

3 def generate_random_names(num_names):

4 fake = Faker()

ModuleNotFoundError: No module named 'faker'

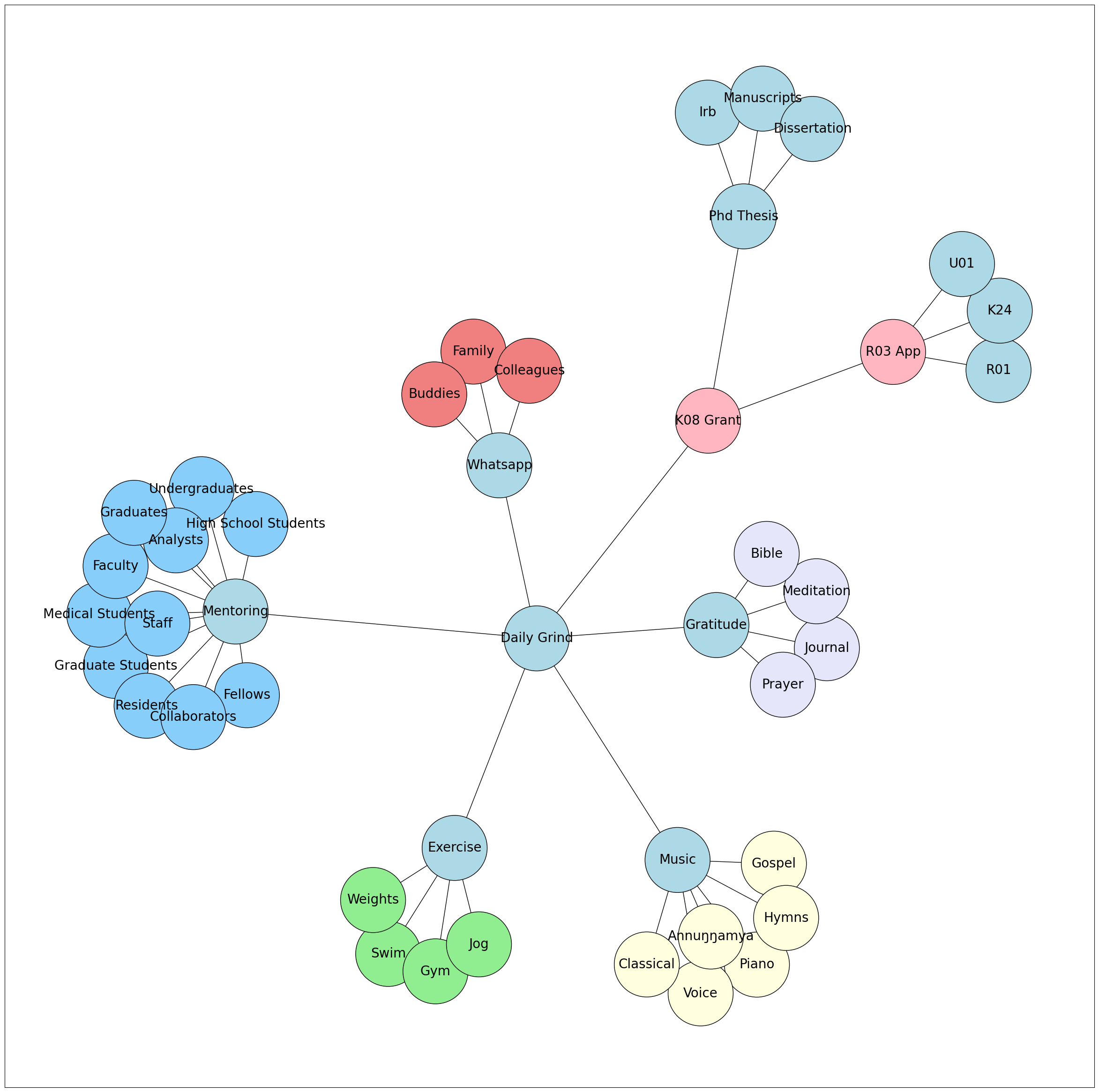

826. graçias 🙏#

beta version of the fenagas webapp is up & running

andrew & fawaz will be first to test it

consider rolling it out to faculty in department

philosophe, elliot, betsy, and with residents

827. fenagas#

let ai say about

fena“annuŋŋamya mu makubo ago butukirivu”ai can’t produce such nuance on its own

but, guided, it can

828. music#

take me to church on apple music

my fave playlist

savor it!

829. boards#

my scores are expired

i need to retake them

will be fun with ai

consider a timeline

and with fenagas,llc?

just might have bandwidth

but its the synthesis of the two

that will be the most fun

830. breakthru#

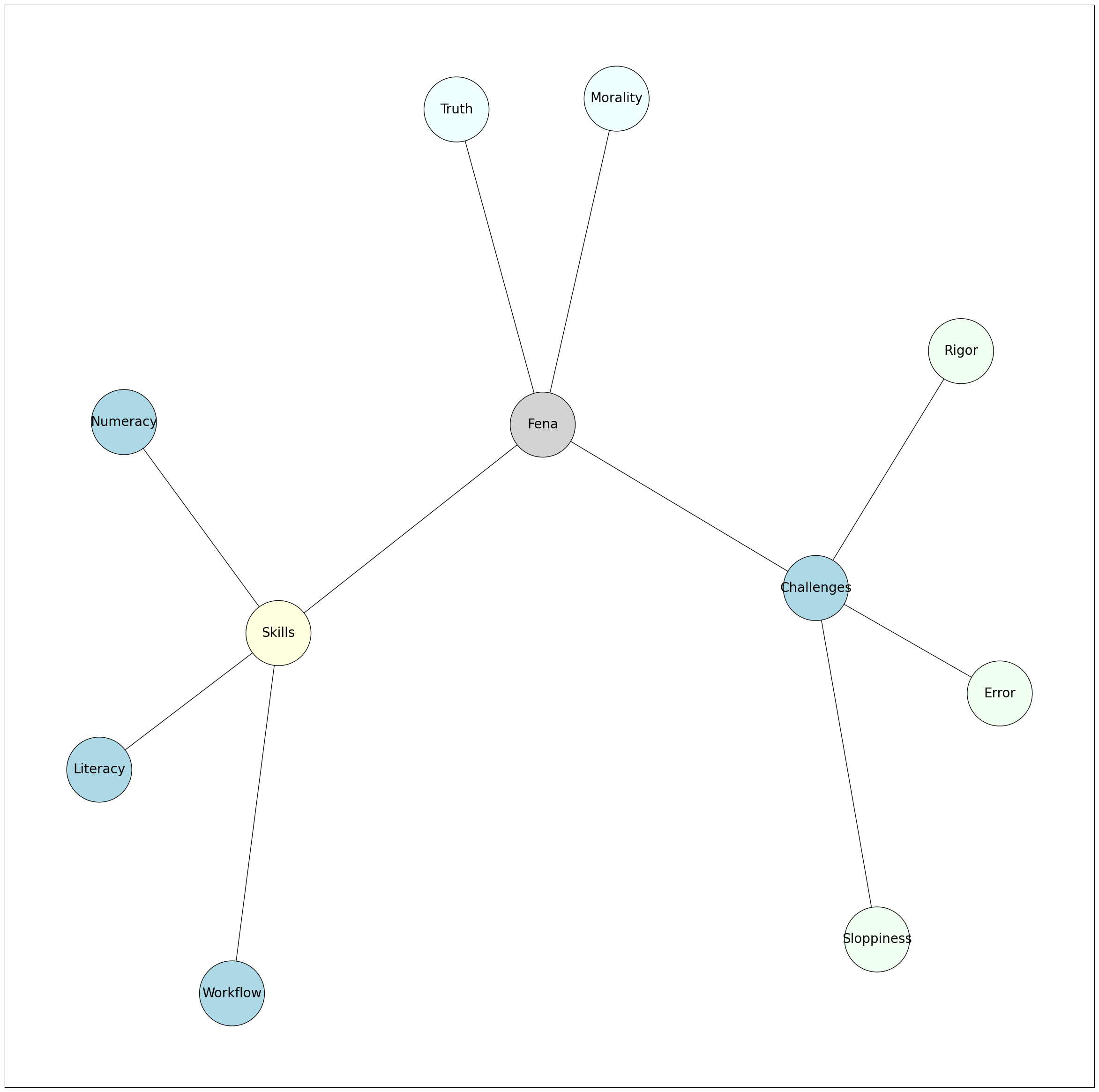

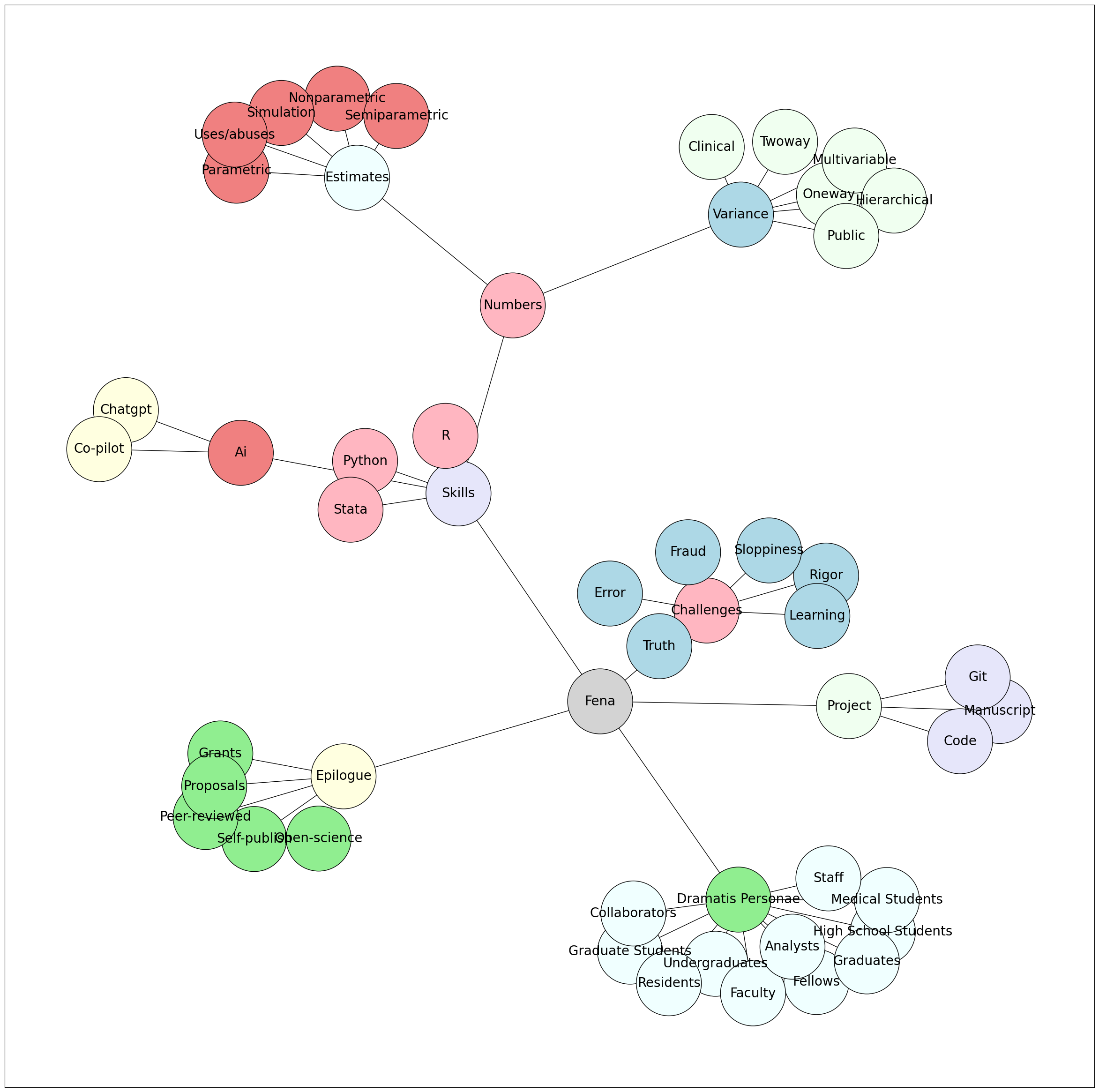

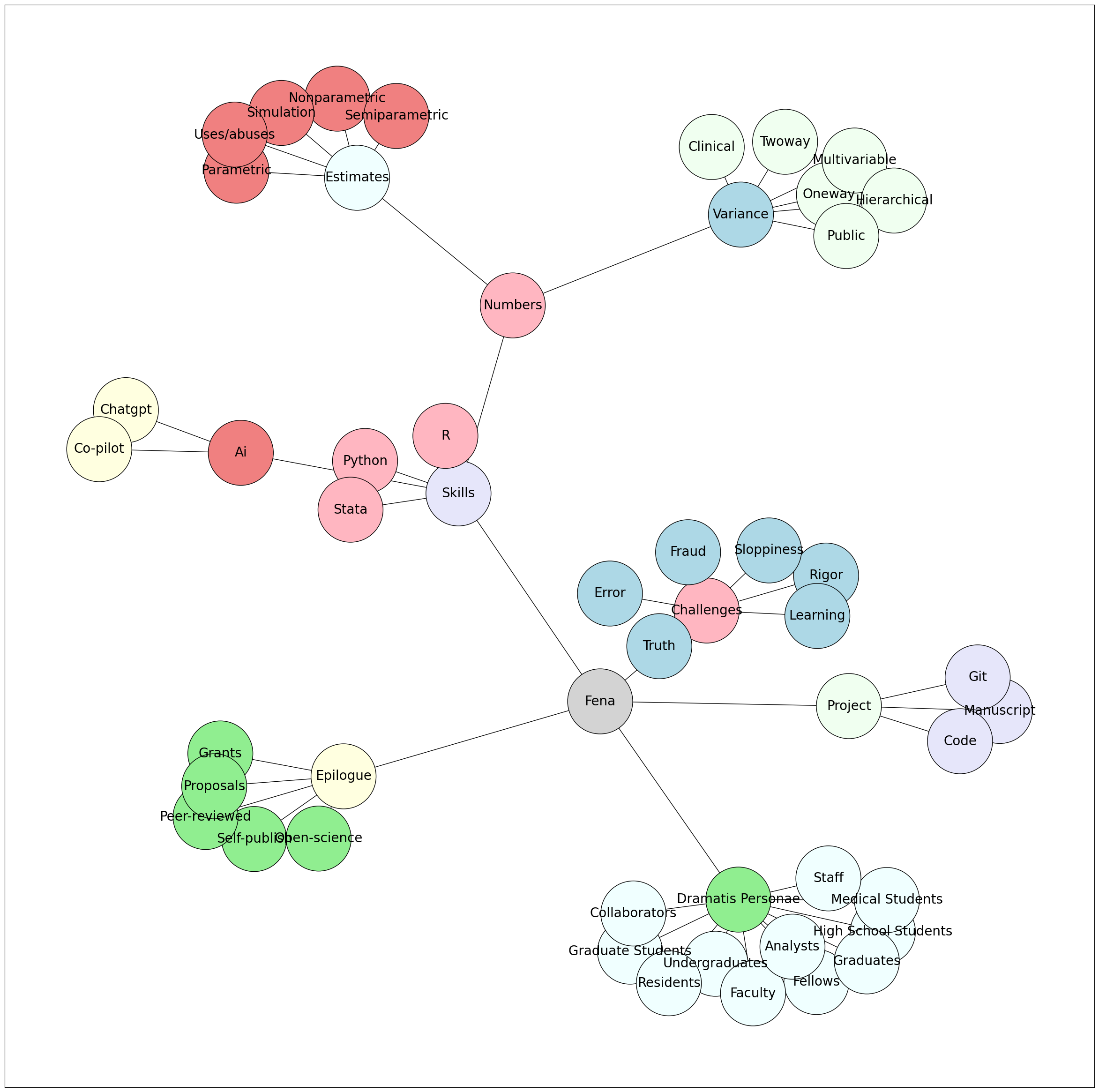

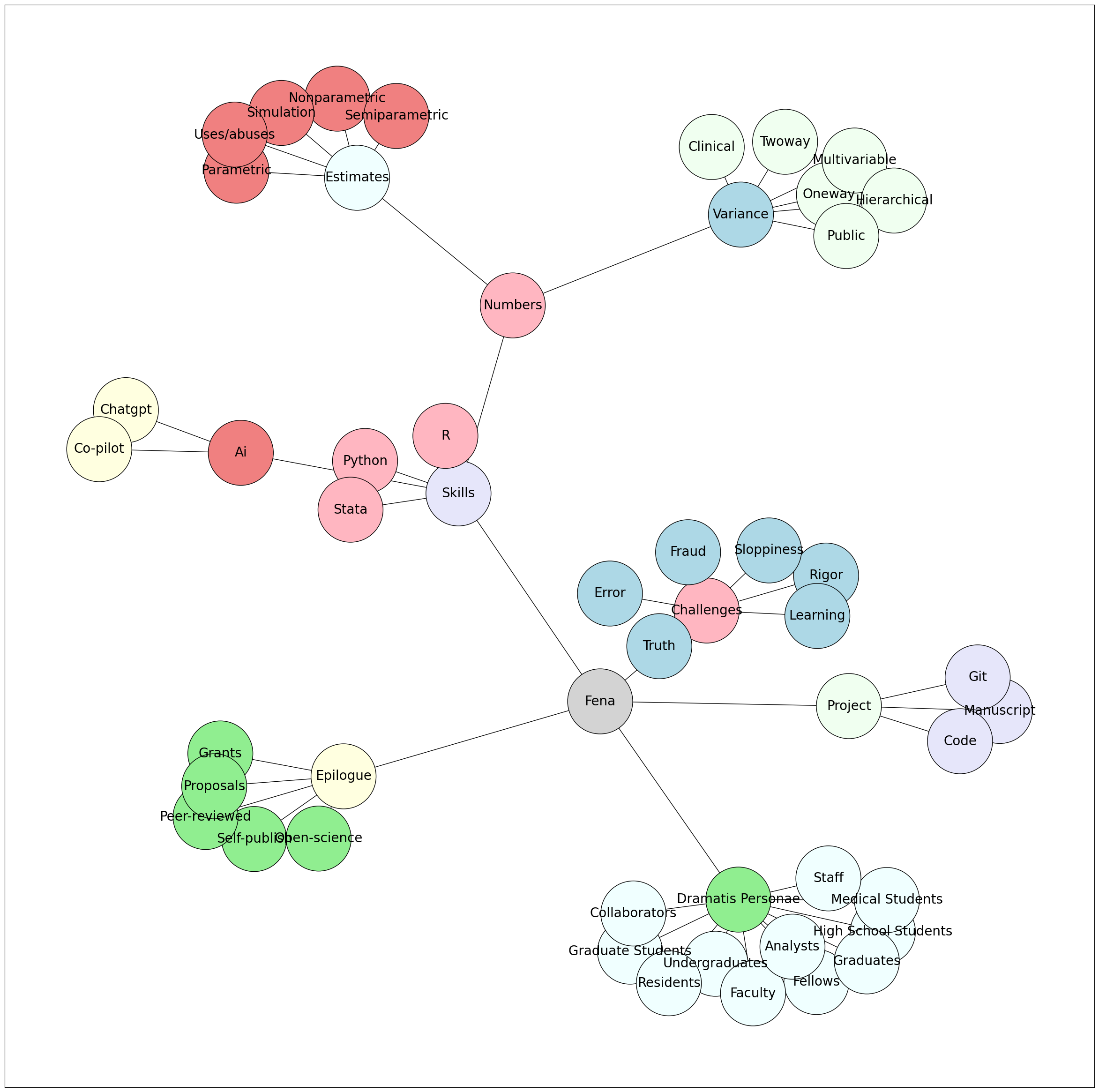

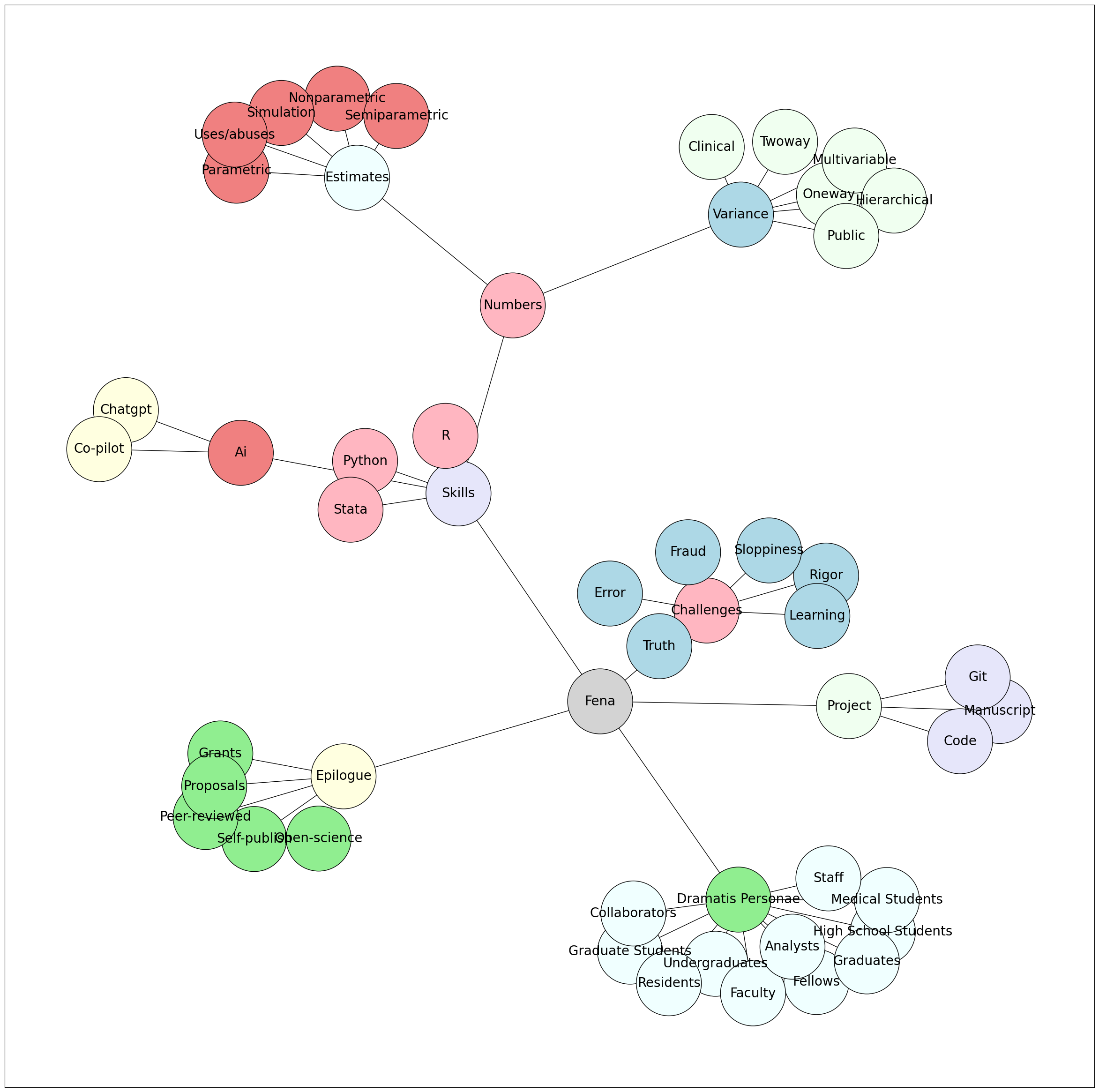

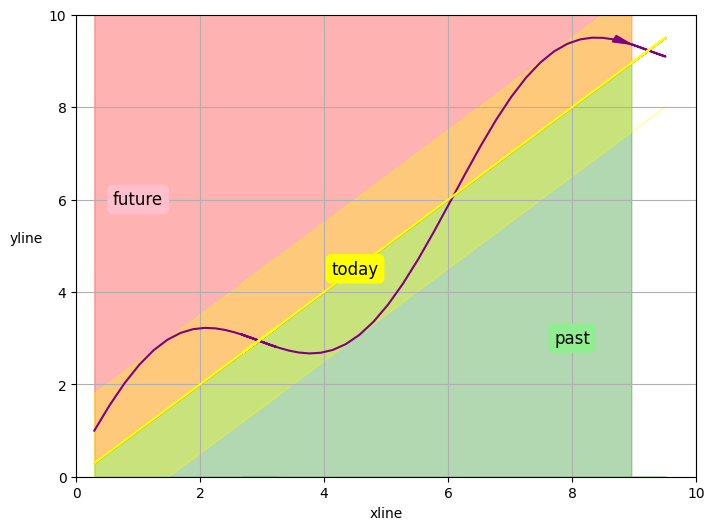

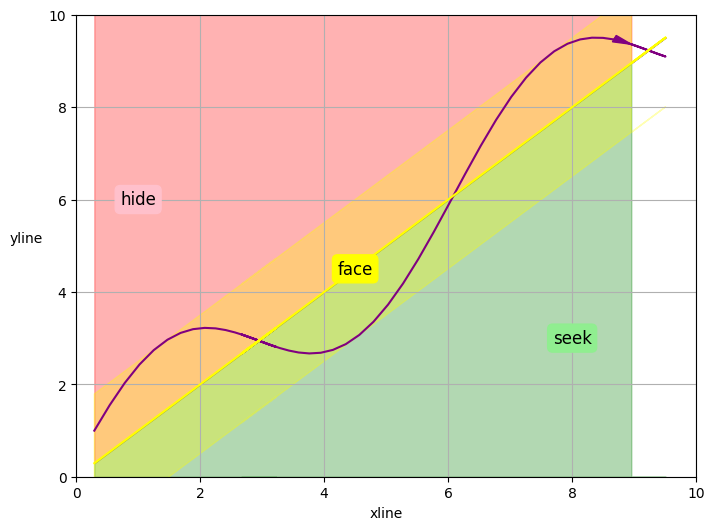

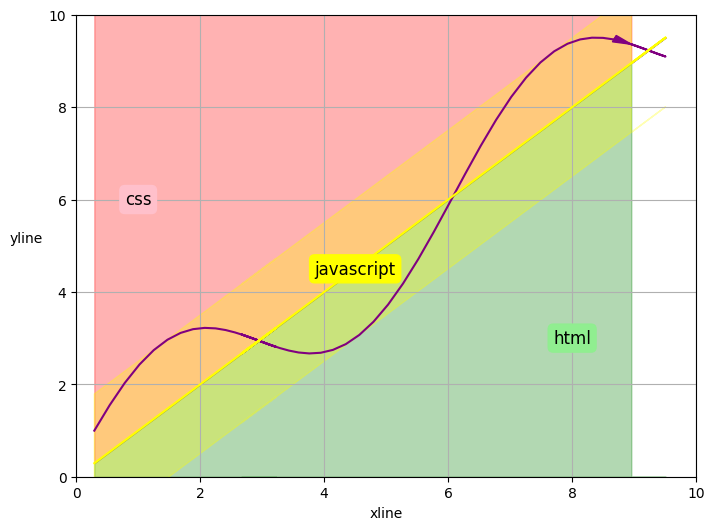

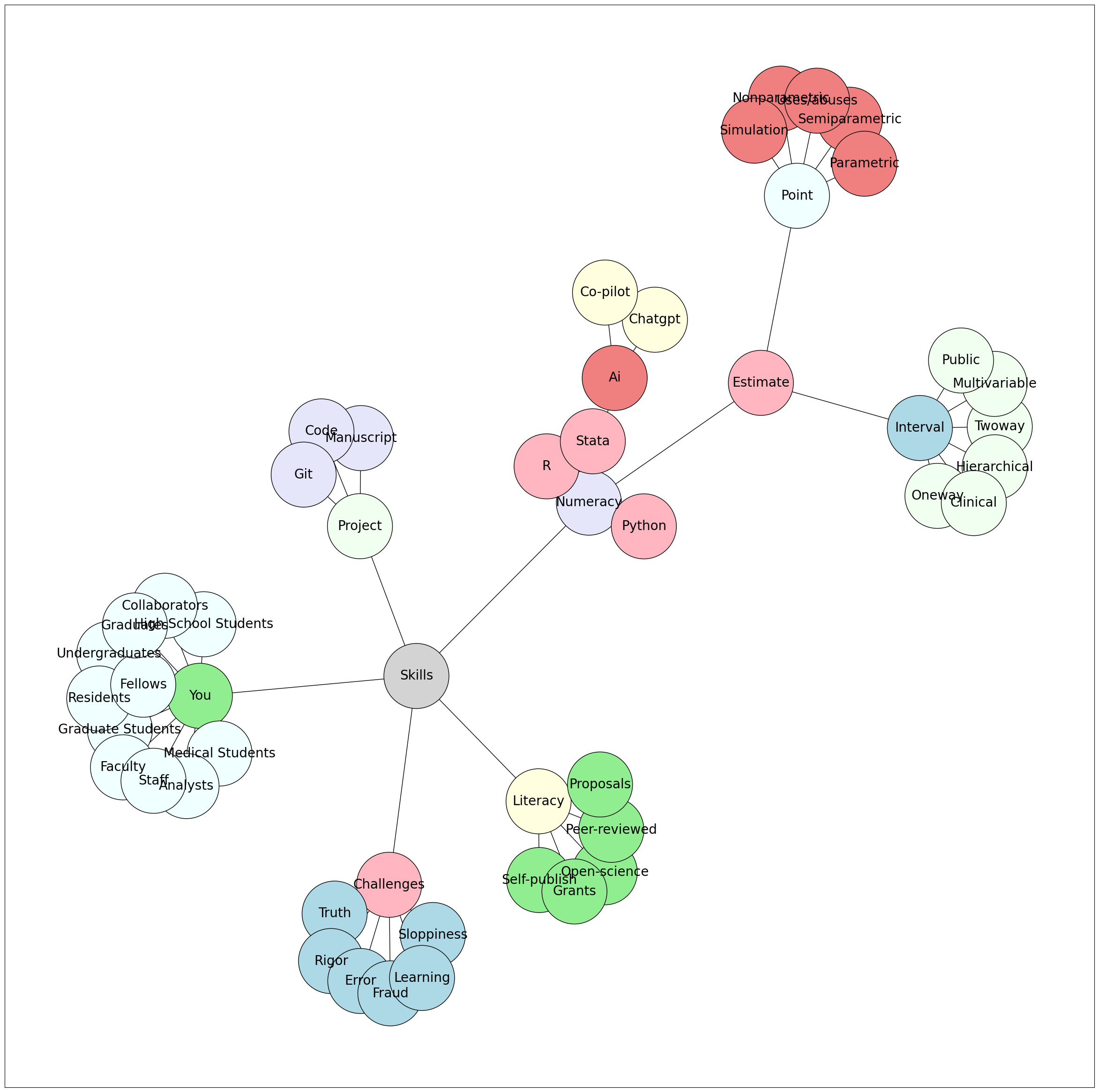

Act I: Hypothesis - Navigating the Realm of Ideas and Concepts

In Act I, we embark on a journey of exploration, where ideas take center stage. We delve into the realm of hypotheses and concepts, laying the foundation for our scientific inquiry. From conceiving research questions to formulating testable propositions, Act I serves as the starting point of our intellectual pursuit. Through manuscripts, code, and Git, we learn to articulate and organize our ideas effectively, setting the stage for robust investigations and insightful discoveries.

Act II: Data - Unveiling the Power of Information

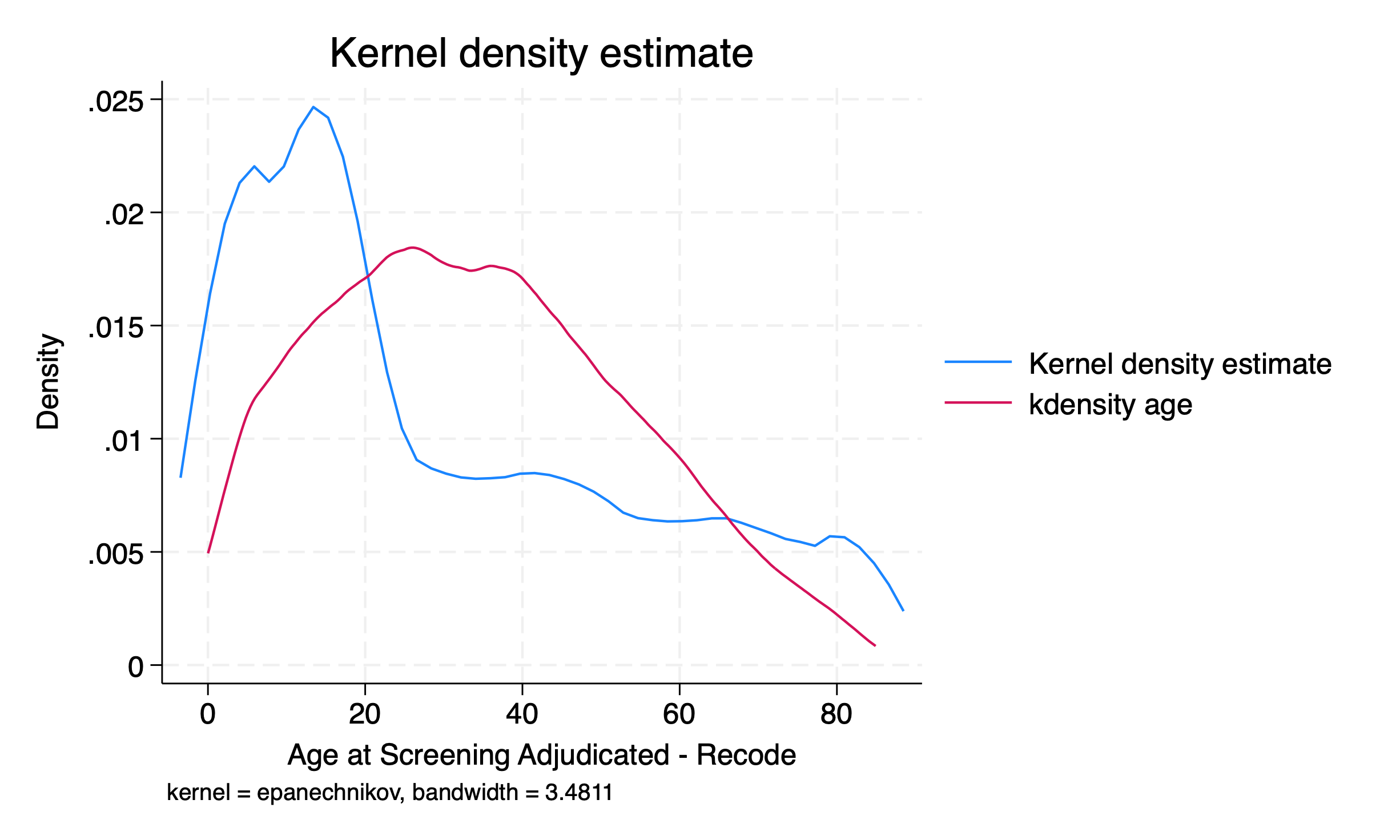

Act II unfolds as we dive into the realm of data, where raw information becomes the fuel for knowledge. Through the lenses of Python, AI, R, and Stata, we explore data collection, processing, and analysis. Act II empowers us to harness the potential of data and unleash its power in extracting meaningful insights. By mastering the tools to handle vast datasets and uncover patterns, Act II equips us to bridge the gap between theoretical hypotheses and empirical evidence.

Act III: Estimates - Seeking Truth through Inference

In Act III, we venture into the world of estimates, where statistical methods guide us in drawing meaningful conclusions. Nonparametric, semiparametric, parametric, and simulation techniques become our allies in the quest for truth. Act III enables us to infer population characteristics from sample data, making informed decisions and drawing reliable generalizations. Understanding the nuances of estimation empowers us to extract valuable information from limited observations, transforming data into actionable knowledge.

Act IV: Variance - Grappling with Uncertainty

Act IV brings us face to face with variance, where uncertainty and variability loom large. In the pursuit of truth, we encounter truth, rigor, error, sloppiness, and the unsettling specter of fraud. Act IV teaches us to navigate the intricacies of uncertainty, recognize the sources of variation, and identify potential pitfalls. By embracing variance, we fortify our methodologies, enhance the rigor of our analyses, and guard against errors and biases that may distort our findings.

Act V: Explanation - Illuminating the “Why” behind the “What”

Act V marks the pinnacle of our journey, where we seek to unravel the mysteries behind observed phenomena. Oneway, Twoway, Multivariable, Hierarchical, Clinical, and Public perspectives converge in a quest for understanding. Act V unfolds the rich tapestry of explanations, exploring causal relationships, uncovering hidden connections, and interpreting complex findings. By delving into the intricacies of explanation, Act V empowers us to communicate our discoveries, inspire new research avenues, and drive positive change in our scientific pursuits.

Epilogue: Embracing the Journey of Knowledge

In the Epilogue, we reflect on our expedition through Fenagas, celebrating the richness of knowledge and the evolution of our understanding. Open Science, Self-publishing, Published works, Grants, Proposals, and the interconnected world of Git & Spoke symbolize the culmination of our endeavors. Epilogue serves as a reminder of the ever-growing landscape of learning and the profound impact our contributions can have. Embracing the spirit of curiosity, we step forward, armed with newfound wisdom, to navigate the boundless seas of knowledge and ignite the flame of discovery in ourselves and others.

831. fenagas#

each paper, manuscript, or project should have its own set of repos

these will necessarily include a mixture of private and public repos

private repos will be used for collaboration

the public repos will be used for publication

fenagas is a private company and recruitor

so it will have its own set of repos as well

but the science and research will have its own repos

832. jerktaco#

oxtail

jerk chicken

sweetchilli-fried whole jerk snapper. is that a thing? quick google says yes.

833. eddie#

Kadi and Mark…

The square root of the number of employees you employ will do most of the work…

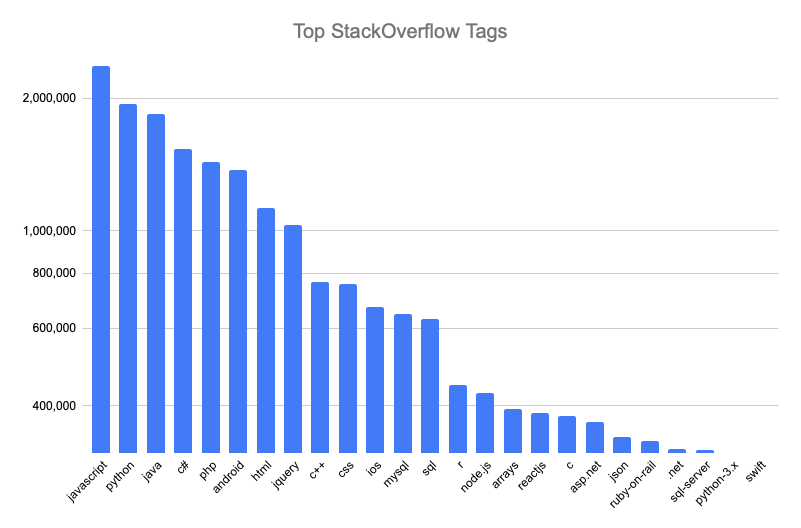

5 classical composers created 95% of the classical music that’s played

and yet if you look at their music, only 5% of their music is what’s played 95% of the time”….

Debate

08/02/2023#

834. fena#

fawaz initally mistook persian and urdu for arabic

and read them out but said they made no sense

then recognized the “middle one” as arabic

with the meaning that is intended

but probably no idiomatic

Show code cell source

data = [

("Eno yaffe ffena.", "Luganda", "Our and by us."),

("Nuestro y por nosotros", "Spanish", "Ours and by us"),

("Le nôtre et par nous", "French", "Ours and by us"),

("Unser und von uns", "German", "Ours and by us"),

("Nostro e da noi", "Italian", "Ours and by us"),

("Nosso e por nós", "Portuguese", "Ours and by us"),

("Ons en door ons", "Dutch", "Ours and by us"),

("Наш и нами", "Russian", "Ours and by us"),

("我们的,由我们提供", "Chinese", "Ours and by us"),

("हमारा और हमसे", "Nepali", "Ours and by us"),

("نا و توسط ما", "Persian", "Ours and by us"),

("私たちのものであり、私たちによって", "Japanese", "Ours and by us"),

("لنا وبواسطتنا", "Arabic", "Ours and by us"),

("שלנו ועל ידינו", "Hebrew", "Ours and by us"),

("Yetu na kwa sisi", "Swahili", "Ours and by us"),

("Yetu futhi ngathi sisi", "Zulu", "Ours and like us"),

("Tiwa ni aṣẹ ati nipa wa", "Yoruba", "Ours and through us"),

("A ka na anyi", "Igbo", "Ours and by us"),

("Korean", "Korean", "Ours and by us"),

("Meidän ja meidän toimesta", "Finnish", "Ours and by us"),

("ኦህድዎና በእኛ", "Amharic", "Ours and by us"),

("Hinqabu fi hinqabu jechuun", "Oromo", "Ours and through us"),

("ምንም ነገርና እኛ በእኛ", "Tigrinya", "Nothing and by us"),

("हमारा और हमसे", "Marathi", "Ours and by us"),

("અમારા અને અમારા દ્વારા", "Gujarati", "Ours and by us"),

("ما و توسط ما", "Urdu", "Ours and by us"),

("우리 것이며, 우리에 의해", "Korean", "Ours and by us"), # New row for Korean

]

def print_table(data):

print(" {:<4} {:<25} {:<15} {:<25} ".format("No.", "Phrase", "Language", "English Translation"))

print("" + "-" * 6 + "" + "-" * 32 + "" + "-" * 17 + "" + "-" * 27 + "")

for idx, (phrase, language, translation) in enumerate(data, 1):

print(" {:<4} {:<25} {:<15} {:<25} ".format(idx, phrase, language, translation))

print_table(data)

No. Phrase Language English Translation

----------------------------------------------------------------------------------

1 Eno yaffe ffena. Luganda Our and by us.

2 Nuestro y por nosotros Spanish Ours and by us

3 Le nôtre et par nous French Ours and by us

4 Unser und von uns German Ours and by us

5 Nostro e da noi Italian Ours and by us

6 Nosso e por nós Portuguese Ours and by us

7 Ons en door ons Dutch Ours and by us

8 Наш и нами Russian Ours and by us

9 我们的,由我们提供 Chinese Ours and by us

10 हमारा और हमसे Nepali Ours and by us

11 نا و توسط ما Persian Ours and by us

12 私たちのものであり、私たちによって Japanese Ours and by us

13 لنا وبواسطتنا Arabic Ours and by us

14 שלנו ועל ידינו Hebrew Ours and by us

15 Yetu na kwa sisi Swahili Ours and by us

16 Yetu futhi ngathi sisi Zulu Ours and like us

17 Tiwa ni aṣẹ ati nipa wa Yoruba Ours and through us

18 A ka na anyi Igbo Ours and by us

19 Korean Korean Ours and by us

20 Meidän ja meidän toimesta Finnish Ours and by us

21 ኦህድዎና በእኛ Amharic Ours and by us

22 Hinqabu fi hinqabu jechuun Oromo Ours and through us

23 ምንም ነገርና እኛ በእኛ Tigrinya Nothing and by us

24 हमारा और हमसे Marathi Ours and by us

25 અમારા અને અમારા દ્વારા Gujarati Ours and by us

26 ما و توسط ما Urdu Ours and by us

27 우리 것이며, 우리에 의해 Korean Ours and by us

835. kind#

The statement you’ve provided suggests that someone who enjoys being surrounded by like-minded individuals (kindred spirits) may not have the energy or drive to compete or challenge themselves against strong adversaries or opponents. This seems to express a perspective on complacency, comfort zones, and personal growth.

However, it’s essential to understand that people have different paths to personal growth and self-fulfillment. While some thrive on challenge and adversity, finding it energizing and invigorating, others might find equal growth in collaboration, mutual understanding, and shared passions.

Realism, as the statement concludes, might refer to understanding and accepting these differences in human nature and personal paths, acknowledging that everyone has their unique journey and definition of success.

836. adversarial networks#

GANs pit two neural networks against each other in a kind of game, where one network (the generator) tries to create fake data that looks real, while the other (the discriminator) tries to tell real from fake.

Supervised Learning, \(Y\): Algorithm is trained on labeled data.

Unsupervised Learning, \(X\): Algorithm is trained on unlabeled data and looks for patterns.

Semi-Supervised Learning, \(\beta\): Uses both labeled and unlabeled data for training.

Reinforcement Learning, \(\epsilon\): Algorithm learns by interacting with an environment and receiving feedback in the form of rewards or penalties.

Transfer Learning, \(z\): Using knowledge gained from one task to aid performance on a related, but different task.

Generative Adversarial Networks, \(\rho\): A subset of unsupervised learning where two networks are trained together in a competitive fashion.

Show code cell source

import pandas as pd

data = {

"Type of ML": ["Supervised", "Unsupervised", "Semi-Supervised", "Reinforcement", "Transfer", "GANs"],

"Pros": [

"Direct feedback, High accuracy with enough data",

"Works with unlabeled data, Can uncover hidden patterns",

"Leverages large amounts of unlabeled data",

"Adapts to dynamic environments, Potential for real-time learning",

"Saves training time, Can leverage pre-trained models",

"Generates new data, Can achieve impressive realism"

],

"Cons": [

"Needs labeled data, Can overfit",

"No feedback, Harder to verify results",

"Needs some labeled data, Combines challenges of both supervised and unsupervised",

"Requires careful reward design, Can be computationally expensive",

"Not always straightforward, Domain differences can be an issue",

"Training can be unstable, May require lots of data and time"

]

}

df = pd.DataFrame(data)

for index, row in df.iterrows():

print(f"Type of ML: {row['Type of ML']}\nPros: {row['Pros']}\nCons: {row['Cons']}\n{'-'*40}")

Type of ML: Supervised

Pros: Direct feedback, High accuracy with enough data

Cons: Needs labeled data, Can overfit

----------------------------------------

Type of ML: Unsupervised

Pros: Works with unlabeled data, Can uncover hidden patterns

Cons: No feedback, Harder to verify results

----------------------------------------

Type of ML: Semi-Supervised

Pros: Leverages large amounts of unlabeled data

Cons: Needs some labeled data, Combines challenges of both supervised and unsupervised

----------------------------------------

Type of ML: Reinforcement

Pros: Adapts to dynamic environments, Potential for real-time learning

Cons: Requires careful reward design, Can be computationally expensive

----------------------------------------

Type of ML: Transfer

Pros: Saves training time, Can leverage pre-trained models

Cons: Not always straightforward, Domain differences can be an issue

----------------------------------------

Type of ML: GANs

Pros: Generates new data, Can achieve impressive realism

Cons: Training can be unstable, May require lots of data and time

----------------------------------------

837. mbappé#

In the world of machine learning, there’s an architecture called Generative Adversarial Networks (GANs). A GAN consists of two neural networks: a generator and a discriminator. The generator creates fake data, while the discriminator evaluates data to determine if it’s real or generated by the generator. These networks are “adversaries”, and they improve through their competition with one another.

Mbappé in Ligue 1 is like the generator in a GAN:

Competitiveness (Lack of a Worthy Adversary): If the discriminator is too weak (akin to the other Ligue 1 teams compared to PSG), then the generator might produce data (or performance) that seems impressive in its context, but might not be as refined as it would be if it faced a stronger discriminator. Just as the EPL could serve as a more challenging discriminator for Mbappé, making him fine-tune his “generation” of skills, a stronger discriminator in a GAN forces the generator to produce higher-quality data.

Exposure to Challenges: If Mbappé were in the EPL (a stronger discriminator), he’d face more frequent and varied challenges, pushing him to adapt and refine his skills, much like a generator improving its data generation when pitted against a robust discriminator.

Star Power & Champions League: Just as Mbappé gets to face high-level competition in the Champions League and play alongside top talents in PSG, a generator can still produce high-quality data when trained with superior techniques or in combination with other skilled “networks”, even if its regular discriminator isn’t top-tier.

Future Moves & Evolution: Over time, a GAN might be fine-tuned or paired with stronger discriminators. Similarly, Mbappé might move to a more competitive league in the future, facing “stronger discriminators” that challenge and refine his game further.

In essence, for optimal growth and refinement, both a soccer player and a GAN benefit from being challenged regularly by worthy adversaries. PSG dominating Ligue 1 without a consistent worthy adversary might not push them to their absolute limits, just as a generator won’t produce its best possible data without a strong discriminator to challenge it.

838. lyrical#

kyrie eleison

lord deliver me

this is my exodus

He leads me beside still waters

He restoreth my soul

When you become a believer

Your spirit is made right

And sometimes, the soul doesn't get to notice

It has a hole in it

Due to things that's happened in the past

Hurt, abuse, molestation

But we wanna speak to you today and tell you

That God wants to heal the hole in your soul

Some people's actions are not because their spirit is wrong

But it's because the past has left a hole in their soul

May this wisdom help you get over your past

And remind you that God wants to heal the hole in your soul

I have my sister Le'Andria here

She's gonna help me share this wisdom

And tell this story

Lord

Deliver me, yeah

'Cause all I seem to do is hurt me

Hurt me, yeah

Lord

Deliver me

'Cause all I seem to do is hurt me

(Yes, sir)

Hurt me, yeah, yeah

(I know we should be finishing but)

(Sing it for me two more times)

Lord

Deliver me, yeah

'Cause all I seem to do is hurt me

(Na-ha)

Hurt me

(One more time)

Yeah

Lord

(Oh)

Deliver me

'Cause all I seem to do is hurt me, yeah

Hurt me, yeah

Whoa, yeah

And my background said

(Whoa-whoa, Lord)

Oh yeah (deliver me)

God rescued me from myself, from my overthinking

If you're listening out there

Just repeat after me if you're struggling with your past

And say it

(Oh, Lord, oh)

Let the Lord know, just say it, oh

(Oh, Lord, Lord)

He wants to restore your soul

He said

(Deliver me)

Hey

If my people, who are called by my name

Will move themselves and pray

(Deliver me)

Seek my face, turn from their wicked ways

I will hear from Heaven

Break it on down

So it is

It is so

Amen

Now when we pray

Wanna end that with a declaration, a decree

So I'm speaking for all of you listening

Starting here, starting now

The things that hurt you in the past won't control your future

Starting now, this is a new day

This is your exodus, you are officially released

Now sing it for me Le'Andria

Yeah

(This is my Exodus)

I'm saying goodbye

(This is my Exodus)

To the old me, yeah

(This is my Exodus)

Oh, oh, oh

(Thank you, Lord)

And I'm saying hello

(Thank you, Lord)

To the brand new me, yeah

(Thank you, Lord)

Yeah, yeah, yeah, yeah

This is

(This is my Exodus)

I declare it

(This is my Exodus)

And I decree

(This is my Exodus)

Oh this is, this day, this day is why I thank you, Lord

(This is my Exodus)

(Thank you, Lord)

Around

(Thank you, Lord)

For you and for me

(Thank you, Lord)

Yeah-hey-hey-yeah

Now, Lord God

(This is my Exodus)

Now, Lord God

(This is my Exodus)

It is my

(This is my Exodus)

The things that sent to break me down

(This is my Exodus)

Hey-hey-hey, hey-hey-hey, hey-hey-hey, hey-yeah

(Thank you, Lord)

(Thank you, Lord)

Every weapon

(Thank you, Lord)

God is you and to me, there for me

Source: Musixmatch

Songwriters: Donald Lawrence / Marshon Lewis / William James Stokes / Robert Woolridge / Desmond Davis

839. counterfeit#

In the context of competitive sports, the concept of “generating fakes” is indeed a fundamental aspect of gameplay. Athletes often use various techniques, such as dummies, side-steps, feints, or deceptive movements, to outwit their opponents and create opportunities for themselves or their teammates. These deceptive maneuvers act as the “generator” in the game, producing fake actions that challenge the opponent’s perception and decision-making.

Just like the generator in a GAN creates fake data to confuse the discriminator, athletes generate fake movements to deceive their opponents and gain an advantage. By presenting a range of possible actions, athletes keep their adversaries guessing and force them to make hasty decisions, potentially leading to mistakes or creating openings for an attack.

The effectiveness of generating fakes lies in the balance between unpredictability and precision. Just as a GAN’s generator must create data that is realistic enough to deceive the discriminator, athletes must execute their fakes with skill and timing to make them convincing and catch their opponents off guard.

Moreover, much like how the discriminator in a GAN becomes stronger by learning from previous encounters, athletes also improve their “discrimination” skills over time by facing various opponents with different playing styles and tactics. The experience of playing against worthy adversaries enhances an athlete’s ability to recognize and respond to deceptive movements, making them more refined in their decision-making and defensive actions.

In summary, generating fakes in competitive sports is a crucial aspect that parallels the dynamics of Generative Adversarial Networks. Just as a GAN benefits from facing a strong discriminator to refine its data generation, athletes grow and excel when regularly challenged by worthy adversaries who can test their ability to produce deceptive movements and refine their gameplay to the highest level.

840. music#

Composers in music, much like athletes in competitive sports and Generative Adversarial Networks (GANs), utilize the element of surprise and expectation to create captivating and emotionally engaging compositions. They play with the listener’s anticipation, offering moments of tension and resolution, which add depth and excitement to the musical experience.

In a musical composition, composers establish patterns, melodic motifs, and harmonic progressions that the listener subconsciously starts to expect. These expectations are the “discriminator” in this analogy, as they act as a reference point against which the composer can generate moments of tension and surprise, similar to the generator’s role in a GAN.

When a composer introduces a musical phrase that deviates from what the listener expects, it creates tension. This deviation can be through unexpected harmonies, dissonant intervals, rhythmic variations, or sudden changes in dynamics. This is akin to the “fake data” generated by the GAN’s generator or the deceptive movements used by athletes to outwit their opponents.

Just as a GAN’s discriminator learns from previous encounters to recognize fake data better, listeners’ musical discrimination skills improve over time as they become more familiar with different compositions and musical styles. As a result, composers must continually innovate and challenge the listener’s expectations to keep the music engaging and fresh.

The resolution in music, which ultimately satisfies the listener’s expectations, is the equivalent of a GAN’s generator producing data that appears realistic enough to deceive the discriminator successfully. Composers craft resolutions that give a sense of closure and fulfillment by returning to familiar themes, tonal centers, or melodic patterns.

A well-composed musical piece strikes a balance between unexpected twists and satisfying resolutions. Too many surprises without resolution can leave listeners disoriented and unsatisfied, just as a GAN’s generator may produce meaningless or unrealistic data. On the other hand, predictability without any element of surprise can result in boredom, both in music and in the world of sports.

Let’s illustrate this concept with a simple Python code snippet representing a musical script in the form of sheet music:

pip install music21

In this simple musical script, the notes and chords create an expected melody and progression in the key of C major. By introducing new harmonies or rhythms at strategic points, the composer can generate tension and surprise in the music, capturing the listener’s attention. Ultimately, the music will return to familiar notes and chords, resolving the tension and providing a satisfying conclusion.

In conclusion, just as GANs and competitive sports benefit from generating fakes and challenging adversaries, composers in music use the listener’s expectations and create tension through deviations, only to resolve it with familiar elements, creating a rich and engaging musical experience.

Show code cell source

!pip install music21

import os

from music21 import *

from IPython.display import Image, display

# Set the path to the MuseScore executable

musescore_path = '/Applications/MuseScore 4.app/Contents/MacOS/mscore'

us = environment.UserSettings()

us['musicxmlPath'] = musescore_path

# Create a score

score = stream.Score()

# Create a tempo

tempo = tempo.MetronomeMark(number=120)

# Create a key signature (C major)

key_signature = key.KeySignature(0)

# Create a time signature (4/4)

time_signature = meter.TimeSignature('4/4')

# Create a music stream

music_stream = stream.Stream()

# Add the tempo, key signature, and time signature to the music stream

music_stream.append(tempo)

music_stream.append(key_signature)

music_stream.append(time_signature)

# Define a list of note names

notes = ['C', 'D', 'E', 'F', 'G', 'A', 'B', 'C5']

# Create notes and add them to the music stream

for note_name in notes:

new_note = note.Note(note_name, quarterLength=1)

music_stream.append(new_note)

# Define a list of chords

chords = [chord.Chord(['C', 'E', 'G']), chord.Chord(['F', 'A', 'C']), chord.Chord(['G', 'B', 'D'])]

# Add chords to the music stream

for c in chords:

music_stream.append(c)

# Add the music stream to the score

# score.insert(0, music_stream)

# Check the contents of the music_stream

# print(music_stream.show('text'))

# Save the score as MusicXML

musicxml_path = '/users/d/desktop/music21_example.musicxml'

# score.write('musicxml', fp=musicxml_path)

# Define the path for the PNG image

# png_path = '/users/d/desktop/music21_example.png'

# Convert the MusicXML to a PNG image

# conv = converter.subConverters.ConverterMusicXML()

# conv.write(score, 'png', png_path)

# Display the PNG image

# display(Image(filename=png_path))

# Clean up temporary files if desired

# os.remove(musicxml_path)

# os.remove(png_path)

Requirement already satisfied: music21 in /Users/d/Dropbox (Personal)/1f.ἡἔρις,κ/1.ontology/myenv/lib/python3.11/site-packages (9.1.0)

Requirement already satisfied: chardet in /Users/d/Dropbox (Personal)/1f.ἡἔρις,κ/1.ontology/myenv/lib/python3.11/site-packages (from music21) (5.2.0)

Requirement already satisfied: joblib in /Users/d/Dropbox (Personal)/1f.ἡἔρις,κ/1.ontology/myenv/lib/python3.11/site-packages (from music21) (1.3.1)

Requirement already satisfied: jsonpickle in /Users/d/Dropbox (Personal)/1f.ἡἔρις,κ/1.ontology/myenv/lib/python3.11/site-packages (from music21) (3.0.1)

Requirement already satisfied: matplotlib in /Users/d/Dropbox (Personal)/1f.ἡἔρις,κ/1.ontology/myenv/lib/python3.11/site-packages (from music21) (3.7.2)

Requirement already satisfied: more-itertools in /Users/d/Dropbox (Personal)/1f.ἡἔρις,κ/1.ontology/myenv/lib/python3.11/site-packages (from music21) (10.1.0)

Requirement already satisfied: numpy in /Users/d/Dropbox (Personal)/1f.ἡἔρις,κ/1.ontology/myenv/lib/python3.11/site-packages (from music21) (1.25.2)

Requirement already satisfied: requests in /Users/d/Dropbox (Personal)/1f.ἡἔρις,κ/1.ontology/myenv/lib/python3.11/site-packages (from music21) (2.31.0)

Requirement already satisfied: webcolors>=1.5 in /Users/d/Dropbox (Personal)/1f.ἡἔρις,κ/1.ontology/myenv/lib/python3.11/site-packages (from music21) (1.13)

Requirement already satisfied: contourpy>=1.0.1 in /Users/d/Dropbox (Personal)/1f.ἡἔρις,κ/1.ontology/myenv/lib/python3.11/site-packages (from matplotlib->music21) (1.1.0)

Requirement already satisfied: cycler>=0.10 in /Users/d/Dropbox (Personal)/1f.ἡἔρις,κ/1.ontology/myenv/lib/python3.11/site-packages (from matplotlib->music21) (0.11.0)

Requirement already satisfied: fonttools>=4.22.0 in /Users/d/Dropbox (Personal)/1f.ἡἔρις,κ/1.ontology/myenv/lib/python3.11/site-packages (from matplotlib->music21) (4.42.0)

Requirement already satisfied: kiwisolver>=1.0.1 in /Users/d/Dropbox (Personal)/1f.ἡἔρις,κ/1.ontology/myenv/lib/python3.11/site-packages (from matplotlib->music21) (1.4.4)

Requirement already satisfied: packaging>=20.0 in /Users/d/Dropbox (Personal)/1f.ἡἔρις,κ/1.ontology/myenv/lib/python3.11/site-packages (from matplotlib->music21) (23.1)

Requirement already satisfied: pillow>=6.2.0 in /Users/d/Dropbox (Personal)/1f.ἡἔρις,κ/1.ontology/myenv/lib/python3.11/site-packages (from matplotlib->music21) (10.0.0)

Requirement already satisfied: pyparsing<3.1,>=2.3.1 in /Users/d/Dropbox (Personal)/1f.ἡἔρις,κ/1.ontology/myenv/lib/python3.11/site-packages (from matplotlib->music21) (3.0.9)

Requirement already satisfied: python-dateutil>=2.7 in /Users/d/Dropbox (Personal)/1f.ἡἔρις,κ/1.ontology/myenv/lib/python3.11/site-packages (from matplotlib->music21) (2.8.2)

Requirement already satisfied: charset-normalizer<4,>=2 in /Users/d/Dropbox (Personal)/1f.ἡἔρις,κ/1.ontology/myenv/lib/python3.11/site-packages (from requests->music21) (3.2.0)

Requirement already satisfied: idna<4,>=2.5 in /Users/d/Dropbox (Personal)/1f.ἡἔρις,κ/1.ontology/myenv/lib/python3.11/site-packages (from requests->music21) (3.4)

Requirement already satisfied: urllib3<3,>=1.21.1 in /Users/d/Dropbox (Personal)/1f.ἡἔρις,κ/1.ontology/myenv/lib/python3.11/site-packages (from requests->music21) (2.0.4)

Requirement already satisfied: certifi>=2017.4.17 in /Users/d/Dropbox (Personal)/1f.ἡἔρις,κ/1.ontology/myenv/lib/python3.11/site-packages (from requests->music21) (2023.7.22)

Requirement already satisfied: six>=1.5 in /Users/d/Dropbox (Personal)/1f.ἡἔρις,κ/1.ontology/myenv/lib/python3.11/site-packages (from python-dateutil>=2.7->matplotlib->music21) (1.16.0)

{0.0} <music21.tempo.MetronomeMark animato Quarter=120>

{0.0} <music21.key.KeySignature of no sharps or flats>

{0.0} <music21.meter.TimeSignature 4/4>

{0.0} <music21.note.Note C>

{1.0} <music21.note.Note D>

{2.0} <music21.note.Note E>

{3.0} <music21.note.Note F>

{4.0} <music21.note.Note G>

{5.0} <music21.note.Note A>

{6.0} <music21.note.Note B>

{7.0} <music21.note.Note C>

{8.0} <music21.chord.Chord C E G>

{9.0} <music21.chord.Chord F A C>

{10.0} <music21.chord.Chord G B D>

None

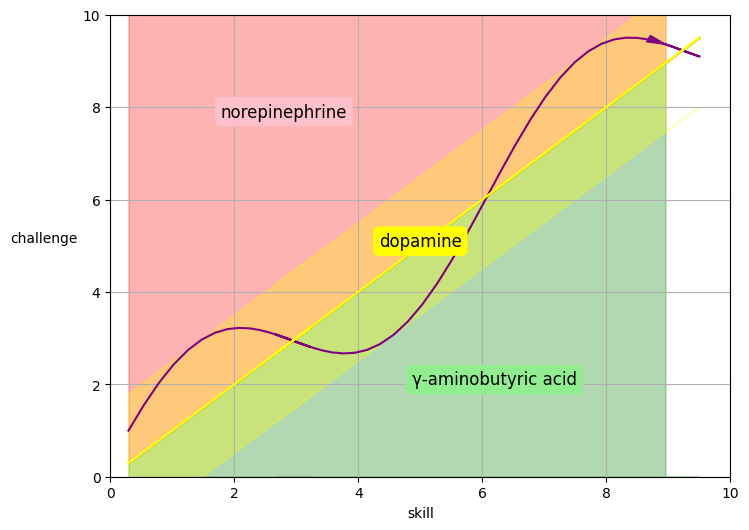

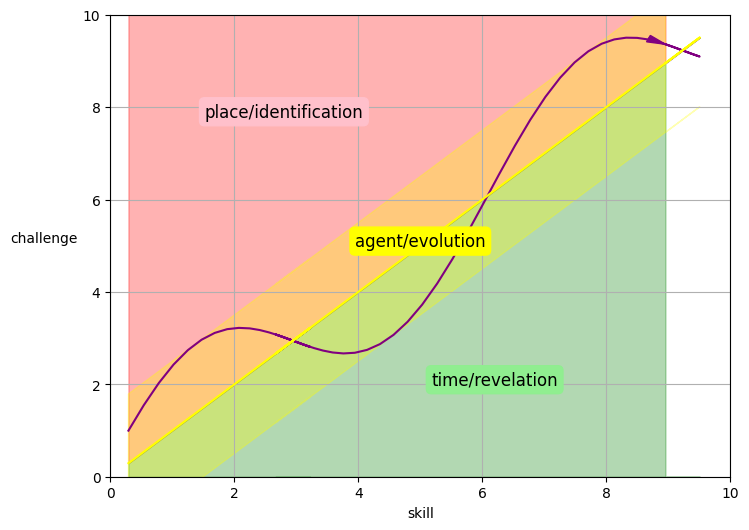

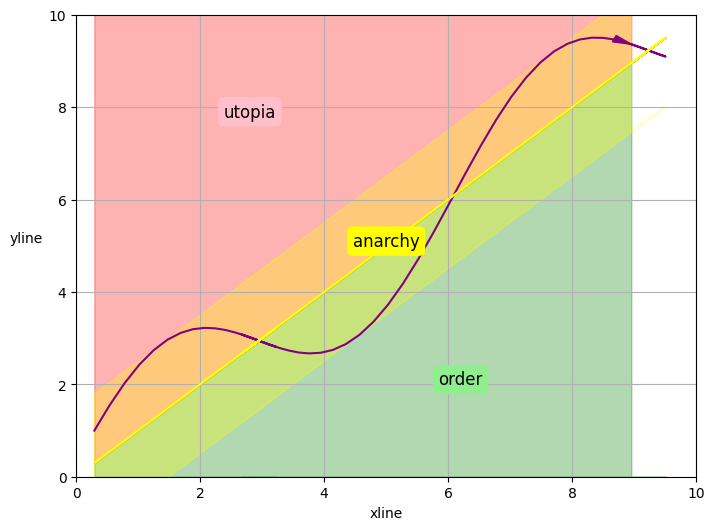

841. learning#

generative adversarial networks

challenge-level, skill-level, and equiping students with the right tools to “level up”

use this approach to create a “learning” GAN for any sort of course but starting with a course on Stata

To design a Stata Programming class with the flexibility to adapt it into Python and R Programming classes, we can organize the content according to the provided headings in the _toc.yml file. We will structure the course into five acts, and each act will contain three to six scenes representing different chapters or topics. Each scene will be a learning module or topic that covers a specific aspect of Stata programming (and later Python and R programming).

Let’s begin by creating the _toc.yml:

Skip to main content

Have any feedback? Please participate in this survey

Logo image

Fenagas

Prologue

Act I

Manuscripts

Code

Git

Act II

Python

AI

R

Stata

Act III

Nonparametric

Semiparametric

Parametric

Simulation

Uses, abuses

Act IV

Truth

Rigor

Error

Sloppiness

Fraud

Learning

Act V

Oneway

Twoway

Multivariable

Hierarchical

Clinical

Public

Epilogue

Open Science

Self publish

Published

Grants

Proposals

Git & Spoke

Automate

Bash

Unix

Courses

Stata Programming

Now, let’s create a brief description for each act and scene:

Act I - Introduction to Research Manuscripts and Version Control

Scene 1 - Understanding Research Manuscripts This scene will provide an overview of research manuscripts, their structure, and the importance of clear documentation in reproducible research.

Scene 2 - Introduction to Code In this scene, we will introduce coding concepts, syntax, and the use of Stata, Python, and R as programming languages for data analysis.

Scene 3 - Version Control with Git Students will learn the fundamentals of version control using Git, a powerful tool for tracking changes in code and collaborating with others.

Act II - Exploring Data Analysis with Python, AI, R, and Stata

Scene 1 - Python for Data Analysis This scene will cover basic data analysis tasks using Python, focusing on data manipulation, visualization, and statistical analysis.

Scene 2 - Introduction to Artificial Intelligence (AI) Students will gain insights into AI concepts and applications, including machine learning, deep learning, and generative adversarial networks (GANs).

Scene 3 - R for Data Science In this scene, we’ll explore R’s capabilities for data analysis, statistical modeling, and creating visualizations.

Scene 4 - Introduction to Stata Students will be introduced to Stata programming, including data management, analysis, and graphing features.

Act III - Advanced Topics in Data Analysis

Scene 1 - Nonparametric Statistics This scene will delve into nonparametric statistical methods and their applications in various research scenarios.

Scene 2 - Semiparametric Statistics Students will learn about semiparametric models and their advantages in handling complex data structures.

Scene 3 - Parametric Modeling This scene will cover parametric statistical models and their assumptions, along with practical implementation in the chosen programming languages.

Scene 4 - Simulation Techniques In this scene, students will learn about simulation methods to replicate observed data and explore “what if” scenarios in their analyses.

Scene 5 - Data Analysis Uses and Abuses We will discuss common mistakes and pitfalls in data analysis, emphasizing the importance of data integrity and robustness.

Act IV - Ensuring Data Quality and Integrity

Scene 1 - Seeking Truth in Research This scene will highlight the importance of truth-seeking in research and the impact of biased results on scientific discoveries.

Scene 2 - Rigorous Research Methods Students will learn about various rigorous research methodologies to ensure valid and reliable findings.

Scene 3 - Identifying and Addressing Errors We will explore different types of errors in research and how to identify and correct them during the data analysis process.

Scene 4 - Preventing Sloppiness in Analysis This scene will discuss best practices to avoid careless mistakes in data analysis that may compromise research outcomes.

Scene 5 - Fraud Detection in Research Students will explore methods and approaches to detect and prevent fraud in clinical and public health research.

Scene 6 - Learning from Data Drawing inspiration from Generative Adversarial Networks (GANs), this scene will encourage students to learn from data by simulating expected outcomes based on observed data.

Act V - Advanced Data Visualization and Reporting

Scene 1 - Oneway Plots and Scatterplots This scene will focus on creating oneway plots and scatterplots with jitter and overlapped mean and 95% CI bars to compare variables.

Scene 2 - Twoway Plots and Multivariable Visualization We will cover twoway plots and multivariable visualizations to explore relationships between multiple variables.

Scene 3 - Hierarchical Data Visualization Students will learn techniques for visualizing hierarchical data structures effectively.

Scene 4 - Data Visualization in Clinical Research This scene will demonstrate visualization methods specifically tailored to clinical research scenarios.

Scene 5 - Communicating Research to the Public In this final scene, we will explore effective ways to communicate research findings to the general public.

Epilogue - Advancing Open Science and Professional Development

Scene 1 - Embracing Open Science This scene will emphasize the importance of open science practices and promoting transparency in research.

Scene 2 - Self-publishing and Open Access Students will learn about self-publishing options and the benefits of open access to research outputs.

Scene 3 - Getting Published in Journals This scene will guide students through the process of getting research published in academic journals.

Scene 4 - Grant Writing and Proposal Development We will cover essential aspects of grant writing and proposal development for research funding.

Scene 5 - Git and Collaborative Workflows In this final scene, students will explore advanced Git workflows for collaborative programming projects.

Automate - Bash and Unix Scripting for Automation This optional chapter will introduce students to automation using bash and Unix scripting.

Courses - Explore Other Programming Courses Students will be provided with resources to explore more specialized programming courses beyond Stata, Python, and R.

With this _toc.yml and the organized content for each part and scene, the Stata Programming class can be easily adapted into Python and R Programming classes by modifying the programming language-specific examples and exercises while keeping the underlying concepts and topics consistent.

Based on the provided _toc.yml in the jb-book format, here is the redesigned Stata Programming class:

Please note that the content of each chapter may need to be written separately in the corresponding .ipynb or .md files. The organization of the class remains the same with five acts, each containing multiple scenes (chapters) covering different topics in Stata programming. You can add content and explanations in the respective files according to the intended class structure.

Sure, here’s the redesigned Stata Programming class with all files as .ipynb:

With this format, all chapters are now represented as .ipynb files, making it easier to create, manage, and access the content in Jupyter Notebook format. Please ensure that the content of each .ipynb file is written appropriately to deliver the Stata Programming class effectively.

Sure, here’s the redesigned Stata Programming class with 10 scenes per act:

Root: intro.ipynb

Title: Fenagas

Parts:

- Caption:

Chapters:

- File: prologue.ipynb

- Caption: Act I

Chapters:

- File: content/lessons/l1/act1_1.ipynb

- File: content/lessons/l1/act1_2.ipynb

- File: content/lessons/l1/act1_3.ipynb

- File: content/lessons/l1/act1_4.ipynb

- File: content/lessons/l1/act1_5.ipynb

- File: content/lessons/l1/act1_6.ipynb

- File: content/lessons/l1/act1_7.ipynb

- File: content/lessons/l1/act1_8.ipynb

- File: content/lessons/l1/act1_9.ipynb

- File: content/lessons/l1/act1_10.ipynb

- Caption: Act II

Chapters:

- File: content/lessons/l2/act2_1.ipynb

- File: content/lessons/l2/act2_2.ipynb

- File: content/lessons/l2/act2_3.ipynb

- File: content/lessons/l2/act2_4.ipynb

- File: content/lessons/l2/act2_5.ipynb

- File: content/lessons/l2/act2_6.ipynb

- File: content/lessons/l2/act2_7.ipynb

- File: content/lessons/l2/act2_8.ipynb

- File: content/lessons/l2/act2_9.ipynb

- File: content/lessons/l2/act2_10.ipynb

- Caption: Act III

Chapters:

- File: content/lessons/l3/act3_1.ipynb

- File: content/lessons/l3/act3_2.ipynb

- File: content/lessons/l3/act3_3.ipynb

- File: content/lessons/l3/act3_4.ipynb

- File: content/lessons/l3/act3_5.ipynb

- File: content/lessons/l3/act3_6.ipynb

- File: content/lessons/l3/act3_7.ipynb

- File: content/lessons/l3/act3_8.ipynb

- File: content/lessons/l3/act3_9.ipynb

- File: content/lessons/l3/act3_10.ipynb

- Caption: Act IV

Chapters:

- File: content/lessons/l4/act4_1.ipynb

- File: content/lessons/l4/act4_2.ipynb

- File: content/lessons/l4/act4_3.ipynb

- File: content/lessons/l4/act4_4.ipynb

- File: content/lessons/l4/act4_5.ipynb

- File: content/lessons/l4/act4_6.ipynb

- File: content/lessons/l4/act4_7.ipynb

- File: content/lessons/l4/act4_8.ipynb

- File: content/lessons/l4/act4_9.ipynb

- File: content/lessons/l4/act4_10.ipynb

- Caption: Act V

Chapters:

- File: content/lessons/l5/act5_1.ipynb

- File: content/lessons/l5/act5_2.ipynb

- File: content/lessons/l5/act5_3.ipynb

- File: content/lessons/l5/act5_4.ipynb

- File: content/lessons/l5/act5_5.ipynb

- File: content/lessons/l5/act5_6.ipynb

- File: content/lessons/l5/act5_7.ipynb

- File: content/lessons/l5/act5_8.ipynb

- File: content/lessons/l5/act5_9.ipynb

- File: content/lessons/l5/act5_10.ipynb

- Caption: Epilogue

Chapters:

- File: content/lessons/l6/epi_1.ipynb

- File: content/lessons/l6/epi_2.ipynb

- File: content/lessons/l6/epi_3.ipynb

- File: content/lessons/l6/epi_4.ipynb

- File: content/lessons/l6/epi_5.ipynb

- File: content/lessons/l6/epi_6.ipynb

- File: content/lessons/l6/epi_7.ipynb

- File: content/lessons/l6/epi_8.ipynb

- File: content/lessons/l6/epi_9.ipynb

- File: content/lessons/l6/epi_10.ipynb

- Caption: Git & Spoke

Chapters:

- File: content/lessons/l7/act7_1.ipynb

- File: content/lessons/l7/act7_2.ipynb

- File: content/lessons/l7/act7_3.ipynb

- File: content/lessons/l7/act7_4.ipynb

- File: content/lessons/l7/act7_5.ipynb

- File: content/lessons/l7/act7_6.ipynb

- File: content/lessons/l7/act7_7.ipynb

- File: content/lessons/l7/act7_8.ipynb

- File: content/lessons/l7/act7_9.ipynb

- File: content/lessons/l7/act7_10.ipynb

- Caption: Feedback

Chapters:

- File: content/lessons/1_survey9.ipynb

- Caption: About Fena

Chapters:

- File: content/lessons/about/cite.ipynb

- File: content/lessons/about/changelog.ipynb

- Caption: Courses

Chapters:

- URL: https://publichealth.jhu.edu/courses

Title: Stata Programming

- File: content/dramatispersonae/high_school_students/high_school_students.ipynb

- File: content/dramatispersonae/undergraduates/undergraduates.ipynb

- File: content/dramatispersonae/graduate_students/graduate_students.ipynb

- File: content/dramatispersonae/medical_students/medical_students.ipynb

Sure! Below is a bash script named stataclass.sh that creates the folder structure and places the .ipynb files in the appropriate folders:

#!/bin/bash

# Create folders

mkdir -p content/lessons/l1

mkdir -p content/lessons/l2

mkdir -p content/lessons/l3

mkdir -p content/lessons/l4

mkdir -p content/lessons/l5

mkdir -p content/lessons/l6

mkdir -p content/lessons/l7

mkdir -p content/dramatispersonae/high_school_students

mkdir -p content/dramatispersonae/undergraduates

mkdir -p content/dramatispersonae/graduate_students

mkdir -p content/dramatispersonae/medical_students

mkdir -p content/dramatispersonae/residents

mkdir -p content/dramatispersonae/fellows

mkdir -p content/dramatispersonae/faculty

mkdir -p content/dramatispersonae/analysts

mkdir -p content/dramatispersonae/staff

mkdir -p content/dramatispersonae/collaborators

mkdir -p content/dramatispersonae/graduates

mkdir -p content/dramatispersonae/high_school_students/calvin_mathews

mkdir -p content/dramatispersonae/medical_students/andrew_arking

mkdir -p content/lessons/l6

mkdir -p content/lessons/l7

# Place the .ipynb files in appropriate folders

cp prologue.ipynb content/

cp content/lessons/l1/act1_1.ipynb content/lessons/l1/

cp content/lessons/l1/act1_2.ipynb content/lessons/l1/

cp content/lessons/l1/act1_3.ipynb content/lessons/l1/

cp content/lessons/l1/act1_4.ipynb content/lessons/l1/

cp content/lessons/l1/act1_5.ipynb content/lessons/l1/

cp content/lessons/l1/act1_6.ipynb content/lessons/l1/

cp content/lessons/l1/act1_7.ipynb content/lessons/l1/

cp content/lessons/l1/act1_8.ipynb content/lessons/l1/

cp content/lessons/l1/act1_9.ipynb content/lessons/l1/

cp content/lessons/l1/act1_10.ipynb content/lessons/l1/

# Continue copying .ipynb files to other folders as required...

echo "Folder structure and files have been created successfully."

This script will create all the necessary folders and copy the .ipynb files to their respective locations, as specified in the _toc.yml format. Note that you’ll need to manually complete the script by copying the remaining .ipynb files to the corresponding folders based on the _toc.yml. Save the script and run it to create the desired folder structure and files.

Absolutely! You are right; there is a more elegant and efficient way to create the folders and files using a bash loop. Here’s the updated version of the stataclass.sh script using your suggestion:

#!/bin/bash

# Create folders

mkdir -p content/lessons/l{1..7}

mkdir -p content/dramatispersonae/{high_school_students,undergraduates,graduate_students,medical_students,residents,fellows,faculty,analysts,staff,collaborators,graduates}

mkdir -p content/dramatispersonae/high_school_students/calvin_mathews

mkdir -p content/dramatispersonae/medical_students/andrew_arking

# Place the .ipynb files in appropriate folders

cp prologue.ipynb content/

cp content/lessons/l1/act1_{1..10}.ipynb content/lessons/l1/

# Continue copying .ipynb files to other folders as required...

echo "Folder structure and files have been created successfully."

This script uses brace expansion in the mkdir command to create the necessary folders in a more concise way. It also utilizes the bash loop with the array to create the .ipynb files for the dramatispersonae folder. It is both shorter and more readable, making it a more elegant solution. Just make sure to continue copying the remaining .ipynb files to the corresponding folders as specified in the _toc.yml format.

842. act1#

give students a tool to learn how to learn

a template of an entire manuscript.ipynb file

the python, ai, r, and stata programming scripts that support the manuscript.ipynb file

step-by-step instructions on creating a github account, a public, and private repository

push content to the public repository and use gh-pages to publish the content

843. streamline#

#!/bin/bash

# Change the working directory to the desired location

cd ~/dropbox/1f.ἡἔρις,κ/1.ontology

# Uncomment the following line if you need to create the "three40" directory

# nano three40.sh & paste the contents of the three40.sh file

# chmod +x three40.sh

# mkdir three40

# cd three40

# nano _toc.yml & paste the contents of the _toc.yml file

# Create the root folder

# mkdir -p three40

# Create the "intro.ipynb" file inside the "root" folder

touch three40/intro.ipynb

# Function to read the chapters from the YAML file using pure bash

get_chapters_from_yaml() {

local part="$1"

local toc_file="_toc.yml"

local lines

local in_part=false

while read -r line; do

if [[ "$line" == *"$part"* ]]; then

in_part=true

elif [[ "$line" == *"- File: "* ]]; then

if "$in_part"; then

echo "$line" | awk -F': ' '{print $2}' | tr -d ' '

fi

elif [[ "$line" == *"-"* ]]; then

in_part=false

fi

done < "$toc_file"

}

# Create parts and chapters based on the _toc.yml structure

parts=(

"Act I"

"Act II"

"Act III"

"Act IV"

"Act V"

"Epilogue"

"Git & Spoke"

"Courses"

)

# Loop through parts and create chapters inside each part folder

for part in "${parts[@]}"; do

part_folder="three40/$part"

mkdir -p "$part_folder"

# Get the chapters for the current part from the _toc.yml

chapters=($(get_chapters_from_yaml "$part"))

# Create chapter files inside the part folder

for chapter in "${chapters[@]}"; do

touch "$part_folder/$chapter"

done

done

# Create folders for dramatispersonae

files=(

"high_school_students/high_school_students.ipynb"

"undergraduates/undergraduates.ipynb"

"graduate_students/graduate_students.ipynb"

"medical_students/medical_students.ipynb"

"residents/residents.ipynb"

"fellows/fellows.ipynb"

"faculty/faculty.ipynb"

"analysts/analysts.ipynb"

"staff/staff.ipynb"

"collaborators/collaborators.ipynb"

"graduates/graduates.ipynb"

"high_school_students/calvin_mathews/calvin_mathews.ipynb"

"medical_students/andrew_arking/andrew_arking.ipynb"

"medical_students/andrew_arking/andrew_arking_1.ipynb"

"collaborators/fawaz_al_ammary/fawaz_al_ammary.ipynb"

"collaborators/fawaz_al_ammary/fawaz_al_ammary_1.ipynb"

)

# Loop through the file paths and create the corresponding directories

for file_path in "${files[@]}"; do

# Remove the common prefix "content/dramatispersonae/" from the file path

dir_path=${file_path#content/dramatispersonae/}

# Create the directory

mkdir -p "three40/content/dramatispersonae/$dir_path"

done

echo "Folder structure has been created successfully."

Root: intro.ipynb

Title: Fenagas

Parts:

- Caption:

Chapters:

- File: prologue.ipynb

- Caption: Act I

Chapters:

- File: content/lessons/l1/act1_1.ipynb

- File: content/lessons/l1/act1_2.ipynb

- File: content/lessons/l1/act1_3.ipynb

- File: content/lessons/l1/act1_4.ipynb

- File: content/lessons/l1/act1_5.ipynb

- File: content/lessons/l1/act1_6.ipynb

- File: content/lessons/l1/act1_7.ipynb

- File: content/lessons/l1/act1_8.ipynb

- File: content/lessons/l1/act1_9.ipynb

- File: content/lessons/l1/act1_10.ipynb

- Caption: Act II

Chapters:

- File: content/lessons/l2/act2_1.ipynb

- File: content/lessons/l2/act2_2.ipynb

- File: content/lessons/l2/act2_3.ipynb

- File: content/lessons/l2/act2_4.ipynb

- File: content/lessons/l2/act2_5.ipynb

- File: content/lessons/l2/act2_6.ipynb

- File: content/lessons/l2/act2_7.ipynb

- File: content/lessons/l2/act2_8.ipynb

- File: content/lessons/l2/act2_9.ipynb

- File: content/lessons/l2/act2_10.ipynb

- Caption: Act III

Chapters:

- File: content/lessons/l3/act3_1.ipynb

- File: content/lessons/l3/act3_2.ipynb

- File: content/lessons/l3/act3_3.ipynb

- File: content/lessons/l3/act3_4.ipynb

- File: content/lessons/l3/act3_5.ipynb

- File: content/lessons/l3/act3_6.ipynb

- File: content/lessons/l3/act3_7.ipynb

- File: content/lessons/l3/act3_8.ipynb

- File: content/lessons/l3/act3_9.ipynb

- File: content/lessons/l3/act3_10.ipynb

- Caption: Act IV

Chapters:

- File: content/lessons/l4/act4_1.ipynb

- File: content/lessons/l4/act4_2.ipynb

- File: content/lessons/l4/act4_3.ipynb

- File: content/lessons/l4/act4_4.ipynb

- File: content/lessons/l4/act4_5.ipynb

- File: content/lessons/l4/act4_6.ipynb

- File: content/lessons/l4/act4_7.ipynb

- File: content/lessons/l4/act4_8.ipynb

- File: content/lessons/l4/act4_9.ipynb

- File: content/lessons/l4/act4_10.ipynb

- Caption: Act V

Chapters:

- File: content/lessons/l5/act5_1.ipynb

- File: content/lessons/l5/act5_2.ipynb

- File: content/lessons/l5/act5_3.ipynb

- File: content/lessons/l5/act5_4.ipynb

- File: content/lessons/l5/act5_5.ipynb

- File: content/lessons/l5/act5_6.ipynb

- File: content/lessons/l5/act5_7.ipynb

- File: content/lessons/l5/act5_8.ipynb

- File: content/lessons/l5/act5_9.ipynb

- File: content/lessons/l5/act5_10.ipynb

- Caption: Epilogue

Chapters:

- File: content/lessons/l6/epi_1.ipynb

- File: content/lessons/l6/epi_2.ipynb

- File: content/lessons/l6/epi_3.ipynb

- File: content/lessons/l6/epi_4.ipynb

- File: content/lessons/l6/epi_5.ipynb

- File: content/lessons/l6/epi_6.ipynb

- File: content/lessons/l6/epi_7.ipynb

- File: content/lessons/l6/epi_8.ipynb

- File: content/lessons/l6/epi_9.ipynb

- File: content/lessons/l6/epi_10.ipynb

- Caption: Git & Spoke

Chapters:

- File: content/lessons/l7/act7_1.ipynb

- File: content/lessons/l7/act7_2.ipynb

- File: content/lessons/l7/act7_3.ipynb

- File: content/lessons/l7/act7_4.ipynb

- File: content/lessons/l7/act7_5.ipynb

- File: content/lessons/l7/act7_6.ipynb

- File: content/lessons/l7/act7_7.ipynb

- File: content/lessons/l7/act7_8.ipynb

- File: content/lessons/l7/act7_9.ipynb

- File: content/lessons/l7/act7_10.ipynb

- Caption: Courses

Chapters:

- URL: https://publichealth.jhu.edu/courses

Title: Stata Programming

- file: content/dramatispersonae/high_school_students/high_school_students.ipynb

- file: content/dramatispersonae/undergraduates/undergraduates.ipynb

- file: content/dramatispersonae/graduate_students/graduate_students.ipynb

- file: content/dramatispersonae/medical_students/medical_students.ipynb

- file: content/dramatispersonae/residents/residents.ipynb

- file: content/dramatispersonae/fellows/fellows.ipynb

- file: content/dramatispersonae/faculty/faculty.ipynb

- file: content/dramatispersonae/analysts/analysts.ipynb

- file: content/dramatispersonae/staff/staff.ipynb

- file: content/dramatispersonae/collaborators/collaborators.ipynb

- file: content/dramatispersonae/graduates/graduates.ipynb

- file: content/dramatispersonae/high_school_students/calvin_mathews/calvin_mathews.ipynb

- file: content/dramatispersonae/medical_students/andrew_arking/andrew_arking.ipynb

- file: content/dramatispersonae/medical_students/andrew_arking/andrew_arking_1.ipynb

- file: content/dramatispersonae/collaborators/fawaz_al_ammary/fawaz_al_ammary.ipynb

- file: content/dramatispersonae/collaborators/fawaz_al_ammary/fawaz_al_ammary_1.ipynb

844. revolution#

#!/bin/bash

# Step 1: Navigate to the '1f.ἡἔρις,κ' directory in the 'dropbox' folder

cd ~/dropbox/1f.ἡἔρις,κ/1.ontology

# Step 2: Create and edit the 'three40.sh' file using 'nano'

nano three40.sh

# Step 3: Add execute permissions to the 'three40.sh' script

chmod +x three40.sh

# Step 4: Run the 'three40.sh' script

./three40.sh

# Step 5: Create the 'three40' directory

mkdir three40

# Step 6: Navigate to the 'three40' directory

cd three40

# Step 7: Create and edit the '_toc.yml' file using 'nano'

nano _toc.yml

three40/

├── intro.ipynb

├── prologue.ipynb

├── content/

│ └── lessons/

│ └── l1/

│ ├── act1_1.ipynb

│ ├── act1_2.ipynb

│ ├── act1_3.ipynb

│ └── ...

│ └── l2/

│ ├── act2_1.ipynb

│ ├── act2_2.ipynb

│ └── ...

│ └── ...

│ └── l7/

│ ├── act7_1.ipynb

│ ├── act7_2.ipynb

│ └── ...

├── dramatispersonae/

│ └── high_school_students/

│ └── ...

│ └── undergraduates/

│ └── ...

│ └── ...

│ └── graduates/

│ └── ...

└── ...

845. yml#

#!/bin/bash

# Change the working directory to the desired location

cd ~/dropbox/1f.ἡἔρις,κ/1.ontology

# Uncomment the following line if you need to create the "three40" directory

# nano three40.sh & paste the contents of the three40.sh file

# chmod +x three40.sh

# mkdir three40

# cd three40

# Create the root folder

mkdir -p three40

# nano three40/_toc.yml & paste the contents of the _toc.yml file

# Create the "intro.ipynb" file inside the "root" folder

touch three40/intro.ipynb

# Function to read the chapters from the YAML file using pure bash

get_chapters_from_yaml() {

local part="$1"

local toc_file="three40/_toc.yml"

local lines

local in_part=false

while read -r line; do

if [[ "$line" == *"$part"* ]]; then

in_part=true

elif [[ "$line" == *"- File: "* ]]; then

if "$in_part"; then

echo "$line" | awk -F': ' '{print $2}' | tr -d ' '

fi

elif [[ "$line" == *"-"* ]]; then

in_part=false

fi

done < "$toc_file"

}

# Create parts and chapters based on the _toc.yml structure

parts=(

"Act I"

"Act II"

"Act III"

"Act IV"

"Act V"

"Epilogue"

"Git & Spoke"

"Courses"

)

# Loop through parts and create chapters inside each part folder

for part in "${parts[@]}"; do

part_folder="three40/$part"

mkdir -p "$part_folder"

# Get the chapters for the current part from the _toc.yml

chapters=($(get_chapters_from_yaml "$part"))

# Create chapter files inside the part folder

for chapter in "${chapters[@]}"; do

touch "$part_folder/$chapter.ipynb"

done

done

echo "Folder structure has been created successfully."

846. iteration~30#

846.1. structure#

Based on the provided information and incorporating the details under the “dramatispersonae” folder, the entire “three40/” directory structure will look like this:

three40/

├── intro.ipynb

├── prologue.ipynb

├── Act I/

│ ├── act1_1.ipynb

│ ├── act1_2.ipynb

│ ├── act1_3.ipynb

│ └── ...

├── Act II/

│ ├── act2_1.ipynb

│ ├── act2_2.ipynb

│ └── ...

├── Act III/

│ ├── act3_1.ipynb

│ ├── act3_2.ipynb

│ ├── act3_3.ipynb

│ ├── act3_4.ipynb

│ └── act3_5.ipynb

├── Act IV/

│ ├── act4_1.ipynb

│ ├── act4_2.ipynb

│ ├── act4_3.ipynb

│ ├── act4_4.ipynb

│ ├── act4_5.ipynb

│ └── act4_6.ipynb

├── Act V/

│ ├── act5_1.ipynb

│ ├── act5_2.ipynb

│ ├── act5_3.ipynb

│ ├── act5_4.ipynb

│ ├── act5_5.ipynb

│ └── act5_6.ipynb

├── Epilogue/

│ ├── epi_1.ipynb

│ ├── epi_2.ipynb

│ ├── epi_3.ipynb

│ ├── epi_4.ipynb

│ ├── epi_5.ipynb

│ ├── epi_6.ipynb

│ ├── epi_7.ipynb

│ └── epi_8.ipynb

├── Gas & Spoke/

│ ├── gas_1.ipynb

│ ├── gas_2.ipynb

│ └── gas_3.ipynb

└── dramatispersonae/

├── high_school_students/

│ ├── high_school_students_1/

│ │ └── ...

│ ├── high_school_students_2/

│ │ └── ...

│ ├── high_school_students_3/

│ │ └── ...

│ ├── high_school_students_4/

│ │ └── ...

│ └── high_school_students_5/

│ └── ...

├── undergraduates/

│ ├── undergraduates_1/

│ │ └── ...

│ ├── undergraduates_2/

│ │ └── ...

│ ├── undergraduates_3/

│ │ └── ...

│ ├── undergraduates_4/

│ │ └── ...

│ └── undergraduates_5/

│ └── ...

├── graduates/

│ ├── graduates_1/

│ │ └── ...

│ ├── graduates_2/

│ │ └── ...

│ ├── graduates_3/

│ │ └── ...

│ ├── graduates_4/

│ │ └── ...

│ └── graduates_5/

│ └── ...

├── medical_students/

│ ├── medical_students_1/

│ │ └── ...

│ ├── medical_students_2/

│ │ └── ...

│ ├── medical_students_3/

│ │ └── ...

│ ├── medical_students_4/

│ │ └── ...

│ └── medical_students_5/

│ └── ...

├── residents/

│ ├── residents_1/

│ │ └── ...

│ ├── residents_2/

│ │ └── ...

│ ├── residents_3/

│ │ └── ...

│ ├── residents_4/

│ │ └── ...

│ └── residents_5/

│ └── ...

├── fellows/

│ ├── fellows_1/

│ │ └── ...

│ ├── fellows_2/

│ │ └── ...

│ ├── fellows_3/

│ │ └── ...

│ ├── fellows_4/

│ │ └── ...

│ └── fellows_5/

│ └── ...

├── faculty/

│ ├── faculty_1/

│ │ └── ...

│ ├── faculty_2/

│ │ └── ...

│ ├── faculty_3/

│ │ └── ...

│ ├── faculty_4/

│ │ └── ...

│ └── faculty_5/

│ └── ...

├── analysts/

│ ├── analysts_1/

│ │ └── ...

│ ├── analysts_2/

│ │ └── ...

│ ├── analysts_3/

│ │ └── ...

│ ├── analysts_4/

│ │ └── ...

│ └── analysts_5/

│ └── ...

├── staff/

│ ├── staff_1/

│ │ └── ...

│ ├── staff_2/

│ │ └── ...

│ ├── staff_3/

│ │ └── ...

│ ├── staff_4/

│ │ └── ...

│ └── staff_5/

│ └── ...

└── collaborators/

├── collaborators_1/

│ └── ...

├── collaborators_2/

│ └── ...

├── collaborators_3/

│ └── ...

├── collaborators_4/

│ └── ...

└── collaborators_5/

└── ...

Note: The ellipsis (…) is used to represent additional files and directories that may be present but are not explicitly shown in the provided structure.

846.2. script#

#!/bin/bash

# Change the working directory to the desired location

cd ~/dropbox/1f.ἡἔρις,κ/1.ontology

# Uncomment the following line if you need to create the "three40" directory

# nano three40.sh & paste the contents of the three40.sh file

# chmod +x three40.sh

# mkdir three40

# cd three40

# Create the root folder

# mkdir -p three40

# nano three40/_toc.yml & paste the contents of the _toc.yml file

# Create the "intro.ipynb" file inside the "root" folder

touch three40/intro.ipynb

# Function to read the chapters from the YAML file using pure bash

get_chapters_from_yaml() {

local part="$1"

local toc_file="three40/_toc.yml"

local lines

local in_part=false

while read -r line; do

if [[ "$line" == *"$part"* ]]; then

in_part=true

elif [[ "$line" == *"- File: "* ]]; then

if "$in_part"; then

echo "$line" | awk -F': ' '{print $2}' | tr -d ' '

fi

elif [[ "$line" == *"-"* ]]; then

in_part=false

fi

done < "$toc_file"

}

# Create parts and chapters based on the _toc.yml structure

parts=(

"Root"

"Act I"

"Act II"

"Act III"

"Act IV"

"Act V"

"Epilogue"

"Git & Spoke"

"Courses"

)

# Loop through parts and create chapters inside each part folder

for part in "${parts[@]}"; do

part_folder="three40/$part"

mkdir -p "$part_folder"

if [[ "$part" == "Root" ]]; then

# Create the "prologue.ipynb" file inside the "Root" folder

touch "$part_folder/prologue.ipynb"

else

# Get the chapters for the current part from the _toc.yml

chapters=($(get_chapters_from_yaml "$part"))

# Create chapter files inside the part folder

for chapter in "${chapters[@]}"; do

# Extract the act number and create the act folder

act=$(echo "$chapter" | cut -d '/' -f 3)

act_folder="$part_folder/Act $act"

mkdir -p "$act_folder"

# Create the chapter file inside the act folder

touch "$act_folder/$(basename "$chapter" .ipynb).ipynb"

done

fi

done

# Create the "dramatispersonae" folder and its subdirectories with loop

dramatispersonae_folders=(

"high_school_students"

"undergraduates"

"graduates"

"medical_students"

"residents"

"fellows"

"faculty"

"analysts"

"staff"

"collaborators"

)

for folder in "${dramatispersonae_folders[@]}"; do

mkdir -p "three40/dramatispersonae/$folder"

touch "three40/dramatispersonae/$folder/$folder.ipynb"

done

# Create additional .ipynb files inside specific subdirectories

touch "three40/dramatispersonae/high_school_students/calvin_mathews/calvin_mathews.ipynb"

touch "three40/dramatispersonae/medical_students/andrew_arking/andrew_arking.ipynb"

touch "three40/dramatispersonae/medical_students/andrew_arking/andrew_arking_1.ipynb"

touch "three40/dramatispersonae/collaborators/fawaz_al_ammary/fawaz_al_ammary.ipynb"

touch "three40/dramatispersonae/collaborators/fawaz_al_ammary/fawaz_al_ammary_1.ipynb"

echo "Folder structure has been created successfully."

846.3. _toc.yaml#

format: jb-book

root: intro.ipynb

title: Play

parts:

- caption:

chapters:

- file: prologue.ipynb

- caption: Act I

chapters:

- file: Act I/act1_1.ipynb

- file: Act I/act1_2.ipynb

- file: Act I/act1_3.ipynb

- caption: Act II

chapters:

- file: Act II/act2_1.ipynb

- file: Act II/act2_2.ipynb

- file: Act II/act2_3.ipynb

- file: Act II/act2_4.ipynb

- caption: Act III

chapters:

- file: Act III/act3_1.ipynb

- file: Act III/act3_2.ipynb

- file: Act III/act3_3.ipynb

- file: Act III/act3_4.ipynb

- file: Act III/act3_5.ipynb

- caption: Act IV

chapters:

- file: Act IV/act4_1.ipynb

- file: Act IV/act4_2.ipynb

- file: Act IV/act4_3.ipynb

- file: Act IV/act4_4.ipynb

- file: Act IV/act4_5.ipynb

- file: Act IV/act4_6.ipynb

- caption: Act V

chapters:

- file: Act V/act5_1.ipynb

- file: Act V/act5_2.ipynb

- file: Act V/act5_3.ipynb

- file: Act V/act5_4.ipynb

- file: Act V/act5_5.ipynb

- file: Act V/act5_6.ipynb

- caption: Epilogue

chapters:

- file: Epilogue/epi_1.ipynb

- file: Epilogue/epi_2.ipynb

- file: Epilogue/epi_3.ipynb

- file: Epilogue/epi_4.ipynb

- file: Epilogue/epi_5.ipynb

- file: Epilogue/epi_6.ipynb

- file: Epilogue/epi_7.ipynb

- file: Epilogue/epi_8.ipynb

- caption: Gas & Spoke

chapters:

- file: Gas & Spoke/gas_1.ipynb

- file: Gas & Spoke/gas_2.ipynb

- file: Gas & Spoke/gas_3.ipynb

- caption: Courses

chapters:

- url: https://publichealth.jhu.edu/courses

title: Stata Programming

- file: dramatis_personae/high_school_students/high_school_students.ipynb

- file: dramatis_personae/high_school_students/high_school_students_1.ipynb

- file: dramatis_personae/high_school_students/high_school_students_2.ipynb

- file: dramatis_personae/high_school_students/high_school_students_3.ipynb

- file: dramatis_personae/high_school_students/high_school_students_4.ipynb

- file: dramatis_personae/high_school_students/high_school_students_5.ipynb

- file: dramatis_personae/under_grads/under_grads.ipynb

- file: dramatis_personae/under_grads/under_grads_1.ipynb

- file: dramatis_personae/under_grads/under_grads_2.ipynb

- file: dramatis_personae/under_grads/under_grads_3.ipynb

- file: dramatis_personae/under_grads/under_grads_4.ipynb

- file: dramatis_personae/under_grads/under_grads_5.ipynb

- file: dramatis_personae/grad_students/grad_students.ipynb

- file: dramatis_personae/grad_students_1/grad_students_1.ipynb

- file: dramatis_personae/grad_students_2/grad_students_2.ipynb

- file: dramatis_personae/grad_students_3/grad_students_3.ipynb

- file: dramatis_personae/grad_students_4/grad_students_4.ipynb

- file: dramatis_personae/grad_students_5/grad_students_5.ipynb

- file: dramatis_personae/medical_students/medical_students.ipynb

- file: dramatis_personae/medical_students/medical_students_1.ipynb

- file: dramatis_personae/medical_students/medical_students_2.ipynb

- file: dramatis_personae/medical_students/medical_students_3.ipynb

- file: dramatis_personae/medical_students/medical_students_4.ipynb

- file: dramatis_personae/medical_students/medical_students_5.ipynb

- file: dramatis_personae/residents/residents.ipynb

- file: dramatis_personae/residents/residents_1.ipynb

- file: dramatis_personae/residents/residents_2.ipynb

- file: dramatis_personae/residents/residents_3.ipynb

- file: dramatis_personae/residents/residents_4.ipynb

- file: dramatis_personae/residents/residents_5.ipynb

- file: dramatis_personae/fellows/fellows.ipynb

- file: dramatis_personae/fellows/fellows_1.ipynb

- file: dramatis_personae/fellows/fellows_2.ipynb

- file: dramatis_personae/fellows/fellows_3.ipynb

- file: dramatis_personae/fellows/fellows_4.ipynb

- file: dramatis_personae/fellows/fellows_5.ipynb

- file: dramatis_personae/faculty/faculty.ipynb

- file: dramatis_personae/faculty/faculty_1.ipynb

- file: dramatis_personae/faculty/faculty_2.ipynb

- file: dramatis_personae/faculty/faculty_3.ipynb

- file: dramatis_personae/faculty/faculty_4.ipynb

- file: dramatis_personae/faculty/faculty_5.ipynb

- file: dramatis_personae/analysts/analysts.ipynb

- file: dramatis_personae/analysts/analysts_1.ipynb

- file: dramatis_personae/analysts/analysts_2.ipynb

- file: dramatis_personae/analysts/analysts_3.ipynb

- file: dramatis_personae/analysts/analysts_4.ipynb

- file: dramatis_personae/analysts/analysts_5.ipynb

- file: dramatis_personae/staff/staff.ipynb

- file: dramatis_personae/staff/staff_1.ipynb

- file: dramatis_personae/staff/staff_2.ipynb

- file: dramatis_personae/staff/staff_3.ipynb

- file: dramatis_personae/staff/staff_4.ipynb

- file: dramatis_personae/staff/staff_5.ipynb

- file: dramatis_personae/collaborators/collaborators.ipynb

- file: dramatis_personae/collaborators/collaborators_1.ipynb

- file: dramatis_personae/collaborators/collaborators_2.ipynb

- file: dramatis_personae/collaborators/collaborators_3.ipynb

- file: dramatis_personae/collaborators/collaborators_4.ipynb

- file: dramatis_personae/collaborators/collaborators_5.ipynb

- file: dramatis_personae/graduates/graduates.ipynb

- file: dramatis_personae/graduates/graduates_1.ipynb

- file: dramatis_personae/graduates/graduates_2.ipynb

- file: dramatis_personae/graduates/graduates_3.ipynb

- file: dramatis_personae/graduates/graduates_4.ipynb

- file: dramatis_personae/graduates/graduates_5.ipynb

847. in-a-nutshell#

do i just codify the entire 07/01/2006 - 07/02/2023?

the entire 17 years of my jhu life?

if so then this revolution will be televised!

not another soul will be lost to the abyss of the unknowable

let them find other ways to get lost, other lifetasks to complete

848. notfancybutworks#

#!/bin/bash

# Change the working directory to the desired location

cd ~/dropbox/1f.ἡἔρις,κ/1.ontology

# Create the "three40" directory

mkdir -p three40

# Create the "Root" folder and the "intro.ipynb" file inside it

mkdir -p "three40/Root"

touch "three40/Root/intro.ipynb"

# Create the "prologue.ipynb" file in the "three40" directory

touch "three40/prologue.ipynb"

# Create "Act I" folder and its subfiles

mkdir -p "three40/Act I"

touch "three40/Act I/act1_1.ipynb"

touch "three40/Act I/act1_2.ipynb"

touch "three40/Act I/act1_3.ipynb"

# Create "Act II" folder and its subfiles

mkdir -p "three40/Act II"

touch "three40/Act II/act2_1.ipynb"

touch "three40/Act II/act2_2.ipynb"

touch "three40/Act II/act2_3.ipynb"

touch "three40/Act II/act2_4.ipynb"

# Create "Act III" folder and its subfiles

mkdir -p "three40/Act III"

touch "three40/Act III/act3_1.ipynb"

touch "three40/Act III/act3_2.ipynb"

touch "three40/Act III/act3_3.ipynb"

touch "three40/Act III/act3_4.ipynb"

touch "three40/Act III/act3_5.ipynb"

# Create "Act IV" folder and its subfiles

mkdir -p "three40/Act IV"

touch "three40/Act IV/act4_1.ipynb"

touch "three40/Act IV/act4_2.ipynb"

touch "three40/Act IV/act4_3.ipynb"

touch "three40/Act IV/act4_4.ipynb"

touch "three40/Act IV/act4_5.ipynb"

touch "three40/Act IV/act4_6.ipynb"

# Create "Act V" folder and its subfiles

mkdir -p "three40/Act V"

touch "three40/Act V/act5_1.ipynb"

touch "three40/Act V/act5_2.ipynb"

touch "three40/Act V/act5_3.ipynb"

touch "three40/Act V/act5_4.ipynb"

touch "three40/Act V/act5_5.ipynb"

touch "three40/Act V/act5_6.ipynb"

# Create "Epilogue" folder and its subfiles

mkdir -p "three40/Epilogue"

touch "three40/Epilogue/epi_1.ipynb"

touch "three40/Epilogue/epi_2.ipynb"

touch "three40/Epilogue/epi_3.ipynb"

touch "three40/Epilogue/epi_4.ipynb"

touch "three40/Epilogue/epi_5.ipynb"

touch "three40/Epilogue/epi_6.ipynb"

touch "three40/Epilogue/epi_7.ipynb"

touch "three40/Epilogue/epi_8.ipynb"

# Create "Git & Spoke" folder and its subfiles

mkdir -p "three40/Git & Spoke"

touch "three40/Git & Spoke/gas_1.ipynb"

touch "three40/Git & Spoke/gas_2.ipynb"

touch "three40/Git & Spoke/gas_3.ipynb"

# Create "Courses" folder and its subfiles

mkdir -p "three40/Courses"

touch "three40/Courses/course1.ipynb"

touch "three40/Courses/course2.ipynb"

# Create "dramatispersonae" folder and its subdirectories

mkdir -p "three40/dramatispersonae/high_school_students"

mkdir -p "three40/dramatispersonae/undergraduates"

mkdir -p "three40/dramatispersonae/graduates"

mkdir -p "three40/dramatispersonae/medical_students"

mkdir -p "three40/dramatispersonae/residents"

mkdir -p "three40/dramatispersonae/fellows"

mkdir -p "three40/dramatispersonae/faculty"

mkdir -p "three40/dramatispersonae/analysts"

mkdir -p "three40/dramatispersonae/staff"

mkdir -p "three40/dramatispersonae/collaborators"

# Create "dramatispersonae" subdirectories with suffixes _1 to _5

for branch in high_school_students undergraduates graduates medical_students residents fellows faculty analysts staff collaborators; do

for ((i=1; i<=5; i++)); do

mkdir -p "three40/dramatispersonae/${branch}/${branch}_${i}"

done

done

# Create additional .ipynb files inside specific subdirectories

touch "three40/dramatispersonae/high_school_students/high_school_students.ipynb"

touch "three40/dramatispersonae/undergraduates/undergraduates.ipynb"

touch "three40/dramatispersonae/graduates/graduates.ipynb"

touch "three40/dramatispersonae/medical_students/medical_students.ipynb"

touch "three40/dramatispersonae/residents/residents.ipynb"

touch "three40/dramatispersonae/fellows/fellows.ipynb"

touch "three40/dramatispersonae/faculty/faculty.ipynb"

touch "three40/dramatispersonae/analysts/analysts.ipynb"

touch "three40/dramatispersonae/staff/staff.ipynb"

touch "three40/dramatispersonae/collaborators/collaborators.ipynb"

touch "three40/dramatispersonae/high_school_students/high_school_students_1.ipynb"

touch "three40/dramatispersonae/high_school_students/high_school_students_2.ipynb"

touch "three40/dramatispersonae/high_school_students/high_school_students_3.ipynb"

touch "three40/dramatispersonae/high_school_students/high_school_students_4.ipynb"

touch "three40/dramatispersonae/high_school_students/high_school_students_5.ipynb"

touch "three40/dramatispersonae/undergraduates/undergraduates_1.ipynb"

touch "three40/dramatispersonae/undergraduates/undergraduates_2.ipynb"

touch "three40/dramatispersonae/undergraduates/undergraduates_3.ipynb"

touch "three40/dramatispersonae/undergraduates/undergraduates_4.ipynb"

touch "three40/dramatispersonae/undergraduates/undergraduates_5.ipynb"

touch "three40/dramatispersonae/graduates/graduates_1.ipynb"

touch "three40/dramatispersonae/graduates/graduates_2.ipynb"

touch "three40/dramatispersonae/graduates/graduates_3.ipynb"

touch "three40/dramatispersonae/graduates/graduates_4.ipynb"

touch "three40/dramatispersonae/graduates/graduates_5.ipynb"

touch "three40/dramatispersonae/medical_students/medical_students_1.ipynb"

touch "three40/dramatispersonae/medical_students/medical_students_2.ipynb"

touch "three40/dramatispersonae/medical_students/medical_students_3.ipynb"

touch "three40/dramatispersonae/medical_students/medical_students_4.ipynb"

touch "three40/dramatispersonae/medical_students/medical_students_5.ipynb"

touch "three40/dramatispersonae/residents/residents_1.ipynb"

touch "three40/dramatispersonae/residents/residents_2.ipynb"

touch "three40/dramatispersonae/residents/residents_3.ipynb"

touch "three40/dramatispersonae/residents/residents_4.ipynb"

touch "three40/dramatispersonae/residents/residents_5.ipynb"

touch "three40/dramatispersonae/fellows/fellows_1.ipynb"

touch "three40/dramatispersonae/fellows/fellows_2.ipynb"

touch "three40/dramatispersonae/fellows/fellows_3.ipynb"

touch "three40/dramatispersonae/fellows/fellows_4.ipynb"

touch "three40/dramatispersonae/fellows/fellows_5.ipynb"

touch "three40/dramatispersonae/faculty/faculty_1.ipynb"

touch "three40/dramatispersonae/faculty/faculty_2.ipynb"

touch "three40/dramatispersonae/faculty/faculty_3.ipynb"

touch "three40/dramatispersonae/faculty/faculty_4.ipynb"

touch "three40/dramatispersonae/faculty/faculty_5.ipynb"

touch "three40/dramatispersonae/analysts/analysts_1.ipynb"

touch "three40/dramatispersonae/analysts/analysts_2.ipynb"