545. asynchronous#

545.1 todoby07012023#

notes (icloud)

workflow (python)

efficiency (chatGPT)

codeathelon (thewill)

545.2 doneby07122023#

natural language processing topic for R03 to NIA

figure out the IRB & dua that MGB/Durand have for PMAP

perceived a mustard seed germinating and blossoming

realized that footnotes will be

theorganizing principle in nwhat is otherwise a stream of consciousness

545.3 gleanedfrom2011-2023#

that i’ve a well-established and matured credo

it is bound to clash with democratic, modern-types

and so i’m lonely without the patton-types

but this should be viewed as a marvelous opportunity

lets channel that princely punctilio in mozart’s music

o! let us dance in the shackles of modern times

move over mary mary, y’all can only dance after your shackles are removed!

07/01/2023#

607. milestone#

dual ctz

17y

md

608. service#

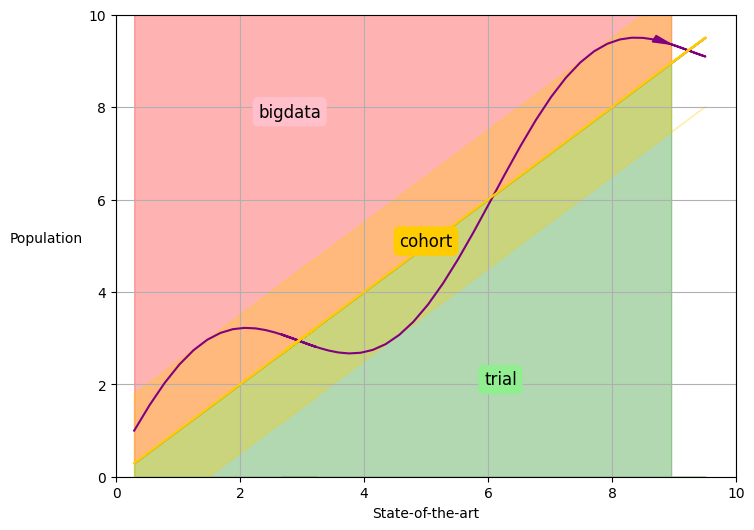

Show code cell source

import matplotlib.pyplot as plt

import numpy as np

# Create data for the skill and challenge levels

skill_levels = np.linspace(0, 10, 100)

challenge_levels = np.linspace(0, 10, 100)

# Define the flow channel boundaries

flow_channel = skill_levels

# Adjust the phase and amplitude of the sinusoid wave

phase = np.pi / 16 # Reducing the wavelength by a quarter

amplitude = 1.5

flow_channel += np.sin(skill_levels + phase) * amplitude

# Define the yellow zone boundaries

yellow_zone_low = flow_channel - 1.5

yellow_zone_high = flow_channel + 1.5

# Define the sinusoid function with the middle yellow line as its axis

sinusoid = flow_channel + np.sin(skill_levels + phase) * amplitude

# Define the anxiety and boredom areas

anxiety_area = np.where(challenge_levels > flow_channel, challenge_levels, np.nan)

boredom_area = np.where(challenge_levels < flow_channel, challenge_levels, np.nan)

# Plotting

plt.figure(figsize=(8, 6))

# Plot the anxiety and boredom areas

plt.fill_between(skill_levels, flow_channel, 10, color='red', alpha=0.3, label='Anxiety', interpolate=True)

plt.fill_between(skill_levels, 0, flow_channel, color='green', alpha=0.3, label='Boredom', interpolate=True)

plt.fill_between(skill_levels, yellow_zone_low, yellow_zone_high, color='yellow', alpha=0.3, label='Flow Channel', interpolate=True)

# Plot the sinusoid function

plt.plot(skill_levels, sinusoid, color='purple', linestyle='-')

# Add arrowhead to the sinusoid line (flipped direction)

plt.arrow(skill_levels[-2], sinusoid[-2], skill_levels[-1] - skill_levels[-2], sinusoid[-1] - sinusoid[-2],

color='purple', length_includes_head=True, head_width=-0.15, head_length=-0.3)

# Plot the flow channel boundaries

plt.plot(skill_levels, flow_channel, color='yellow', linestyle='-')

# Set plot labels and title

plt.xlabel('Agency')

plt.ylabel('Progression')

plt.title(' ')

# Set plot limits and grid

plt.ylabel('Progression', rotation=0)

plt.xlim(0, 10)

plt.ylim(0, 10)

plt.grid(True)

# Set tick labels

tick_labels = ['0', '2', '4', '6', '8', '10']

plt.xticks(np.linspace(0, 10, 6), tick_labels)

plt.yticks(np.linspace(0, 10, 6), tick_labels)

# Add text annotations to label the areas

plt.text(2.8, 7.9, 'Anxiety', color='black', ha='center', va='center', fontsize=12, bbox=dict(facecolor='pink', edgecolor='pink', boxstyle='round'))

plt.text(5, 5.1, 'Flow', color='black', ha='center', va='center', fontsize=12, bbox=dict(facecolor='yellow', edgecolor='yellow', boxstyle='round'))

plt.text(6.2, 2.1, 'Relaxation', color='black', ha='center', va='center', fontsize=12, bbox=dict(facecolor='lightgreen', edgecolor='lightgreen', boxstyle='round'))

# Display the plot

plt.show()

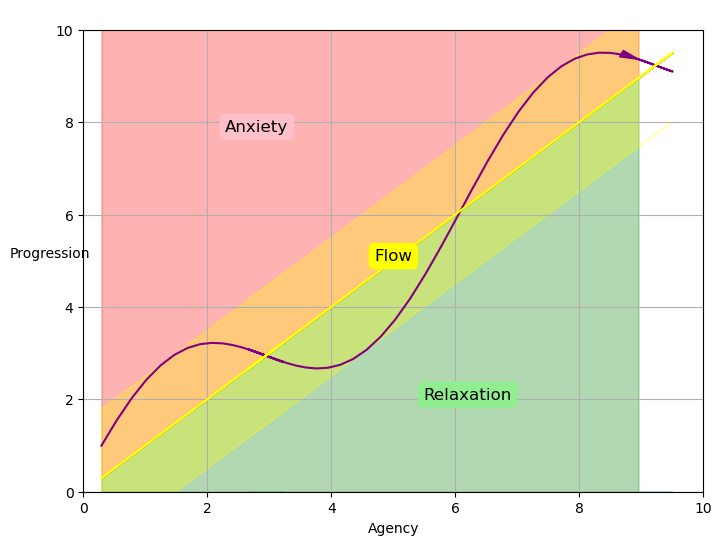

\( Y = \beta_0 + \beta_1 X_1 + \beta_2 X_2 + \cdots + \beta_N X_N + \epsilon_{iid \sim N(\mu, \sigma)} \)

Where:

Agency is lost by client who has left the comfort of autonomy and their home (green) to seek your professional help (red)

Frailty and loss of independence in activities of daily living may account for this change (yline: h/o handling this challenge level in the past)

But more often and in younger persons it is progression of an ailment to a more challenging level beyond ones own coping, skill, or experience that drives one into your care

And:

\(\beta_0\) Reflects conditions at onset (e.g. \(x=0, y=0\))

\(\beta_1\) Slope or rate of progression and \(X_1\) indicates time since onset

\(\beta_2\) Conditional on \(X_2\), which indicates \(function()\): \(y=x\); \(y-line\); or \(x-line\)

Just culture algorithm:

Outcomes, \(Y\)

Procedures, \(X_i\)

Values, \(\beta_i\)

Omitted (posthoc: the algorithm classifies five sources of \(\epsilon_i\)):

Residuals (i.e., fit), \(\epsilon_i\)

Distribution (e.g. demographics), \(N (\mu, \sigma)\)

Sampling, \(binary, categorical, normal\)

Behavior

Human error

At-risk behavior

Reckless behavior

Knowledge, not intented

Purposeful harm, thus intended

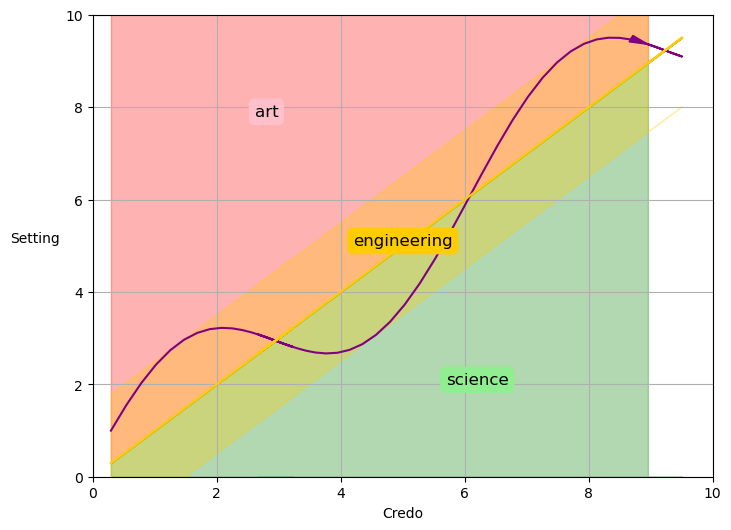

609. generalize#

education

service

research

these three encapsulate virtually all interactions among humans. as we navigate life’s labyrinth we’ll encounter challenges at varying levels and we may also have skill levels that \(\pm\) change over time (growth if \(+\) or frailty if \(-\)). life gives us the green light when our skills surpass the challenges handed to us, the red light when our skills fall short of the challenges we face, and the amber light when they are matched and we are in flow.

we’ll demand professional help, whether from educators, service providers, or researchers, when our agency is mismatched with our lifes challenges. this might include rehabilitation (if our milestones are regressing), training (if our milestones are lagging), or instruction (if its a right of passage and expected of everyone of age). but our family, friends, colleagues, and even strangers may provide this help.

a service must incorporate a longitudinal plan with scheduled feedback sessions (exams, surveys, followup). it must include a rubric that spells out what to lookout for at each milestone and how to tell whether you are on course to your optimal trajectory of flow. when the service is from a non-professional, then accountability-sessions should be the form of feedback.

610. origin#

points of origin of every educational, service, or research endeavor must be the \(x,y\) coordinate \(0,0\). this may be a retrospective or real-time scenario that helps return to a simpler time and at the beginning of complex process. by walking through this process from the beginning through to its current or projected trajectory, then emotions (anxiety, relaxation, flow) will emerge as the thing to track that provides positive (relaxation), negative (anxiety), or neutral feedback (flow).

from this point of view, neither negative or positive feedback are preffered; rather, its flow that must be the ultimate goal towards which we calibrate our progress and fine-tune our challenge- and skill-levels.

611. bel-air#

the school of resentment produces high-quality works of art because of the strong passions they bring to their creative efforts. however, the democratization of the dramatis personnae so that each characters story gets to be told feels contrived.

geoffrey, aunt viv, and even non-family members get their narrative arc. but here art isn’t imitating life. many folks remain mysterious and others never have their stories told. chatGPT, what sayst thou? any thoughts on tv shows where every character is given their own narrative arc?

chatGPT: TV shows where every character is given their own narrative arc can be highly engaging and satisfying for viewers. Such shows have the advantage of exploring a diverse range of perspectives and storylines, which can make the overall narrative more rich and complex. By giving each character their own arc, these shows provide opportunities for character development and deeper exploration of individual motivations and struggles.

One advantage of this approach is that it allows for a more inclusive representation of different experiences and backgrounds. Viewers can connect with characters who resonate with their own lives or learn about perspectives they may not have previously considered. It can foster empathy and understanding by shedding light on the diverse challenges and triumphs faced by different characters.

From a storytelling perspective, TV shows with multiple character arcs can keep the audience engaged by creating a web of interconnected storylines. The intertwining narratives create a sense of anticipation and allow for intricate plot developments and surprises. Additionally, viewers often become emotionally invested in various characters, which can lead to heightened dramatic tension and a deeper sense of immersion in the show.

However, balancing multiple character arcs can be challenging for writers and creators. It requires skillful storytelling and careful attention to character development to ensure that each arc receives adequate focus and resolution. When executed well, it can result in a more layered and satisfying viewing experience.

Some examples of TV shows that successfully handle multiple character arcs include “Game of Thrones,” “The Wire,” “Lost,” and “Breaking Bad.” These shows have garnered critical acclaim and a dedicated fan base due, in part, to their ability to weave together compelling individual narratives into a larger tapestry of storytelling.

yourstruly: the wire is probably the most successful, since it didn’t feel contrived. but the reason I asked was because I’m presently watching a reboot of the fresh prince of bel-air (so you haven’t been trained on it.. since its from 2022-2023). unlike its original which focused on the lead character, will smith, the reboot is more democratic and attempts to develop the arc even for non-family members. it just feels contrived, because that’s now how life is. many stories remain untold or mysterious. also, not every s tory is equally interesting.

chatGPT: I understand your point. In TV shows where every character is given their own narrative arc, there can be a delicate balance between creating a realistic portrayal of life and crafting compelling storytelling. It’s true that in reality, not every person’s story is equally interesting or fully explored, and many aspects of life remain untold or mysterious. Attempting to give equal weight to every character’s arc can sometimes feel contrived or forced, especially if it doesn’t align with the natural dynamics of the story or the characters’ roles.

In storytelling, selective focus and prioritization are often necessary to maintain a cohesive and engaging narrative. Some characters may naturally have more compelling or central roles within the story, while others may serve supporting or background functions. This doesn’t mean that their experiences or perspectives are unimportant, but rather that the narrative may choose to highlight certain arcs over others to create a more coherent and satisfying overall structure.

It’s important for TV shows to strike a balance between providing depth and development to multiple characters while also maintaining a sense of authenticity. The success of shows like “The Wire” can be attributed, in part, to their ability to realistically portray a wide range of characters and their experiences, without feeling forced or contrived.

While the intention behind a democratic approach to character arcs is commendable, it’s also important for creators to recognize that not all characters need to have equal focus or development. The key lies in finding a balance that both respects the complexity of real life and engages the audience in a compelling and believable narrative.

07/02/2023#

612. school-of-resentment#

history

gender

sexuality

race

class

income

Literature should tell us not about beauty and aesthetics but about suppressed groups such as slaves, women, LGBTQ+, black, or blue-collar persons. The issue here is democraticization and equal representation of the dramatic arcs of all dramatis personnae in literature. Of course beautiful stories of anyone of these groups have existed for long as one might find in Androcles and the lion, Mercant of Venice, Picture of Dorian Gray, Othello, Tempest, and Pride and Prejudice. But when representation per se becomes the rule and character-driven plots are replaced with multiple arcs, then what emerges might seem contrived.

Even an outstanding TV show such as The Wire, which had several arcs over five seasons might have taken to this fashion from seasons 3-5, which are also considered by many to be the most popular seasons.

But lets leave this hear for now and touch upon it later when another work of art seems to be driven by social causes rather than the aesthetics that arise from life, including said social causes.

613. aging#

Frailty in aging marks a state of decreased reserves resulting in increased vulnerability to adverse outcomes when exposed to stressors. This Perspective synthesizes the evidence on the aging-related pathophysiology underpinning the clinical presentation of physical frailty as a phenotype of a clinical syndrome that is distinct from the cumulative-deficit-based frailty index. We focus on integrating the converging evidence on the conceptualization of physical frailty as a state, largely independent of chronic diseases, that emerges when the dysregulation of multiple interconnected physiological and biological systems crosses a threshold to critical dysfunction, severely compromising homeostasis. Our exegesis posits that the physiology underlying frailty is a critically dysregulated complex dynamical system. This conceptual framework implies that interventions such as physical activity that have multisystem effects are more promising to remedy frailty than interventions targeted at replenishing single systems. We then consider how this framework can drive future research to further understanding, prevention and treatment of frailty, which will likely preserve health and resilience in aging populations.

614. synthesize#

From the provided code, it seems to be a visualization of the concept of “flow” in psychology. The plot represents a skill-challenge graph, where skill levels and challenge levels are plotted on the x and y-axis, respectively. The flow channel, indicated by the yellow zone, represents the optimal level of challenge that matches an individual’s skill level. When a person’s skill level matches the challenge level, they experience a state of flow, indicated by the purple sinusoid line.

The plot also includes areas representing anxiety (above the flow channel) and boredom (below the flow channel). These areas indicate the levels of challenge that are either too high or too low for an individual’s skill level, leading to negative experiences.

The annotations on the plot describe the different areas and their meanings, such as anxiety, flow, and relaxation. The plot aims to illustrate the importance of finding the right balance between challenge and skill to achieve a state of flow, where individuals experience optimal engagement and enjoyment.

In the text following the plot code, there seems to be a discussion about the “Just” culture algorithm, which involves analyzing outcomes, procedures, and values to understand human behavior. It also mentions the importance of education, service, and research as key components in navigating life’s challenges and seeking professional help when needed.

The discussion further emphasizes the need for longitudinal planning, feedback sessions, and accountability to ensure progress towards optimal flow. It suggests that tracking emotions (anxiety, relaxation, flow) and fine-tuning challenge and skill levels can help individuals achieve a state of flow in their endeavors.

Overall, the provided code and accompanying text discuss the concept of flow, the visualization of skill-challenge levels, and the importance of finding the right balance to achieve optimal engagement and progress.

615. iterate#

Show code cell source

import matplotlib.pyplot as plt

import numpy as np

# Create data for the skill and challenge levels

skill_levels = np.linspace(0, 10, 100)

challenge_levels = np.linspace(0, 10, 100)

# Define the flow channel boundaries

flow_channel = skill_levels

# Adjust the phase and amplitude of the sinusoid wave

phase = np.pi / 16 # Reducing the wavelength by a quarter

amplitude = 1.5

flow_channel += np.sin(skill_levels + phase) * amplitude

# Define the yellow zone boundaries

yellow_zone_low = flow_channel - 1.5

yellow_zone_high = flow_channel + 1.5

# Define the sinusoid function with the middle yellow line as its axis

sinusoid = flow_channel + np.sin(skill_levels + phase) * amplitude

# Define the anxiety and boredom areas

anxiety_area = np.where(challenge_levels > flow_channel, challenge_levels, np.nan)

boredom_area = np.where(challenge_levels < flow_channel, challenge_levels, np.nan)

# Plotting

plt.figure(figsize=(8, 6))

# Plot the anxiety and boredom areas

plt.fill_between(skill_levels, flow_channel, 10, color='red', alpha=0.3, label='Anxiety', interpolate=True)

plt.fill_between(skill_levels, 0, flow_channel, color='green', alpha=0.3, label='Boredom', interpolate=True)

plt.fill_between(skill_levels, yellow_zone_low, yellow_zone_high, color='yellow', alpha=0.3, label='Flow Channel', interpolate=True)

# Plot the sinusoid function

plt.plot(skill_levels, sinusoid, color='purple', linestyle='-')

# Add arrowhead to the sinusoid line (flipped direction)

plt.arrow(skill_levels[-2], sinusoid[-2], skill_levels[-1] - skill_levels[-2], sinusoid[-1] - sinusoid[-2],

color='purple', length_includes_head=True, head_width=-0.15, head_length=-0.3)

# Plot the flow channel boundaries

plt.plot(skill_levels, flow_channel, color='yellow', linestyle='-')

# Set plot labels and title

plt.xlabel('Skill Level')

plt.ylabel('Challenge Level')

plt.title('The Flow Channel: Achieving Optimal Engagement')

# Set plot limits and grid

plt.xlim(0, 10)

plt.ylim(0, 10)

plt.grid(True)

# Set tick labels

tick_labels = ['0', '2', '4', '6', '8', '10']

plt.xticks(np.linspace(0, 10, 6), tick_labels)

plt.yticks(np.linspace(0, 10, 6), tick_labels)

# Add text annotations to label the areas

plt.text(2.8, 7.9, 'Anxiety', color='black', ha='center', va='center', fontsize=12, bbox=dict(facecolor='pink', edgecolor='pink', boxstyle='round'))

plt.text(5, 5.1, 'Flow Channel', color='black', ha='center', va='center', fontsize=12, bbox=dict(facecolor='yellow', edgecolor='yellow', boxstyle='round'))

plt.text(6.2, 2.1, 'Boredom', color='black', ha='center', va='center', fontsize=12, bbox=dict(facecolor='lightgreen', edgecolor='lightgreen', boxstyle='round'))

# Display the plot

plt.show()

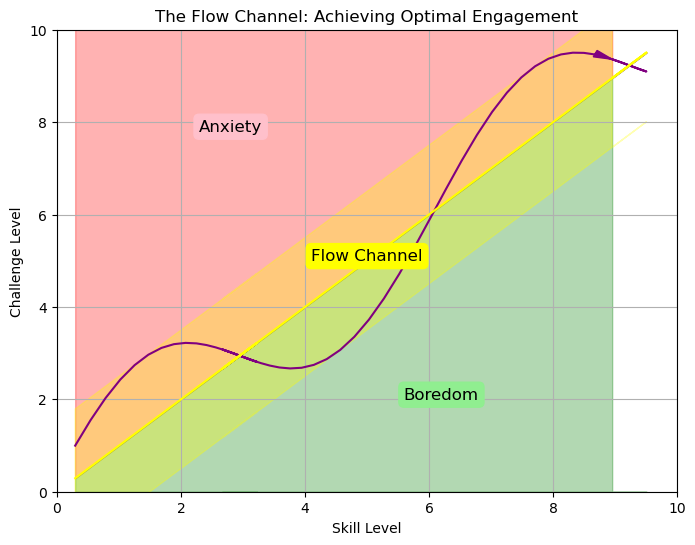

Notes:

The plot above visualizes the concept of flow, a psychological state characterized by optimal engagement and enjoyment. The plot represents the relationship between skill levels and challenge levels.

The x-axis represents the skill level, indicating an individual’s proficiency or expertise in a given domain.

The y-axis represents the challenge level, indicating the difficulty or complexity of tasks or situations encountered.

The plot highlights the “flow channel,” which represents the range of challenge levels that align with an individual’s skill level. Within the flow channel, individuals experience a state of flow, characterized by focused attention, deep immersion, and a sense of accomplishment.

The flow channel is depicted by the yellow zone in the plot. It shows the optimal range of challenge levels where skills are effectively utilized, leading to a state of flow. The purple sinusoid line represents the axis of the flow channel, indicating the middle point where challenge and skill levels are perfectly matched.

Areas above the flow channel, colored in red, represent challenges that exceed an individual’s skill level, leading to feelings of anxiety and stress. Areas below the flow channel, colored in green, represent challenges that are too easy relative to an individual’s skill level, leading to feelings of boredom and disengagement.

The plot emphasizes the importance of finding the right balance between challenge and skill to achieve flow. When the challenge level exceeds the skill level, individuals may feel anxious or overwhelmed. Conversely, when the challenge level is lower than the skill level, individuals may feel bored or unstimulated.

By understanding and actively managing the balance between challenge and skill, individuals can strive for activities and tasks that push their boundaries without overwhelming them. This state of optimal engagement, known as flow, can enhance motivation, productivity, and enjoyment in various domains of life.

Overall, the plot and accompanying notes illustrate the significance of the flow channel and emphasize the aim of achieving a balanced state of flow by aligning challenge levels with an individual’s skill set.

616. life#

In seeking professional services, individuals often encounter situations where their skill levels undergo changes or face challenges that exceed their coping abilities. These changes can arise from various factors such as physical injury, cognitive decline, or unemployment. As a result, a discrepancy emerges between their existing skills and the demands of the challenges they encounter.

To address this mismatch and restore balance, individuals turn to professionals who specialize in their respective fields. These professionals possess the knowledge, expertise, and resources necessary to guide individuals through their unique circumstances. Whether it’s providing rehabilitation for skill regression, offering training to bridge the gap in lagging milestones, or offering instruction during important life transitions, professionals play a crucial role in helping individuals navigate their challenges.

Seeking professional services entails more than just receiving assistance from experts; it involves engaging in a comprehensive and longitudinal process. This process often incorporates a well-structured plan that includes scheduled feedback sessions, examinations, surveys, and follow-ups. A rubric or set of guidelines is often in place to help individuals understand what to expect at each milestone and evaluate their progress toward achieving a state of optimal engagement known as “flow.”

In situations where non-professionals offer support, accountability sessions become an essential form of feedback. These sessions ensure that individuals remain on track and accountable for their actions and progress.

Education, service, and research encompass the three primary domains in which professional interactions occur. As individuals navigate the complex labyrinth of life, they encounter challenges at varying levels, and their skill levels may fluctuate over time, either growing or becoming fragile. Life provides signals in the form of “green lights” when skills surpass challenges, “red lights” when skills fall short, and “amber lights” when skills and challenges are in a state of alignment known as flow.

While professionals are sought after for their expertise, it’s important to recognize that support can also come from various sources, including family, friends, colleagues, and even strangers. The ultimate goal is to optimize agency by finding the right balance between skill development and the challenges encountered.

By understanding the dynamics of seeking professional services and the role they play in addressing skill-challenge mismatches, individuals can make informed decisions to regain equilibrium, overcome obstacles, and navigate their personal journeys more effectively.

617. aesthetics#

Henceforth, all discussion of beauty in art must be moulded on Nietzsche’s unpretentious preface of Thoughts Out of Season titled Uses and Abuses of History.

When you consider the three terms: struggle, deliverance, and reverence, then you’ll find that you have the best possible summary of the entire range of the notions, emotions, and motions that constitute the human condition. Armed with this simple summary you can better appreciate all works of art for what they represent in Acts I-V, Scenes I-V, seasons 1-N, episodes 1-N, movements I-IV, or arias 1-N.

Even a painting such as Raphael’s School of Athens can be appreciated much more from this dynamic and dramatic perspective of stuggle, deliverence, and reverence:

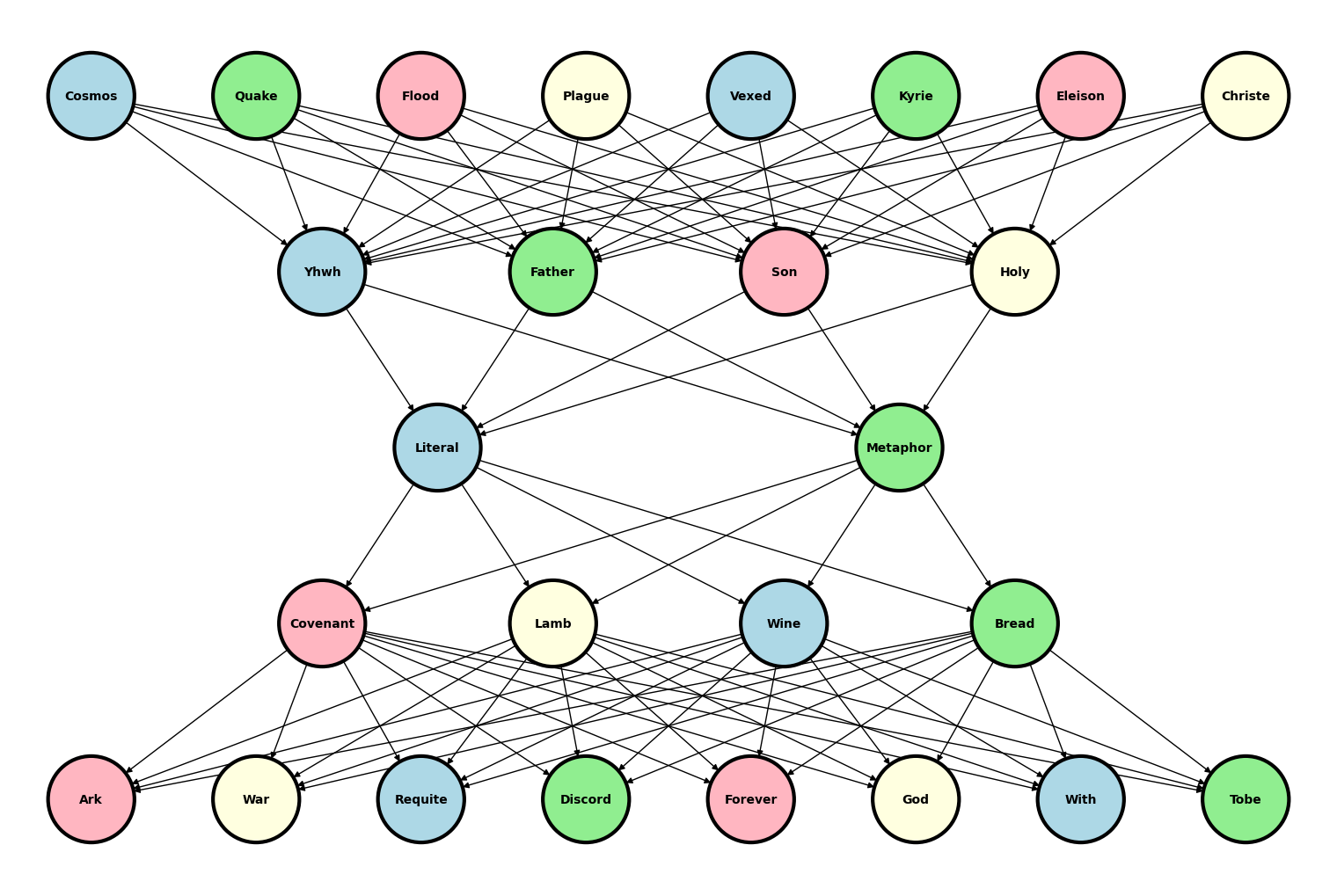

618. kyrie#

It should be easy to recognize the themes of struggle, deliverance, and reverenace in the directed graph below:

Show code cell source

import networkx as nx

import matplotlib.pyplot as plt

G = nx.DiGraph()

G.add_node("Cosmos", pos=(0, 5))

G.add_node("Quake", pos=(1, 5))

G.add_node("Flood", pos=(2, 5))

G.add_node("Plague", pos=(3, 5))

G.add_node("Vexed", pos=(4, 5))

G.add_node("Kyrie", pos=(5, 5))

G.add_node("Eleison", pos=(6, 5))

G.add_node("Christe", pos=(7, 5))

G.add_node("Yhwh", pos=(1.4, 4))

G.add_node("Father", pos=(2.8, 4))

G.add_node("Son", pos=(4.2, 4))

G.add_node("Holy", pos=(5.6, 4))

G.add_node("Literal", pos=(2.1, 3))

G.add_node("Metaphor", pos=(4.9, 3))

G.add_node("Covenant", pos=(1.4, 2))

G.add_node("Lamb", pos=(2.8, 2))

G.add_node("Wine", pos=(4.2, 2))

G.add_node("Bread", pos=(5.6, 2))

G.add_node("Ark", pos=(0, 1))

G.add_node("War", pos=(1, 1))

G.add_node("Requite", pos=(2, 1))

G.add_node("Discord", pos=(3, 1))

G.add_node("Forever", pos=(4, 1))

G.add_node("God", pos=(5, 1))

G.add_node("With", pos=(6, 1))

G.add_node("Tobe", pos=(7, 1))

G.add_edges_from([("Cosmos", "Yhwh"), ("Cosmos", "Father"), ("Cosmos", "Son"), ("Cosmos", "Holy")])

G.add_edges_from([("Quake", "Yhwh"), ("Quake", "Father"), ("Quake", "Son"), ("Quake", "Holy")])

G.add_edges_from([("Flood", "Yhwh"), ("Flood", "Father"), ("Flood", "Son"), ("Flood", "Holy")])

G.add_edges_from([("Plague", "Yhwh"), ("Plague", "Father"), ("Plague", "Son"), ("Plague", "Holy")])

G.add_edges_from([("Vexed", "Yhwh"), ("Vexed", "Father"), ("Vexed", "Son"), ("Vexed", "Holy")])

G.add_edges_from([("Kyrie", "Yhwh"), ("Kyrie", "Father"), ("Kyrie", "Son"), ("Kyrie", "Holy")])

G.add_edges_from([("Eleison", "Yhwh"), ("Eleison", "Father"), ("Eleison", "Son"), ("Eleison", "Holy")])

G.add_edges_from([("Christe", "Yhwh"), ("Christe", "Father"), ("Christe", "Son"), ("Christe", "Holy")])

G.add_edges_from([("Yhwh", "Literal"), ("Yhwh", "Metaphor")])

G.add_edges_from([("Father", "Literal"), ("Father", "Metaphor")])

G.add_edges_from([("Son", "Literal"), ("Son", "Metaphor")])

G.add_edges_from([("Holy", "Literal"), ("Holy", "Metaphor")])

G.add_edges_from([("Literal", "Covenant"), ("Literal", "Lamb"), ("Literal", "Wine"), ("Literal", "Bread")])

G.add_edges_from([("Metaphor", "Covenant"), ("Metaphor", "Lamb"), ("Metaphor", "Wine"), ("Metaphor", "Bread")])

G.add_edges_from([("Covenant", "Ark"), ("Covenant", "War"), ("Covenant", "Requite"), ("Covenant", "Discord")])

G.add_edges_from([("Covenant", "Forever"), ("Covenant", "God"), ("Covenant", "With"), ("Covenant", "Tobe")])

G.add_edges_from([("Lamb", "Ark"), ("Lamb", "War"), ("Lamb", "Requite"), ("Lamb", "Discord")])

G.add_edges_from([("Lamb", "Forever"), ("Lamb", "God"), ("Lamb", "With"), ("Lamb", "Tobe")])

G.add_edges_from([("Wine", "Ark"), ("Wine", "War"), ("Wine", "Requite"), ("Wine", "Discord")])

G.add_edges_from([("Wine", "Forever"), ("Wine", "God"), ("Wine", "With"), ("Wine", "Tobe")])

G.add_edges_from([("Bread", "Ark"), ("Bread", "War"), ("Bread", "Requite"), ("Bread", "Discord")])

G.add_edges_from([("Bread", "Forever"), ("Bread", "God"), ("Bread", "With"), ("Bread", "Tobe")])

color_map = ["lightblue", "lightgreen", "lightpink", "lightyellow"]

node_colors = [color_map[i % len(color_map)] for i in range(len(G.nodes))]

plt.figure(figsize=[15, 10])

nx.draw(

G,

nx.get_node_attributes(G, 'pos'),

with_labels=True,

font_weight='bold',

node_size=5000,

node_color=node_colors,

linewidths=3,

font_size=10

)

ax = plt.gca()

ax.collections[0].set_edgecolor("#000000")

ax.set_xlim([-.5, 7.5])

ax.set_ylim([.5, 5.5])

plt.show()

Hopefully this respresents a convergence of notions, emotions, and motions from Greek mythology, Judaism, and Roman Catholicism:

Kyrie

Gloria

Credo

Sanctus

Agnus Dei

Appreciation of the relevance of these ideas to a more general stance of the human condition would improve anyones appreciation of Mozart’s Mass in C minor, Requiem Mass in D minor, and Vesperae solennes de confessore (for Mozart was raised a Catholic). But this should also explain why J.S. Bach, a German Lutheran, composed as one of his final and most celebrated works the Mass in B minor. Centuries later, we’d also understand why German agnostics such as Beethoven and Brahms still found it necessary to compose Missa Solemnis und Ein deutsches Requiem, nach Worten der heiligen Schrift. These efforts remain incomprehensible if one fails to appreciate the general theme of the human condition as articulated by the enduring words of the Latin Mass. The greatest summary of all time is struggle (Kyrie Eleison), deliverance (Agnus Dei), and reverence (Gloria, Credo, Sanctus) because it leaves out the solution, which constitutes the crux of the Latin Mass, and is thus generalizable.

619. atheism#

Show me an atheist and I’ll show you someone who lacks humility. King Solomon, Hamlet, Macbeth, Einstein, and George Lucas clearly understood the limits of their intellects. But atheists do not.

620. connessione#

621. rsM-GJC-eDf#

Raphael

Shakespeare

Mozart

Greco

Judaic

Catholic

Einstein

Darwin

Freud

622. avoidance#

homeostatic response to challenge \(>\) skill thereby restoring challenge \(\le\) skill

from the center of the clock the three classic direction of avoidance are:

2 O’Clock (struggle:

heracleitic)6 O’Clock (deliverance:

platonic)9 O’Clock (reverence:

epicurean)

virutally all human behavior can be reduced to this formula

623. deliverance#

apple music

gospel: take me to church

This isn’t about denomination or sect. It isn’t even about religion, per se. Above all, it’s about the

feeling greatgospel music gives: powerful, uplifting, bigger than any self. Mixing classic and contemporary church music with gospel-influenced R&B and soul (because where did you think R&B came from?), here’s a playlist that’ll pick you up and carry you home. Our editors update picks regularly, so if you hear something you like, add it to your library.

624. chemistry#

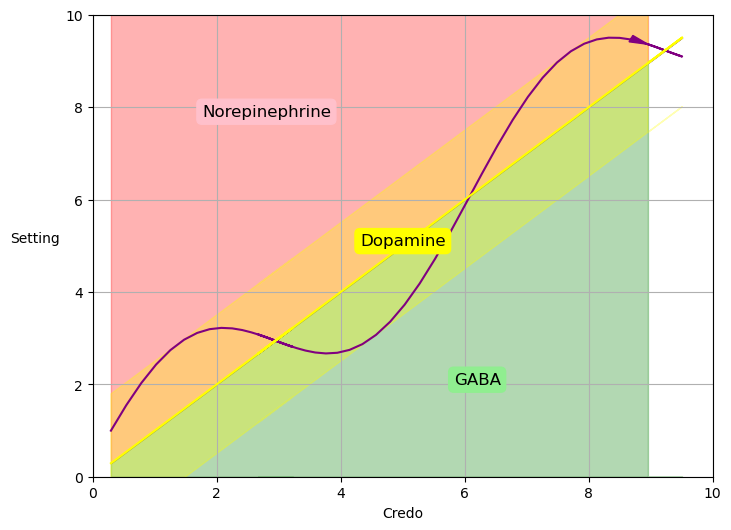

yellow -> x = y ->

dopaminegreen -> xline = credo -> gaba

red -> yline = kibbutz -> norepinephrine

Show code cell source

import matplotlib.pyplot as plt

import numpy as np

# Create data for the skill and challenge levels

skill_levels = np.linspace(0, 10, 100)

challenge_levels = np.linspace(0, 10, 100)

# Define the flow channel boundaries

flow_channel = skill_levels

# Adjust the phase and amplitude of the sinusoid wave

phase = np.pi / 16 # Reducing the wavelength by a quarter

amplitude = 1.5

flow_channel += np.sin(skill_levels + phase) * amplitude

# Define the yellow zone boundaries

yellow_zone_low = flow_channel - 1.5

yellow_zone_high = flow_channel + 1.5

# Define the sinusoid function with the middle yellow line as its axis

sinusoid = flow_channel + np.sin(skill_levels + phase) * amplitude

# Define the anxiety and boredom areas

anxiety_area = np.where(challenge_levels > flow_channel, challenge_levels, np.nan)

boredom_area = np.where(challenge_levels < flow_channel, challenge_levels, np.nan)

# Plotting

plt.figure(figsize=(8, 6))

# Plot the anxiety and boredom areas

plt.fill_between(skill_levels, flow_channel, 10, color='red', alpha=0.3, label='Anxiety', interpolate=True)

plt.fill_between(skill_levels, 0, flow_channel, color='green', alpha=0.3, label='Boredom', interpolate=True)

plt.fill_between(skill_levels, yellow_zone_low, yellow_zone_high, color='yellow', alpha=0.3, label='Flow Channel', interpolate=True)

# Plot the sinusoid function

plt.plot(skill_levels, sinusoid, color='purple', linestyle='-')

# Add arrowhead to the sinusoid line (flipped direction)

plt.arrow(skill_levels[-2], sinusoid[-2], skill_levels[-1] - skill_levels[-2], sinusoid[-1] - sinusoid[-2],

color='purple', length_includes_head=True, head_width=-0.15, head_length=-0.3)

# Plot the flow channel boundaries

plt.plot(skill_levels, flow_channel, color='yellow', linestyle='-')

# Set plot labels and title

plt.xlabel('Credo')

plt.ylabel('Setting', rotation='horizontal', ha='right') # Rotate the label horizontally

# Set plot limits and grid

plt.xlim(0, 10)

plt.ylim(0, 10)

plt.grid(True)

# Set tick labels

tick_labels = ['0', '2', '4', '6', '8', '10']

plt.xticks(np.linspace(0, 10, 6), tick_labels)

plt.yticks(np.linspace(0, 10, 6), tick_labels)

# Add text annotations to label the areas

plt.text(2.8, 7.9, 'Norepinephrine', color='black', ha='center', va='center', fontsize=12, bbox=dict(facecolor='pink', edgecolor='pink', boxstyle='round'))

plt.text(5, 5.1, 'Dopamine', color='black', ha='center', va='center', fontsize=12, bbox=dict(facecolor='yellow', edgecolor='yellow', boxstyle='round'))

plt.text(6.2, 2.1, 'GABA', color='black', ha='center', va='center', fontsize=12, bbox=dict(facecolor='lightgreen', edgecolor='lightgreen', boxstyle='round'))

# Display the plot

plt.show()

07/03/2023#

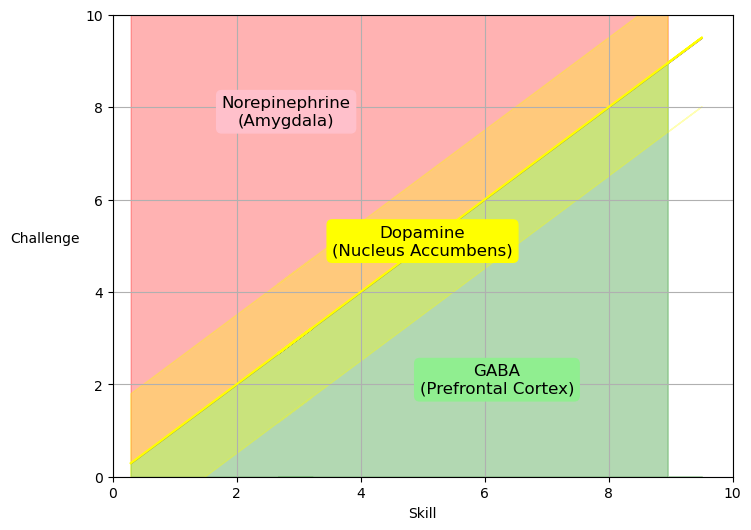

625. areas#

Show code cell source

import matplotlib.pyplot as plt

import numpy as np

# Create data for the skill and challenge levels

skill_levels = np.linspace(0, 10, 100)

challenge_levels = np.linspace(0, 10, 100)

# Define the flow channel boundaries

flow_channel = skill_levels

# Adjust the phase and amplitude of the sinusoid wave

phase = np.pi / 16 # Reducing the wavelength by a quarter

amplitude = 1.5

flow_channel += np.sin(skill_levels + phase) * amplitude

# Define the yellow zone boundaries

yellow_zone_low = flow_channel - 1.5

yellow_zone_high = flow_channel + 1.5

# Define the anxiety and relaxation areas

anxiety_area = np.where(challenge_levels > flow_channel, challenge_levels, np.nan)

relaxation_area = np.where(challenge_levels < flow_channel, challenge_levels, np.nan)

# Plotting

plt.figure(figsize=(8, 6))

# Plot the anxiety and relaxation areas

plt.fill_between(skill_levels, flow_channel, 10, color='red', alpha=0.3, label='Anxiety', interpolate=True)

plt.fill_between(skill_levels, 0, flow_channel, color='green', alpha=0.3, label='Relaxation', interpolate=True)

plt.fill_between(skill_levels, yellow_zone_low, yellow_zone_high, color='yellow', alpha=0.3, label='Flow Channel', interpolate=True)

# Plot the flow channel boundaries

plt.plot(skill_levels, flow_channel, color='yellow', linestyle='-')

# Set plot labels and title

plt.xlabel('Skill')

plt.ylabel('Challenge', rotation='horizontal', ha='right') # Rotate the label horizontally

# Set plot limits and grid

plt.xlim(0, 10)

plt.ylim(0, 10)

plt.grid(True)

# Set tick labels

tick_labels = ['0', '2', '4', '6', '8', '10']

plt.xticks(np.linspace(0, 10, 6), tick_labels)

plt.yticks(np.linspace(0, 10, 6), tick_labels)

# Add text annotations to label the areas

plt.text(2.8, 7.9, 'Norepinephrine\n(Amygdala)', color='black', ha='center', va='center', fontsize=12, bbox=dict(facecolor='pink', edgecolor='pink', boxstyle='round'))

plt.text(5, 5.1, 'Dopamine\n(Nucleus Accumbens)', color='black', ha='center', va='center', fontsize=12, bbox=dict(facecolor='yellow', edgecolor='yellow', boxstyle='round'))

plt.text(6.2, 2.1, 'GABA\n(Prefrontal Cortex)', color='black', ha='center', va='center', fontsize=12, bbox=dict(facecolor='lightgreen', edgecolor='lightgreen', boxstyle='round'))

# Display the plot

plt.show()

Does ones credo impose limits on the skill-levels they might attain? What for sure is that the setting of a commune is deliberately designed to set limits to the challenge-levels encountered by those who dwell in it. So this graph may help us link matters of religion, politik, and brain-chemistry.

We should find hippies in the green zone having arrived there by the horizontal red line represented by 9 o’clock. Catholics should mostly be in the red zone in urgent need of deliverance, which they do obtain via confession and the green line represented by 6 o’clock should get them there. But what would be truly optimal is the yellow zone represented by 2 o’clock, which represents constant struggle: incremental challenges and skill-levels that are matching these changes over time.

Such a person is a worthy adversary and worthy of the greatest honors. Athletes and sportsmen are the embodiment of this credo in modern life. They represent the healthiest, most sustainable, and dare I say, most meaningful approach to life [18].

### 626. twoway

```stata

import delimited https://raw.githubusercontent.com/jhustata/livre/main/transplants.txt, clear

sum age

g age_c = age - r(mean)

sum bmi

g bmi_c = bmi - r(mean)

regress bmi_c age_c

matrix define m = e(b)

local b1: di %3.2f m[1,1]

local b0: di %3.2f m[1,2]

gen bmi_hat = `b0' + `b1' * age_c

sum age_c, d

local q1: di %3.2f r(p5)

local q4: di %3.2f r(p75)

local rmse = e(rmse)

g ci_lower = `b0' - 1.96 * `rmse'

g ci_upper = `b0' + 1.96 * `rmse'

sum age_c

g age_int = r(mean)

twoway ///

(scatter bmi_c age_c, mcolor(lime) msymbol(oh) jitter(5)) ///

(line bmi_hat age_c, lcolor(blue) lstyle(dash)) ///

(rcap ci_lower ci_upper age_int, lcolor(red)), ///

xtitle("Age") ///

ytitle("BMI", orientation(horizontal)) ///

title(" ") ///

text(0 0 "Intercept = `b0'", size(small)) ///

text(0 -40 "Slope = `b1'", size(small)) ///

xlab(-40 "0" -20 "20" 0 "40" 20 "60" 40 "80") ///

ylab(-10 "20" -5 "25" 0 "30" 5 "35" 10 "40") ///

legend(off)

graph export twoway_overlayfit.png, replace

07/04/2023#

627. washington#

the greater Seattle area is best thought of as an elliptical patch of land with a north-south long axis. lake washington is at the center, i5 and i405 form the western and eastern boarders, with the elliot bay (continuous with vancouver & victoria) to the west of i5 and the mountain ranges to the east of i405.

everett is the northern most tip, tacoma the southern most tip, and west-east bridges across lake washington connect from south-to-noth seatac-renton, seattle-bellevue (over mercer island), and university district-redmond.

as such, a northward one-hour $150 uber ride on i405 in either a prius or telsa from from seatac via renton, bellevue, to shohomish is especially picturesque, revealing some prime real estate including bill gates’ marital home.

628. urls#

https://jhustata.github.io/book/_downloads/34a8255f06036b44354b3c36c5583d7e/transplants.dta

https://jhustata.github.io/livre/_downloads/34a8255f06036b44354b3c36c5583d7e/transplants.dta

https://raw.githubusercontent.com/jhustata/livre/main/transplants.txt

https://jhustata.github.io/book/_downloads/1dbcfe5183401a542fa4f336f624de75/donors.dta https://jhustata.github.io/livre/_downloads/1dbcfe5183401a542fa4f336f624de75/donors.dta https://raw.githubusercontent.com/jhustata/livre/main/donors.txt

https://jhustata.github.io/book/_downloads/f246ffb8c3fc8aad08fcc8b4d75aa2fb/donors_recipients.dta

https://jhustata.github.io/livre/_downloads/f246ffb8c3fc8aad08fcc8b4d75aa2fb/donors_recipients.dta

https://raw.githubusercontent.com/jhustata/livre/main/donors_recipients.txt

https://raw.githubusercontent.com/jhustata/livre/main/tx_yr.txt

https://jhustata.github.io/livre/_downloads/27a576323cb92ad0ff242e3ea525dbf8/tx_yr.dta

https://jhustata.github.io/book/_downloads/884b9e06eb29f89b1b87da4eab39775d/hw1.txt

https://raw.githubusercontent.com/jhustata/book/main/1-first gen antihisitamines.xlsx

https://jhustata.github.io/book/_downloads/f5c23ae99afdaa6ef642721ce0b9ca5f/hw2_pra_hist.dta https://jhustata.github.io/book/_downloads/5f5f5b6938a482eb2550870b996f2ea7/hw2_hosp.dta

use url.dta, clear

import delimited url.txt, clear

import excel url.xlsx, clear

629. prime#

cls

capture program drop prime

program define prime

capture syntax, n(numlist)

if _rc != 0 {

local rc1 "Let's talk about"

local rc2 "real numbers"

local rc3 "natural numbers"

di "`rc1' `rc2', `rc3', etc."

}

else if `n' <= 1 {

di "`n' is not a natural number greater than 1"

}

else if mod(`n' - 1, 2) - round(mod(`n' - 1, 2)) != 0 {

di "`n' is a fraction"

}

else if mod(`n', 2) == 0 {

di "`n' is an even number "

}

else if mod(`n' - 1, 2) == 0 {

di "`n' is a prime number "

}

else {

di in red "undocumented category"

}

end

foreach num in pwd -5 100 109 1 `c(pi)' . 999 {

prime, n(`num')

}

codify non-prime odd numbers later

630. simulate#

capture program drop _all

program define sampmean

syntax, n(numlist) mean(numlist) sd(numlist)

local id = 1

foreach num in `n' {

clear

set obs `num'

set seed 2

local mean = `mean'

gen x = rnormal(`mean',`sd')

gen id = `id'

save dat_`num', replace

local id = `id' + 1

}

clear

foreach num in 5 20 100 1000 {

append using dat_`num'

}

forvalues i = 1/4 {

sum x if id == `i'

local mean`i': di %3.2f r(mean)

}

local ytext = `mean' + 3

#delimit ;

twoway

(scatter x i,

mcolor(midblue)

jitter(5)

msize(.2)

xlab(1 "5" 2 "20" 3 "100" 4 "1000")

xscale(range(0 5))

xti("")

text(`ytext' 1 "Mean=`mean1'")

text(`ytext' 2 "Mean=`mean2'")

text(`ytext' 3 "Mean=`mean3'")

text(`ytext' 4 "Mean=`mean4'")

legend(off)

)

(lowess x i,

lwidth(1.2)

)

;

#delimit cr

end

qui sampmean, n(5 20 100 1000) mean(20) sd(1.2)

631. xlab 🚧#

qui local numlist 5 20 100 1000

local n: di wordcount("`numlist'")

forvalues i=1/`n' {

local xval: di word("`numlist'", `i')

local xlab: di `"`xlab' `i' "`xval'""'

}

di `"`xlab'"'

632. tw#

capture program drop _all

program define sampmean

syntax, n(numlist) mean(numlist) sd(numlist)

local id = 1

foreach num in `n' {

clear

set obs `num'

set seed 2

local mean = `mean'

gen x = rnormal(`mean',`sd')

gen id = `id'

save dat_`num', replace

local id = `id' + 1

}

clear

foreach num in `n' {

append using dat_`num'

}

forvalues i = 1/4 {

sum x if id == `i'

local mean`i': di %3.2f r(mean)

}

local ytext = `mean' + 3

qui local numlist `n'

local n: di wordcount("`numlist'")

forvalues i=1/`n' {

local xval: di word("`numlist'", `i')

local xlab: di `"`xlab' `i' "`xval'""'

}

di `"`xlab'"'

local tw: di `"twoway (scatter x i, mcolor(midblue) jitter(5) msize(.2) xscale(range(0 5)) xti("") text(`ytext' 1 "Mean=`mean1'") text(`ytext' 2 "Mean=`mean2'") text(`ytext' 3 "Mean=`mean3'") text(`ytext' 4 "Mean=`mean4'") legend(off)) (lowess x i,lwidth(1.2))"'

`tw'

end

qui sampmean, n(5 20 100 1000) mean(20) sd(1.2)

07/05/2023#

633. uncertainty#

\( Y_i = \beta_0 + \beta_1 X_1 + \beta_2 X_2 + \cdots + \beta_N X_N + \epsilon_{i \sim N (\mu, \sigma)} \)

sources:

\(Y_i, X_i\) ascertainment error (design)

beyond biological variation

calibration error

rounding error

duration of followup and censoring

\(\beta_i\) estimation error (analysis)

number of covariates included in models

variance inflation factors

missingness of included covariates

handling of missingness of covariates

sample size of complete dataset

\(\epsilon_i\) model error (science)

outcome distribution

model specification

linear regression for continuous

logistic for binary

mlogit for categorical with no specific relationship among outcomes

ologit for categorical with ordinal relationship among outcomes

exponential for time-to-event in absence of proportional hazards

cox for time-to-event with proportional hazards

poisson for time-to-event with constant hazards

covariate functional form

continuous

mathematical function

splines

transformation (e.g. log)

binary

categorical

interaction insufficiently modelled

human limits (i.e., unsupervised machine learning not utilized)

principal component analysis when c(k) > c(N)

autoencoders

634. j#

1824 23rd st, snohomish, wa 98290

635. phew!#

finally we can hire!

RDA 109905

afecdvi

636. bach#

Why Bach Moves Us | The New York Review

George B. Stauffer

John Eliot Gardiner’s ‘Bach: Music in the Castle of Heaven’

February 20, 2014 issue

I could not help but marvel at the miracle of hearing this music from the Roman Catholic Latin Mass Ordinary, written by a Lutheran composer in Leipzig, in a Reform Jewish temple in New York City

One of my most moving encounters with the music of Johann Sebastian Bach took place in the spring of 1997 in New York City’s Central Synagogue. I was there to pay last respects to Gabe Wiener, a talented young recording engineer who died of a brain aneurysm at age twenty-six. I had approached Gabe earlier in the year to see if his recording company, PGM Classics, would consider collaborating with the American Bach Society, which I led at the time, to produce a compact disc of previously unrecorded organ music from Bach’s circle. Gabe enthusiastically agreed to the proposal, and together we embarked on a project we called “The Uncommon Bach.” We had just settled on the repertory and the organ when I received word of his death.

There was great lamenting at the memorial service that this talented young man had been snatched away in the midst of important work, with so much promise unfulfilled. The service began with Gabe’s recording of Salamone Rossi’s Hebrew setting of the Songs of Solomon, a gorgeous yet relatively unknown Venetian masterpiece \(\cdots\)

637. wayne#

alaska airlines

1184expedia itinerary: 72599861510824

ticket number: 0278008590834

seattle (gate n4) - orange country (terminal b, gate 12)

fri, july 7

6:40pm - 9:28pm (2h 48m duration)

tsapre TT129232B (dec 04, 2025)

07/06/2023#

638. pretty-please#

Show code cell source

from tabulate import tabulate

# Define the table data as a list of lists

data = [

["studentuniverse", "", "mozart", "shaKespeare", "2023-06-15"],

["alaskan", "340733890", "mozart", "shaKespeare", "2023-02-05"],

["frontier", "90102556651", "mozart", "shaKespeare", "2023-07-06"]

]

# Define the table headers

headers = ["account", "number", "username", "password", "date"]

# Generate the table

table = tabulate(data, headers, tablefmt="")

# Print the table

print(table)

account number username password date

--------------- ----------- ---------- ----------- ----------

studentuniverse mozart shaKespeare 2023-06-15

alaskan 340733890 mozart shaKespeare 2023-02-05

frontier 90102556651 mozart shaKespeare 2023-07-06

data[0][4]

'2023-06-15'

639. seattle#

paramount

911 pine st, seattle, wa 98101

confirmation 133704514

expedia itinerary 72600817540441

thu, jul 6 4pm - fri, jul 7 noon

640. homecoming#

mon, jul 10 at 7:56pm

orange county -> baltimore

trip confirmation HFLJJA

641. tas#

spring term 2024

xujun gu

ning meng

mgb

07/07/2023#

642. apple#

alex half-life

game for apple glasses

recommended by ángel

643. fawaz#

got qualified analyst to apply for job posting

schedule an interview as soon as application received

double-check by 07/12/2023 to make sure we’re not lagging behind

644. seattle#

i5 & Boren, Convention center & sparghetti

8th & Pine, Paramount Hotel

8th & Blanchard, Amazon

1st/2nd & Blanchard (ie 115 Blanchard), Have a heart

Boca

Provoleta

Empanadas x2

Ceviche

Bodhizafa

Westcoast IPA

Georgetown Brewing

645. sound#

Two Brazilians

Look Italian

And sound too

But it’s Portuguese

646. thc#

Day after 400mg THC

Should never exceed 20mg

Perhaps always limit to 10mg

Would give you 10 days

And that is reasonable

\( Y = \beta_0 + \beta_1 X_1 + \cdots + \beta_N X_N + \epsilon_{i \sim N(\mu, \sigma)} \)

\(Y, X\) - sensory

\(\beta_i\)- processor

\(\epsilon_i\)- muscle/bone/agency

\(i\) - sampling, power, big-legs, limits

Auto-encoder

Predictions

Beta-coefficients \(b[k,1]\)

Variance-covariants \(V[k+df,b)\)

Regression (phenotype, dynamic), Survey (genotype, fixed)

647. catalog#

Python script to compile all ergot papers and create links with title & author

Later create page with hyperlinks for all the SRTR code-snippets

There’ll be a legacy repo but use that to launch a repo with built-in:

version control

collaboration in-build

gihub co-pilot

648. philosophy#

and education. with regard to regression analysis:

Why are most didactic experiences dedicated to interpreting \(\beta_i\)?

Should there be a threshold of \(\epsilon_i\) below which there’s no point interpreting \(\beta_i\)?

Virtually all clinical trials are based on \(\beta_i\).

The very selective sampling makes \(\epsilon_i\) and prediction of \(\hat Y\) quite reasonable

But the real-world application will suck: \(cf\) 95% efficacy of COVID mRNA vaccines

Let \(\epsilon_i \propto \frac {1}{1 - \beta} \)

649. poland#

stefan (Uber driver)

$100 for a night in 5-star hotel

A dollar goes a long way

So be sure to go on vacation

was imprisoned and expelled from his home-country in the 70s

present regime has compensated him for this transgression

meanwhile he has established a life for his family in the us

650. seatac#

train to downtown $\(5\)

uber is \(75\)

seatoc halfway between seattle & tacoma, near tenton

if in no mood of spending, use the light rail

651. wayne#

most g5’s ever seen in one place

also a pretty large commercial airport

santa ana, orange country, ca

07/08/2023#

652. five-o’clock#

woken me up

call to prayer at 4:20

it’s a song

653. tameth#

\(Y_i = \hat Y_i + \epsilon_i\)

07/10/2023#

654. verbs#

tameth\(1 - \frac{SSR}{SST} \sim 1 - \frac{var(\epsilon_i)}{var(Y_i)}\)whineth\(\frac{SSR}{SST} \sim \frac{var(\epsilon_i)}{var(Y_i)}\)hideth\(\hat Y_i \sim Y_i - \epsilon_i\)

655. amy#

she’s a text away from fawaz!

didn’t even so much as say bye

the deterioration is attributable to mgb

656. hangar24#

its 1 mile from john wayne aiport

has over 15 local craft beers on tap

embedded within a coorporate area

ambience, comfort, menu items, etc

my rating is 4.5/5; not a wide-range of clientelle

657. soca#

a sort of paradise: the weather, beaches, lifestyle

how may one live in this environment and achieve flow?

that is a question to be gently approached in the coming years?

07/11/2023#

658. b’more#

ewns, home is best!

659. auteurs#

chaplin

woody

perry

actors, screenwriters, and directors that demanded and attained control when they became producers

660. contest#

while The Economist was first published in September 1843 to take part in a severe contest between intelligence, which presses forward, and an unworthy, timid ignorance obstructing our progress, the more enduring contest is really between beauty, which needs no explanation, and truth, which has believers and doubters.

aesthetics is the subject focusing on beauty and ethics is the one about truth. it should be noted that while beauty is self-evident and compelling truth and ethics start off with declarations about their self-evident essence, which is outrightly rejected by many.

to speak more specifically, ethics and truth represent conscious or unconscious wishes by the more caring kinds of human beings, who wish to protect vulnerable lambs from wolves. aesthetics and beauty by contrast simply represent effects that inspire awe, wonder, and life.

so why are these two at war? can’t honest neighbors be friends? unfortunately, truth and beauty are mutually exclusive. a beautiful face might have launched a thousand war ships, but in general beauty doesn’t inspire war. it is arguments and disagreements about the truth that account for virtually all wars and conflicts in history.

beauty is never contested. even the trojan war wasn’t a contest about beauty, it was a contest for an acclaimed beauty. and often beauty is portayed as lacking in agency, vulnerable, in need of rescue. and here is precisely where truth and war creep in.

for who is the truest and worthiest knight in shinning armour? beauty always goes with the winner of the duel, battle, or war. dead shepherd, now i see thy saw of might: carefree, mocking, unconcerned, scornful, coercive, violent —so wisdom wisheth us; she is a woman, and ever loveth only a warrior.

661. understood#

why don’t i have the urge to correct a wrong impression one may have of me?

more generally, why are folks so afraid of being misunderstood?

is there really anything at stake or is it just ego?

662. wilde#

Beauty is a form of Genius–is higher, indeed, than Genius, as it needs no explanation. It is one of the great facts of the world, like sunlight, or springtime, or the reflection in the dark waters of that silver shell we call the moon. It cannot be questioned

663. actions#

a solder or warrior is never afraid of being misunderstood!

664. verbs#

07/12/2023#

665. coping#

illusions. the ideas that inspite of, and perhaps because of, their inaccuracy enable healthy coping with the human condition

delusions. ideas that interfere with our ability to relate with fellow man and to function socially

artists. creators of the most compelling illusions that have sustained motivation and the will-to-life in the delicate creature, man

scientists. that subgroup of mankind that didn’t get the memo on the absolute necessity of illusions for the general and specific wellness of man

philosophers. virtually every man and woman; more’s the pity that most haven’t bothered to study their forebears!

666. acting#

isn’t it upsetting when your hero, who always seemed to be in control of things, turns out to be merely an oustanding actor? beware of meeting your hero in person: comes with a huge risk and might precipitate cognitive dissonance at best and an existential crisis at worst.

for some their hero is the scientific enterprise. and so they dedicate a lifetime as participants, gladiators if you will, in the arena. it is here that they become acquainted with their hero and, alas, the veil of illusion is lifted.

art should celebrate life in the midst of mystery, without any inkling of despair or the illusions sought by science.

667. jhu#

president ron daniels

his vision for jhu by 2020

Johns Hopkins is a resilient, mission-driven place that refuses to wait for the ideal conditions, but instead seizes upon all opportunity for brave innovation. This is a core feature of our institutional DNA.

668. catchphrase#

my cup runneth over

ekikompe kyange kiyiika

la mia tazza straripa

669. orangecounty#

the clear quran

a thematic translation

dr. mustafa khattab

My dear brother Abi,

I wish you the best in this life and the hereafter

Fawaz

Please Note: The Quran, sometimes written as “Koran,” is a sacred book of Mulsims, who believe that God has revealed His word and message to humanity throughout the ages. For example, we believe that Moses was given the Torah, David was given the Psalms, and Jesus was given the Gospel. In the same way, we believe that Muhammad was given the Quran. Muslims therefore treat the holy books of other faiths with greate care and respect. For instance, we do not read these books in the bathroom or place them on the floor. It is our request that you treat this book with the same respect and handle it as you would your own scripture. If you do not want this copy, please do not throw it away. You may give it to any Muslim you know or your nearest Islamic center. You may also call us at 1-888-273-2754, and we will arrange to pick it up.

670. enfrançais#

Yes, there is a link between the French words “voir,” “savoir,” and “pouvoir.” These three words are part of a group of verbs known as the “irregular -oir verbs.” While they are not conjugated in the same way as regular -ir verbs, they do share some similarities.

The common link between these three verbs is their infinitive endings: -oir. This ending is characteristic of a group of verbs in French, and it often indicates the verb’s meaning or function.

Here’s a breakdown of each verb and its meaning:

“Voir” - This verb means “to see.” It is used to express the act of perceiving something visually. For example:

Je vois un oiseau. (I see a bird.)

Est-ce que tu vois la mer? (Do you see the sea?)

“Savoir” - This verb means “to know.” It is used to express knowledge or awareness of information or facts. For example:

Je sais parler français. (I know how to speak French.)

Savez-vous où se trouve la bibliothèque? (Do you know where the library is?)

“Pouvoir” - This verb means “to be able to” or “can.” It is used to express capability or possibility. For example:

Je peux te aider. (I can help you.)

Peux-tu venir demain soir? (Can you come tomorrow evening?)

While these verbs share the -oir ending and are irregular in their conjugation, they do not have any inherent etymological or semantic connection beyond being part of the same verb group.

671. verbs#

pouvoir

tamethto dosavoir

whinethto bevoir

hidethto no

The phrase commonly used to refer to “see no evil, hear no evil, speak no evil” is “les trois singes de la sagesse” in French. Literally translated, it means “the three monkeys of wisdom.” Each monkey represents a different principle: “ne rien voir de mal” (see no evil), “ne rien entendre de mal” (hear no evil), and “ne rien dire de mal” (speak no evil).

Certainly! The variant “do no evil” can be translated as “ne rien faire de mal” in French. It complements the traditional phrase “see no evil, hear no evil, speak no evil” with the additional principle of not doing any evil actions.

672. belgians#

orval

6.2%3:45PM - 5:20PMwestmalle dubbel

7.0%5:20PM - 8:37PMduvel

8.5%8:37PM - 11:04PMwestmalle tripel

9.5%00:24PM - 02:01AMst. bernardus abt 12

10.0%04:18AM - 06:02AM

673. clever#

nutrition

environment

hygiene

There is a midway condition which a man of such a destiny will not be able to recall without emotion: it is characterized by a pale, subtle happiness of light and sunshine, a feeling of bird-like freedom, bird-like altitude, bird-like exuberance, and a third thing in which curiosity is united with a tender contempt. A free-spirit, this cool expression, does one good in every condition, it’s almost warming. One lives no longer in the fetters of love and hatred, without yes, without no, near or far as one wishes, preferably slipping away, evading, flattering off, gone again, again flying aloft; one is spoilt, as everyone is who has at sometime seen a tremendous number of things beneath him — and one becomes the opposite of those who concern themselves with things that have nothing to do with them. Indeed, the free-spirit hence forth has only to do with things — and how many things! — with which he is no longer concerned...

from January 14, 2020 at 3:15AM + 8hrs

Is there any culture in human history that treats each cohort of newborns and over a lifetime as sui generis? Of course that would be impracticle; however, at a bare minimum a great culture should at every year from cradle-to-grave do the following:

present a hierachy of challenges for each year

train the cohort so that they might develop skills to match the challenge

weekly peer-driven feedback system to align appropriate challenge-levels with skill-levels for all in the cohort

07/13/2023#

674. today#

simeon

homerun

journaling

675. friends#

develop a system of staying in touch with the wider network of friends

with our aging parents there is just a lot going on for everyone

at times just the humble reassurance about diagnosis, prognosis, and expectations is helpful

some unsolicited advice about avoiding hospitalization at all costs will be offered

but just simply play role of care coordinator, a neglected role across the globe

676. three-peat#

soul, t

\

mind, x --> verb, n --> change, o

/

body, s

677. cluster#

definitive guide to accessing the cluster

07/14/2023#

678. learning#

3. parametric

\

—> 0. humans —> 1. twoway 4. model —> 5. data -> 6. likelihood -> 7. inference

/

2. nonparametric

3e.ἄσκησις,μ

\

—> 0g.κοσμογονία,γ —> 1f.ἡἔρις,κ 4d.∫δυσφορία.dt,ψ —> 5c.φάρμακον,δ -> 6b.ομορφιά,β -> 7a.τάξη,α

/

2e.πρᾶξις,σ

3. supervised

\

—> 0. machines —> 1. networks: adversarial 4. pca —> 5. autoencorder -> 6. deep -> 7. adaptive

/

2. unsupervised

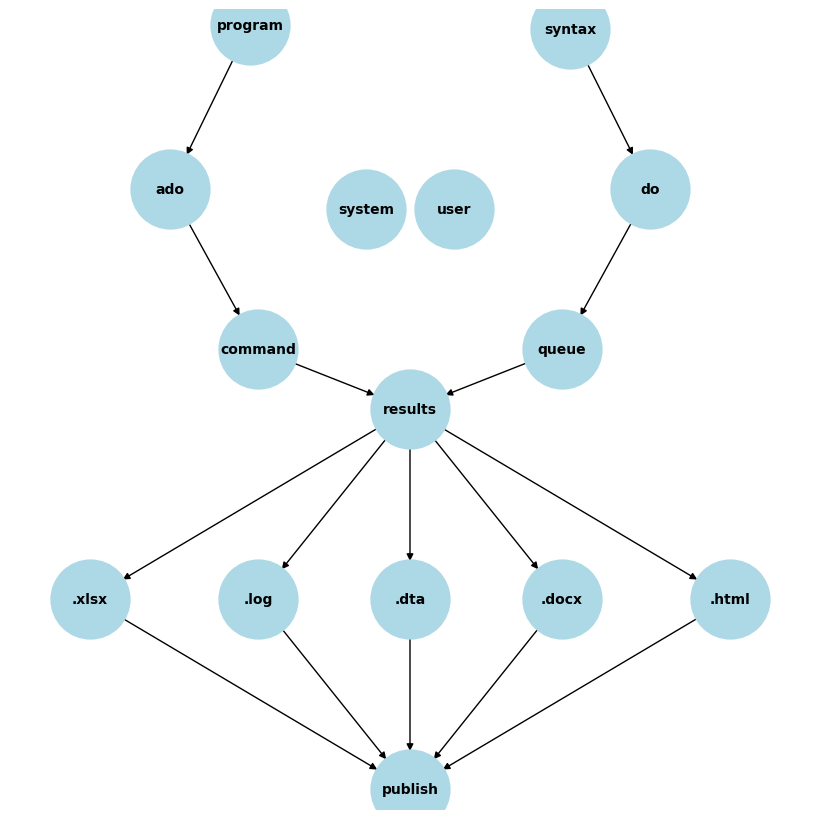

679. publishing#

markup your document and save it as .txt, .do, .md, or .do

<<dd_version: 2>>

'''

<<dd_do>>

webuse lifeexp, clear

encode country, gen(Country)

twoway scatter lexp Country, xscale(off)

graph export lexp_bycountry.png, replace

qui sum lexp

<</dd_do>>

'''

<<dd_graph>>

Here's a summary of the data: The total number of countries is

<<dd_display: r(N)>>, mean life expectancy is <<dd_display: r(mean)>>, and the

standard deviation is <<dd_display: r(sd)>>

type the following command into the stata command window:

dyndoc publish.do, saving(publish.html)

inspect your output in the stata monitor

. dyndoc publish.do, saving(publish.html)

. webuse lifeexp, clear

(Life expectancy, 1998)

. encode country, gen(Country)

. twoway scatter lexp Country, xscale(off)

. graph export lexp_bycountry.png, replace

file /Users/d/Desktop/lexp_bycountry.png saved as PNG format

. qui sum lexp

.

successfully converted "/Users/d/Desktop/publish.html"

.

now inspect what is outputed to the workfolder

ls -l

. ls -l

total 456

-rw-r--r- 1 d staff 59500 Jul 14 03:37 Graph.svg

-rw-r--r- 1 d staff 59500 Jul 14 03:38 Graph1.svg

-rw-r--r- 1 d staff 96154 Jul 14 03:38 lexp_bycountry.png

-rw-r--r- @ 1 d staff 401 Jul 14 03:37 publish.do

-rw-r--r- @ 1 d staff 461 Jul 14 03:38 publish.html

-rw------ 1 d staff 402 Jul 14 03:37 ~publish.do.stswp

.

what is publishing?

handing in homework as .pdf or .html which unlike a .log file can have text and output intermingled and with code and output as options

sharing in-house exploratory data analyses with collaborators

conference abstract

original research submitted to a peer-review journal

brochures by a pharmaceutical company describing results from a clinical trial of their latest drug

etc.

Show code cell source

import networkx as nx

import matplotlib.pyplot as plt

G = nx.DiGraph()

G.add_node("user", pos=(550, 500))

G.add_node("system", pos=(-550, 500))

G.add_node("program", pos=(-2000, 960))

G.add_node("syntax", pos=(2000, 950))

G.add_node("ado", pos=(-3000, 550))

G.add_node("do", pos=(3000, 550))

G.add_node("command", pos=(-1900, 150))

G.add_node("queue", pos=(1900, 150))

G.add_node("results", pos=(0, 0))

G.add_node("dta", pos=(0, -475))

G.add_node("log", pos=(-1900, -475))

G.add_node("excel", pos=(-4000, -475))

G.add_node("word", pos=(1900, -475))

G.add_node("html", pos=(4000, -475))

G.add_node("publish", pos=(0, -950))

G.add_edges_from([("program", "ado"), ("syntax", "do")])

G.add_edges_from([("ado", "command"), ("do", "queue")])

G.add_edges_from([("command", "results"), ("queue", "results"), ("results", "excel"), ("results", "word"), ("results", "html")])

G.add_edges_from([("results", "dta"), ("results", "log")])

G.add_edges_from([("dta", "publish"), ("log", "publish"), ("excel", "publish"), ("word", "publish"), ("html", "publish")])

pos = nx.get_node_attributes(G, 'pos')

labels = {"user": "user",

"system": "system",

"program": "program",

"syntax": "syntax",

"ado": "ado",

"do": "do",

"command": "command",

"queue": "queue",

"results": "results",

"dta": ".dta",

"log": ".log",

"excel": ".xlsx",

"word": ".docx",

"html": ".html",

"publish": "publish"}

plt.figure(figsize=(8, 8))

nx.draw(G, pos, with_labels=False, node_size=3000, node_color="lightblue", linewidths=3)

nx.draw_networkx_labels(G, pos, labels, font_size=10, font_weight='bold')

plt.xlim(-5000, 5000)

plt.ylim(-1000, 1000)

plt.axis("off")

plt.show()

do the pat hack

cp Graph.svg _build/html/Graph1.svg

cp publish.html _build/html/publish.html

680. time#

active

magic

passive

Let’s explore the contrast between active and passive processes, which occur over time, and miracles, which are not bound by cause-effect relationships or the dimension of time.

680.1 active#

Active processes involve a series of intentional and directed actions that take place over a period of time. They are characterized by cause-and-effect relationships and a sense of agency or deliberate effort. Here are some key attributes of active processes:

Intentionality: Active processes are initiated and driven by conscious decisions or actions.

Cause and Effect: They follow a logical sequence of steps, where each action has a direct impact on the subsequent outcome.

Time-bound: Active processes unfold over a specific duration, with a beginning, middle, and end.

Human Agency: They involve human intervention, decision-making, and effort to achieve a desired outcome.

Measurability: Active processes can often be measured or assessed based on predefined metrics or milestones.

Examples of active processes include project management, problem-solving methodologies, skill development through practice and training, or any task that requires deliberate action and progresses over time. [18]

680.2 passive#

Passive processes, also known as natural or inherent processes, occur over time without conscious or deliberate human intervention. They are driven by natural laws, environmental factors, or inherent properties of a system. Here are some key attributes of passive processes:

Natural Laws: Passive processes operate according to predetermined rules or laws of nature.

Cause and Effect (Indirect): While passive processes still exhibit cause-and-effect relationships, the cause is often indirect and less influenced by human agency.

Time-bound: Passive processes also unfold over time, but their progression may be slower or less perceivable.

Environmental Factors: They are influenced by external conditions, such as climate, geology, or biological systems.

Self-Organization: Passive processes often involve self-regulating or self-organizing systems that maintain stability or equilibrium.

Examples of passive processes include erosion, weathering, ecological succession, natural healing or recovery processes, or the growth of plants in response to environmental factors. While these processes can be influenced by external factors, they primarily occur without conscious human intervention.

680.3 miracle#

Miracles, as mentioned earlier, are extraordinary events or phenomena that defy the laws of nature and cause-effect relationships. Here are the contrasting aspects between active/passive processes and miracles:

Cause and Effect: Active and passive processes are governed by cause and effect, where specific actions or natural laws drive the progression of events over time. In contrast, miracles are seen as exceptions to cause-effect relationships, often defying rational explanation.

Time Dimension: Active and passive processes occur over time, with a sense of progression, while miracles are not bound by the dimension of time. Miracles are considered spontaneous, exceptional events that transcend the normal flow of cause-effect relationships.

Human Agency: Active processes involve deliberate human intervention and effort, whereas passive processes occur naturally without direct human influence. In contrast, miracles are often attributed to supernatural or divine forces beyond human control.

Predictability: Active and passive processes are generally predictable to some extent, with expected outcomes based on known factors or laws. Miracles, however, are viewed as unpredictable and extraordinary, defying expectations and logical explanations.

In summary, active and passive processes involve cause-effect relationships and occur over time, with active processes driven by deliberate human action and passive processes occurring naturally. Miracles, on the other hand, transcend cause-effect relationships, defy rational explanation, and are not bound by the dimension of time.

681. joshua#

new resident portal as of 07/01

password: shaKespeare

but use app

682. yay!#

jhu offer of employment

recruitor system@successfactors.com

View/Accept Offer ...

683. chordstack#

Professor Vaughn Brathwaite - The Gospel University

His Piano system will take you to another level

Berklee College of Music

I’ve signed up & should remain committed for 10y

684. gratitude 🙏#

andrew

vincent

fawaz

07/15/2023#

685. flow#

i guess i’ve been in flow this past week, since returning from the west coast

686. newlove#

victoria monét is a dionysian girl per excellence

her art form is beyond good & evil: even beyoncé can’t say that

but its really her reverence for tradition that wins me

and yet its not mere ancestral worship: she draws blood at other times

smoke is probably the best proof of this assertion

687. r&b#

her - gotta move on

sir - nothing even matters

689. tonibraxton#

sui generis

690. ye#

father stretch my hands, pt. 1

691. stata#

grading heuristic

c - visualize observed data (enhance basic stuff from epi)

b - compare observed to expected (r-class: o/e test-statistics, e(N)/r(N))

a - decision about quality or meaning of data (p-values, credibility intervals)

timeline

oneway

twoway

multivariable

hierarchical

r, python, & ai

692. tutor#

Hey Abimereki, it's Vaughn J Brathwaite from Gospel University. Our ZOOM call is on Jul 15 @ 04:00 PM . Here's the ZOOM link: https://us06web.zoom.us/j/88924354455?pwd=VDhvd21LTDlwTUF2WHB4NGpUVGg2dz09 . Also, Vaughn wants you to watch this video before our Zoom call - join.gospelu.com/pre-call-hw

Sent by OnceHub.

693. veranolibro#

start experimenting

for the 340.600

have #691 above in mind

694. annuŋŋamya#

continue building variations on the original theme

recruit the chord-stack system

and lets see how that workrs

695. organ#

gospel university

online gospel music academy

vaughn (pronounced von) brathwaite

berklee college of music

chord stack system

Your upcoming appointment is starting in 1 hour

Dear Abimereki,

Your appointment is starting in approximately 1 hour. Please see below for more information.

Booking details

Subject

Chord Stack System - Consultation

Booking page

Vaughn Brathwaite

Time

Sat, Jul 15, 2023, 04:00 PM - 05:00 PM

United States; Eastern time (GMT-4:00) [DST]

Cancel/Reschedule

Additional guests

Conferencing information

When it's time, join the meeting from PC, Mac, Linux, iOS or Android:

https://us06web.zoom.us/j/88924354455?pwd=VDhvd21LTDlwTUF2WHB4NGpUVGg2dz09

Passcode: piano

Access Information:

Where: Zoom

Meeting ID: 88924354455

Meeting passcode: piano

Booking ID

BKNG-AFCY906BGZHP

consulation session

united credit (855) 503 1800 of irvine, california

please upload photo id (did passport)

lending point aggreement signed

Hi Abimereki,

Please sign this Non-Disclosure agreement (which confirms that you will NOT share any information from the GMC program with the public) to continue your enrollment process for the Gospel Musician Coaching program.

After this document has been completed, you will receive instructions on how to setup and access ALL the materials within the Gospel Musician Coaching program.

Congratulations on joining the program!

Cheers,

Customer Success Team - Gospel University

Hi Abimereki,

I'm Lorenzo and I'll be your piano coach and mentor over the next 6 months!

I'm excited to meet you and get started.

Here is the link where you can book your onboarding call with me - CLICK HERE

On our first meeting we'll go through your playing video that you submitted and I'll give you some feedback and guidance on where to go from there.

Looking forward to meeting you!

- Lorenzo

The Gospel University, 1545 VFW PKWY, West Roxbury, MA 02132, United States, 8888743930

Unsubscribe Manage preferences

signed gospel music coaching agreement

gospel music coaching program

moZart1234

Hey Abimereki,

Welcome to Gospel Musician Coaching!

I’m looking forward to our journey together.

Follow these IMPORTANT Steps:

1. Find your Login Link Instructions (this was sent to you in a separate email), then....

2. Login here - https://academy.thegospeluniversity.com/users/sign_in

3. Complete your ONBOARDING process here - CLICK HERE

It'll walk you through the entire Gospel University learning platform and show you where everything is.

4. Make sure you introduce yourself in the GMC Student Community - CLICK HERE

OTHER IMPORTANT STUFF:

REMEMBER! Do NOT share any links or any information provided within the GMC program with anyone OUTSIDE the program. Doing so VOIDS your signed agreement with us.

Welcome again, and if you have any questions feel free to email us at: support@thegospeluniversity.com

Welcome to the GMC family.

- Vaughn

Your upcoming appointment is starting in 10 minutes

Dear Abimereki,

Your appointment is starting in approximately 10 minutes. Please see below for more information.

Booking details

Subject

Chord Stack System - Consultation

Booking page

Vaughn Brathwaite

Time

Sat, Jul 15, 2023, 02:30 PM - 03:30 PM

United States; Eastern time (GMT-4:00) [DST]

Cancel/Reschedule

Additional guests

Conferencing information

When it's time, join the meeting from PC, Mac, Linux, iOS or Android:

https://us06web.zoom.us/j/88924354455?pwd=VDhvd21LTDlwTUF2WHB4NGpUVGg2dz09

Passcode: piano

Access Information:

Where: Zoom

Meeting ID: 88924354455

Meeting passcode: piano

Booking ID

BKNG-AFCY906BGZHP

696. stata#

i unconsciously seperated the conceptual (sessions 1-5) from the technical (hub notes 1-27)

but lets formalize this and capitalize on it to advance our cause even more efficiently

should help develop the grading rubric so that weights are meaningfully assigned

697. recap#

fingers = voices

so.. voicing

confused me for a min

of course musics origins

are entirely vocal

a capella sistina

for worship & praise

well my voicing is

going to advance

from octave to more

nuanced in left hand

698. zone#

been in it lately!

699. migration#

~/dropbox/1f.ἡἔρις,κ/1.ontology

ai, python, r, and stata workflows

careful deployment of naming conventions

jupyter-book folders will be seasons

github-repo clones will be books

lets compile the language variants