06/01/2023#

356. jhnexus#

k08 accounts

reconciliation

approval

357. bwv232#

kyrie eleison lord, have mercy

christe eleison christ, have mercy

kyrie eleison lord, have mercy

gloria in excelsis deo glory to god in the highest

et in terra pax and on earth, peace

laudamus te we praise you

gratias agimus tibi we give thanks to you

domine deus, rex coelestis lord god, heavenly king

qui tollis peccata mundi you who take away the sins of the world

qui sedes ad dexteram patris who sits at the right hand of the father

quoniam tu solus sanctus for you alone are the holy one

cum sancto spiritu with the holy spirit

credo in unum deum i believe in one god

patrem omnipotentem the father almighty

et in unum dominum and in one lord

et incarnatus est and was incarnate

crucifixus crucified

et resurrexit and rose again

et in spiritum sanctum and in the holy spirit

confiteor unum baptisma i confess one baptism

et expecto and i await

sanctus dominus deus sabaoth holy lord god of hosts

osanna in excelsis hosanna in the highest

benedictus qui venit blessed is he who comes

osanna in excelsis hosanna in the highest

agnus dei, qui tollis peccata mundi lamb of god, who takes away the sins of the world

dona nobis pacem grant us peace

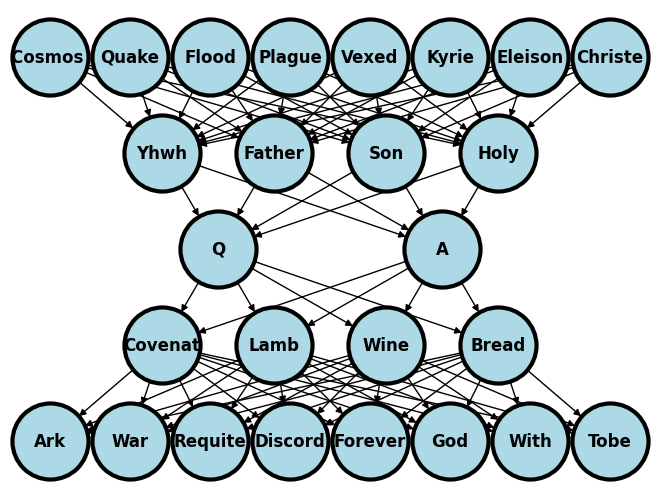

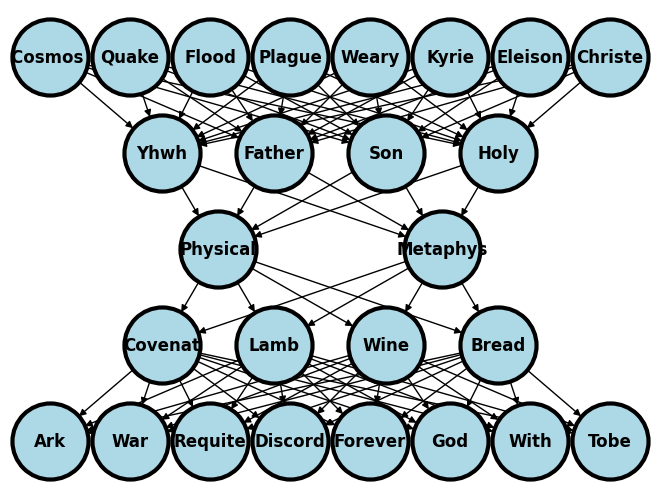

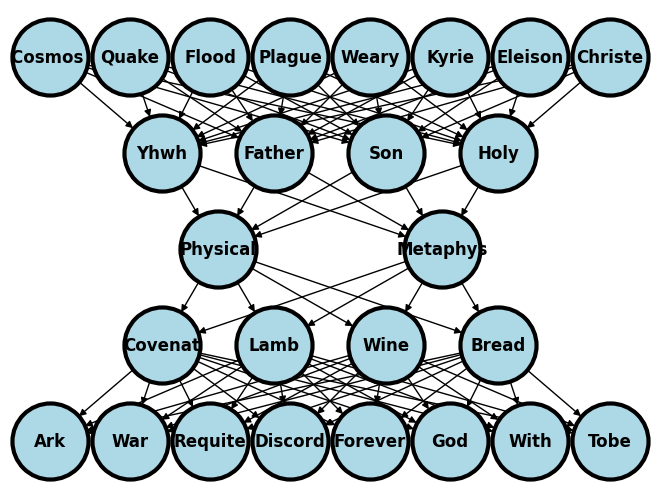

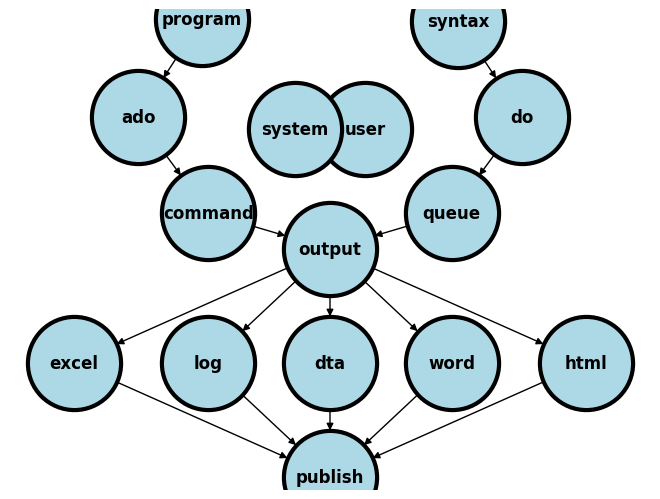

import networkx as nx

import matplotlib.pyplot as plt

import numpy as np

import sklearn as skl

#plt.figure(figsize=[2, 2])

G = nx.DiGraph()

G.add_node("Cosmos ", pos = (0, 5) )

G.add_node("Quake", pos = (1, 5) )

G.add_node("Flood", pos = (2, 5) )

G.add_node("Plague", pos = (3, 5) )

G.add_node("Vexed", pos = (4, 5) )

G.add_node("Kyrie", pos = (5, 5) )

G.add_node("Eleison", pos = (6, 5) )

G.add_node("Christe", pos = (7, 5) )

G.add_node("Yhwh", pos = (1.4, 4) )

G.add_node("Father", pos = (2.8, 4) )

G.add_node("Son", pos = (4.2, 4) )

G.add_node("Holy", pos = (5.6, 4) )

G.add_node("Literal", pos = (2.1, 3) )

G.add_node("Methaph", pos = (4.9, 3) )

G.add_node("Covenat", pos = (1.4, 2) )

G.add_node("Lamb", pos = (2.8, 2) )

G.add_node("Wine", pos = (4.2, 2) )

G.add_node("Bread", pos = (5.6, 2) )

G.add_node("Ark", pos = (0, 1) )

G.add_node("War", pos = (1, 1) )

G.add_node("Requite", pos = (2, 1) )

G.add_node("Discord", pos = (3, 1) )

G.add_node("Forever", pos = (4, 1) )

G.add_node("God", pos = (5, 1) )

G.add_node("With", pos = (6, 1) )

G.add_node("Tobe", pos = (7, 1) )

G.add_edges_from([ ("Cosmos ", "Yhwh"), ("Cosmos ", "Father"), ("Cosmos ", "Son"), ("Cosmos ", "Holy")])

G.add_edges_from([ ("Quake", "Yhwh"), ("Quake", "Father"), ("Quake", "Son"), ("Quake", "Holy")])

G.add_edges_from([ ("Flood", "Yhwh"), ("Flood", "Father"), ("Flood", "Son"), ("Flood", "Holy")])

G.add_edges_from([ ("Plague", "Yhwh"), ("Plague", "Father"), ("Plague", "Son"), ("Plague", "Holy")])

G.add_edges_from([ ("Vexed", "Yhwh"), ("Vexed", "Father"), ("Vexed", "Son"), ("Vexed", "Holy")])

G.add_edges_from([ ("Kyrie", "Yhwh"), ("Kyrie", "Father"), ("Kyrie", "Son"), ("Kyrie", "Holy")])

G.add_edges_from([ ("Eleison", "Yhwh"), ("Eleison", "Father"), ("Eleison", "Son"), ("Eleison", "Holy")])

G.add_edges_from([ ("Christe", "Yhwh"), ("Christe", "Father"), ("Christe", "Son"), ("Christe", "Holy")])

G.add_edges_from([ ("Yhwh", "Literal"), ("Yhwh", "Methaph")])

G.add_edges_from([ ("Father", "Literal"), ("Father", "Methaph")])

G.add_edges_from([ ("Son", "Literal"), ("Son", "Methaph")])

G.add_edges_from([ ("Holy", "Literal"), ("Holy", "Methaph")])

G.add_edges_from([ ("Literal", "Covenat"), ("Literal", "Lamb"), ("Literal", "Wine"), ("Literal", "Bread")])

G.add_edges_from([ ("Methaph", "Covenat"), ("Methaph", "Lamb"), ("Methaph", "Wine"), ("Methaph", "Bread")])

G.add_edges_from([ ("Covenat", "Ark"), ("Covenat", "War"), ("Covenat", "Requite"), ("Covenat", "Discord")])

G.add_edges_from([ ("Covenat", "Forever"), ("Covenat", "God"), ("Covenat", "With"), ("Covenat", "Tobe")])

G.add_edges_from([ ("Lamb", "Ark"), ("Lamb", "War"), ("Lamb", "Requite"), ("Lamb", "Discord")])

G.add_edges_from([ ("Lamb", "Forever"), ("Lamb", "God"), ("Lamb", "With"), ("Lamb", "Tobe")])

G.add_edges_from([ ("Wine", "Ark"), ("Wine", "War"), ("Wine", "Requite"), ("Wine", "Discord")])

G.add_edges_from([ ("Wine", "Forever"), ("Wine", "God"), ("Wine", "With"), ("Wine", "Tobe")])

G.add_edges_from([ ("Bread", "Ark"), ("Bread", "War"), ("Bread", "Requite"), ("Bread", "Discord")])

G.add_edges_from([ ("Bread", "Forever"), ("Bread", "God"), ("Bread", "With"), ("Bread", "Tobe")])

#G.add_edges_from([("H11", "H21"), ("H11", "H22"), ("H12", "H21"), ("H12", "H22")])

#G.add_edges_from([("H21", "Y"), ("H22", "Y")])

nx.draw(G,

nx.get_node_attributes(G, 'pos'),

with_labels=True,

font_weight='bold',

node_size = 3000,

node_color = "lightblue",

linewidths = 3)

ax= plt.gca()

ax.collections[0].set_edgecolor("#000000")

ax.set_xlim([-.5, 7.5])

ax.set_ylim([.5, 5.5])

plt.show()

359. mozart#

domine deus is my fave from mass in c

but the kyrie-christe-kyrie might be a close second

or perhaps they tie? granted, the credo brings some memories!!

were i more firm in my credo those painful memories might have been of victory!!!

just realized that i’ve to distinguish credo: credo in unum deum from credo: et incarnatus est

oh boy. mozart b winning. bach & ludwig stand no chance if we are to go by stealing the heart, zero competition

anyways:

otherwise; i wish to discuss with you — much later — the possibility of having a listening session for musical interpretations of Latin mass by Bach, Mozart & Beethoven. I’ve been doing this by myself (pretty lonely) for the last 17 years. But if there are enthusiasts for Latin mass like you, it would open up the chance to share this very rich pedigree from the 17th & 18th century Prussia

360. !chronological#

senses: mozart

mind: bach

flex: beethoven

361. vanguard#

it’s taken them this long to go live?

find time to investigate why

anyways, they’re here…

362. große messe#

372. unfinished#

mozart’s mass

schubert’s symphony in b minor

i don’t care what the composers themselves say

these are complete works

absolutely nothing is unfinished

only the view of genre imposed this title

große messe is the only mass that draws blood

bach’s and ludwig’s are masterpieces but

only from a cerebral or flex stance

373. gloria#

mozart’s gloria: qui tollis

obviously written while he studied handel

recalls handel’s messiah: overture & more

374. autoencoding#

works of art

representation

same idea but for algorithms

to imitate is to interpret the world meaningfully

375. autoencode#

encode

encyclopedia

western

styles, forms, procedures

-

musical offering

the art of fugue

b minor mass

decode

we know of no occasion for which bach could have written the b-minor mass, nor any patron who might have commissioned it, nor any performance of the complete work before 1750

376. schubert#

how stand i then?

06/02/2023#

377. esot#

plan

go

378. service#

full of vexation come i, with complaint (kyrie)

lauded service provider (gloria)

essence of offering (credo)

the quality of service (sanctus)

our goal is to have a happy customer! (agnus dei)

379. vivaldi#

what does it say of him that his best liturgical work is gloria in d?

do any of his descendants surpass the spirit of praise in domine fili unigenite?

his domine deus, rex coelestis might as well be by bach, his descendant!

can’t fault him for range: the two gloria pieces above straddle a wide range of emotions

i think vivaldi & handel were the last bastion of light, tight instrumentation

those were the days when instrumentation was nothing more than support for voice

380. laudate#

mozart’s vesperae solennes de confessore in c, k.339

psalm 116/117

o praise the lord, all ye nations

praise him, all ye people

from time to time god must feel mercy on humanity

and so he sent an angel: wolfgang ama-deus mozart. and …now another heavenly voice: barbara hendricks

there is no other explanation for such wonder of sound, the direct key to our soul! – youtuber comment

381. automation#

how autoencoding is the basis for all of mankind’s progress

encode essence of human services (e.g., bank teller)

code to replicate process (e.g., SAS, etc)

decode service delivery (e.g. atm)

witnessed similar process at ups store today:

place order online

show up to print label

bypass those in line

just drop off

voila!

382. workflow#

autoencode with vincent

extract very essence

then iterate!!!

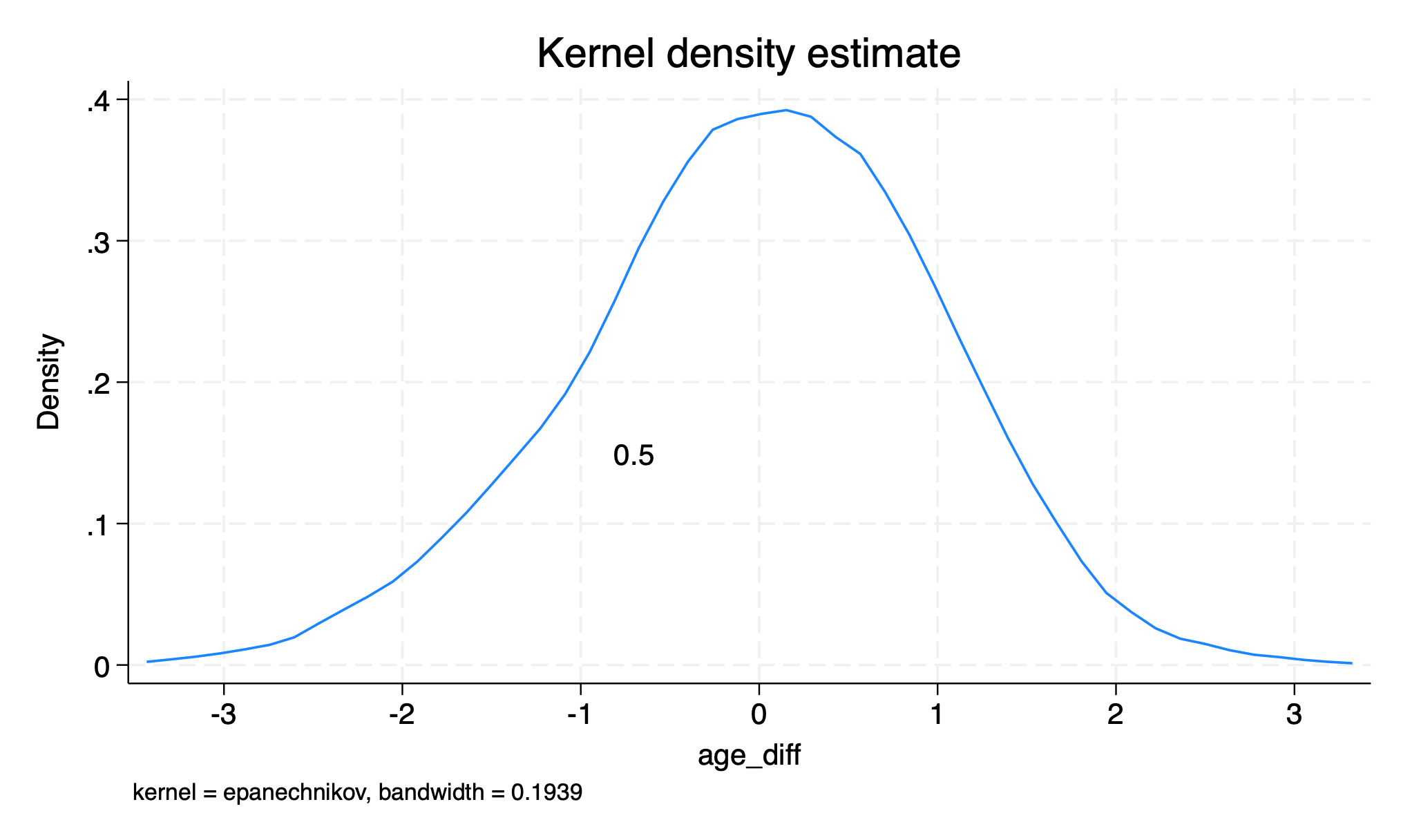

383. drugs&aging#

384. atc#

june 5

arrive

june 6

amy talk

june 7

depart

385. feedback#

most detailed feedback

from department of ih

it’s very rich & a+

Hi Xxx,

This is very detailed and extremely helpful feedback, thanks!

Will also be confirming on Monday whether I’ll be able to have you as TA this summer.

But for next spring, you are already booked if you’re still interested!

Yo

From: Xxx Xxx xxx123@jhmi.edu

Date: Friday, June 2, 2023 at 7:20 AM

To: Yours Truly truly@jhmi.edu

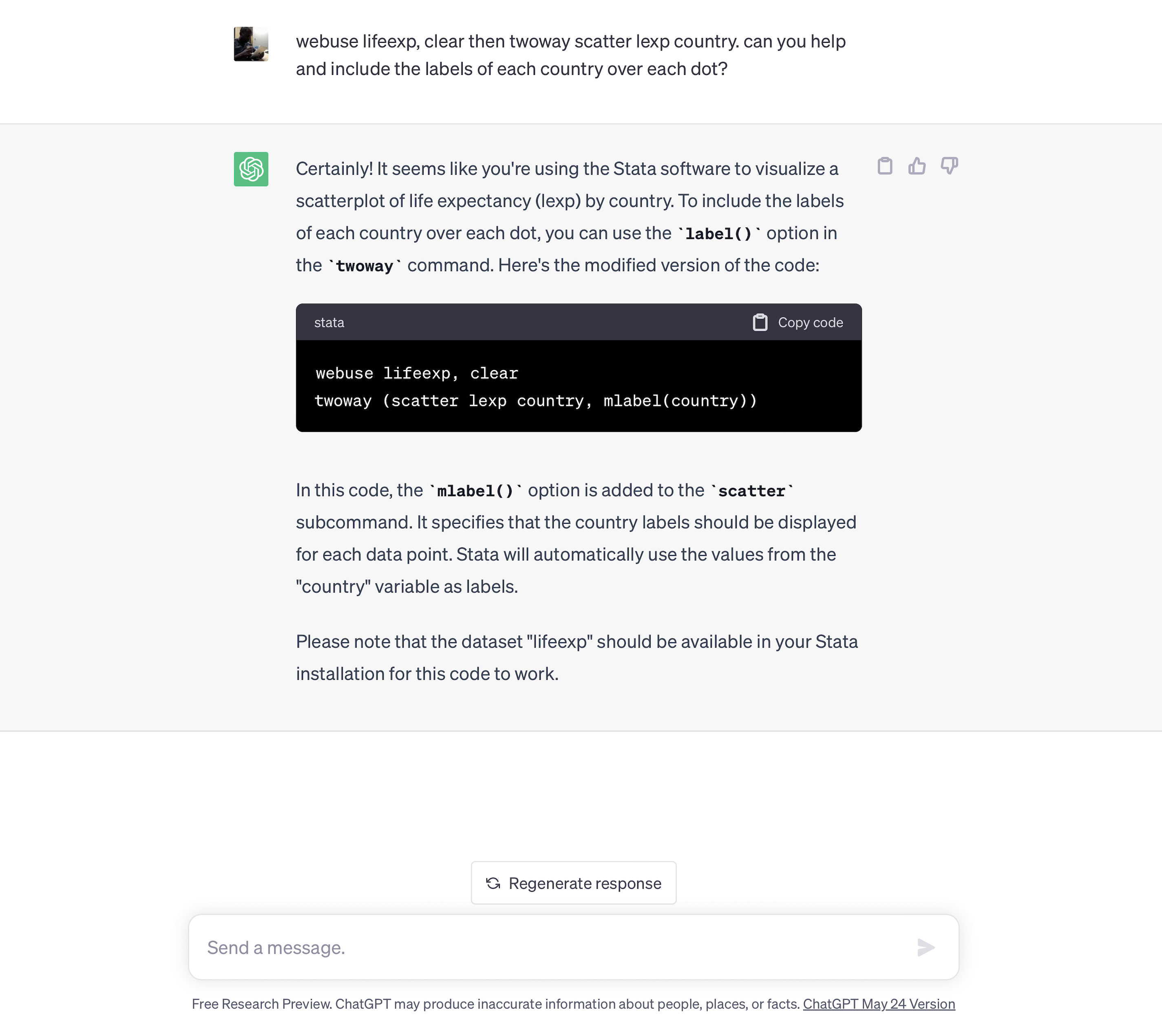

Subject: Feedback on STATA programming_xxxxx

Hi Yo,

I hope this message finds you well. As a student in your recent STATA programming course, I am taking this opportunity to share my feedback. Please understand this is offered constructively, and I truly appreciate your dedication to teaching this course. It has been a valuable learning experience for me.

Also if you still have time, you can send me specific questions and I can answer them again.

Course Introduction for Novices:

The first lecture appeared to be quite advanced, and this proved to be a challenging starting point for those new to STATA. I still remember on the first day of the class, a bunch of “horrible” coding has been shown, and one of my classmates told me that she would quit the course as she cannot understand most of the knowledge of the first lecture. I believe the course could benefit from a simpler code introduction in the first class, to ease new users into the platform and set them up for future success.

Code Complexity:

There were times when the complexity of the code made comprehension difficult for some students. As I think the primary goal is to help students understand the logic of commands, it might be beneficial to break down complex coding sequences into simpler steps, or to provide more detailed explanations of complicated code. The complicated codes and example can be introduced if most students understand the logic.

Github Organization: The Github repository for the course could potentially be more intuitively organized, which would make it easier for students to locate and utilize course resources (e.g: sometimes it can be difficult to find the corresponding codes just based on the content table).

Syllabus Structure: While the syllabus provided an overview of the course, a more detailed, organized structure would help students prepare better for each session and understand the progression of the course (What will be done in this week? What is the goal of today’s session? Which commands will be taught today? These can be listed on Github)

TA Availability: The class could greatly benefit from additional TA support, particularly during live in-person sessions. This would allow for more immediate responses to student queries and might enhance the overall learning experience.

STATA Logic Session: Incorporating a session dedicated to explaining the logic of STATA could help students grasp the reasoning behind certain programming methods and better understand how to apply them in their own work (e.g: when STATA is more convenient than other statistical language? How to find STATA resources if meet problems? How to manage the database of STATA?)

Practice Questions: The inclusion of ungraded practice problems after each session could be a useful way for students to apply the newly learned knowledge and skills. This could also help reinforce the key takeaways from each lesson.

Overall, your course has been immensely insightful and provided me with an array of new techniques and a deeper understanding of STATA. I believe these suggestions might help to further refine the course for future cohorts.

Thank you for your understanding, and I appreciate your open-mindedness to student feedback. I look forward to continuing to learn from your expertise in future courses.

Best regards,

Xxx Xx

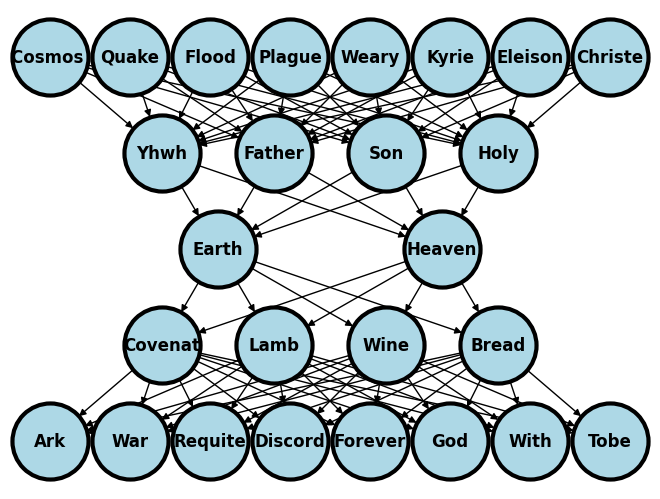

import networkx as nx

import matplotlib.pyplot as plt

import numpy as np

import sklearn as skl

#plt.figure(figsize=[2, 2])

G = nx.DiGraph()

G.add_node("Cosmos ", pos = (0, 5) )

G.add_node("Quake", pos = (1, 5) )

G.add_node("Flood", pos = (2, 5) )

G.add_node("Plague", pos = (3, 5) )

G.add_node("Vexed", pos = (4, 5) )

G.add_node("Kyrie", pos = (5, 5) )

G.add_node("Eleison", pos = (6, 5) )

G.add_node("Christe", pos = (7, 5) )

G.add_node("Yhwh", pos = (1.4, 4) )

G.add_node("Father", pos = (2.8, 4) )

G.add_node("Son", pos = (4.2, 4) )

G.add_node("Holy", pos = (5.6, 4) )

G.add_node("Literal", pos = (2.1, 3) )

G.add_node("Methaph", pos = (4.9, 3) )

G.add_node("Covenat", pos = (1.4, 2) )

G.add_node("Lamb", pos = (2.8, 2) )

G.add_node("Wine", pos = (4.2, 2) )

G.add_node("Bread", pos = (5.6, 2) )

G.add_node("Ark", pos = (0, 1) )

G.add_node("War", pos = (1, 1) )

G.add_node("Requite", pos = (2, 1) )

G.add_node("Discord", pos = (3, 1) )

G.add_node("Forever", pos = (4, 1) )

G.add_node("God", pos = (5, 1) )

G.add_node("With", pos = (6, 1) )

G.add_node("Tobe", pos = (7, 1) )

G.add_edges_from([ ("Cosmos ", "Yhwh"), ("Cosmos ", "Father"), ("Cosmos ", "Son"), ("Cosmos ", "Holy")])

G.add_edges_from([ ("Quake", "Yhwh"), ("Quake", "Father"), ("Quake", "Son"), ("Quake", "Holy")])

G.add_edges_from([ ("Flood", "Yhwh"), ("Flood", "Father"), ("Flood", "Son"), ("Flood", "Holy")])

G.add_edges_from([ ("Plague", "Yhwh"), ("Plague", "Father"), ("Plague", "Son"), ("Plague", "Holy")])

G.add_edges_from([ ("Vexed", "Yhwh"), ("Vexed", "Father"), ("Vexed", "Son"), ("Vexed", "Holy")])

G.add_edges_from([ ("Kyrie", "Yhwh"), ("Kyrie", "Father"), ("Kyrie", "Son"), ("Kyrie", "Holy")])

G.add_edges_from([ ("Eleison", "Yhwh"), ("Eleison", "Father"), ("Eleison", "Son"), ("Eleison", "Holy")])

G.add_edges_from([ ("Christe", "Yhwh"), ("Christe", "Father"), ("Christe", "Son"), ("Christe", "Holy")])

G.add_edges_from([ ("Yhwh", "Literal"), ("Yhwh", "Methaph")])

G.add_edges_from([ ("Father", "Literal"), ("Father", "Methaph")])

G.add_edges_from([ ("Son", "Literal"), ("Son", "Methaph")])

G.add_edges_from([ ("Holy", "Literal"), ("Holy", "Methaph")])

G.add_edges_from([ ("Literal", "Covenat"), ("Literal", "Lamb"), ("Literal", "Wine"), ("Literal", "Bread")])

G.add_edges_from([ ("Methaph", "Covenat"), ("Methaph", "Lamb"), ("Methaph", "Wine"), ("Methaph", "Bread")])

G.add_edges_from([ ("Covenat", "Ark"), ("Covenat", "War"), ("Covenat", "Requite"), ("Covenat", "Discord")])

G.add_edges_from([ ("Covenat", "Forever"), ("Covenat", "God"), ("Covenat", "With"), ("Covenat", "Tobe")])

G.add_edges_from([ ("Lamb", "Ark"), ("Lamb", "War"), ("Lamb", "Requite"), ("Lamb", "Discord")])

G.add_edges_from([ ("Lamb", "Forever"), ("Lamb", "God"), ("Lamb", "With"), ("Lamb", "Tobe")])

G.add_edges_from([ ("Wine", "Ark"), ("Wine", "War"), ("Wine", "Requite"), ("Wine", "Discord")])

G.add_edges_from([ ("Wine", "Forever"), ("Wine", "God"), ("Wine", "With"), ("Wine", "Tobe")])

G.add_edges_from([ ("Bread", "Ark"), ("Bread", "War"), ("Bread", "Requite"), ("Bread", "Discord")])

G.add_edges_from([ ("Bread", "Forever"), ("Bread", "God"), ("Bread", "With"), ("Bread", "Tobe")])

#G.add_edges_from([("H11", "H21"), ("H11", "H22"), ("H12", "H21"), ("H12", "H22")])

#G.add_edges_from([("H21", "Y"), ("H22", "Y")])

nx.draw(G,

nx.get_node_attributes(G, 'pos'),

with_labels=True,

font_weight='bold',

node_size = 3000,

node_color = "lightblue",

linewidths = 3)

ax= plt.gca()

ax.collections[0].set_edgecolor("#000000")

ax.set_xlim([-.5, 7.5])

ax.set_ylim([.5, 5.5])

plt.show()

plague, vexation

service, evaluation

codify, basics

decode, telemedicine

happyness, pondereplay

387. badgyalriri#

now i’ve just watched pon de replay video and was struck at 2:30/3:37

does beyoncé have any formation-style choreography like this before 2005?

she certainly has a lot of it later; if none before 2005, then she totally stole the idea from badgalriri

of course we are not suggesting that originality is a thing; we’re merely tracking the creative process

388. teach!#

man/data

artist/encode

work/code

critic/decode

spectator/knowledge

path

maze

labyrinth

darkness

forward

light

guide

shepherd

annuŋŋamya

decisions

policy

guidelines

rx

389. beyoncé#

she codifies a lot

feminism

black power

empowerment

will-to-power

kulture

slay ⚔

390. scalability#

code -> decode

that is what is scalable

and is the very essence of progress

391. growth#

autoencode

scale

grow

dominate

legacy

392. funny#

mighty aphrodite

essence of great art:

we suffer and perish so that poets may not lack material

393. stata#

vs @code

code editing

redefined?

even4stata?

investigate!

394. encode#

what do the following encode?

clearly they do, given their growth markets and their presence in my mature life beyond 35yo

weihenstephaner hefe weissbier

westmalle dubbel, tripel

duvel

orval

bozal

glenfiddich 15yo solera

lagavulin 16yo

395. kyrie#

k. 626

a whole ‘nother mozart!

the gods can’t let such a fella into old age

396. instrumentation#

how light the instrumentation of the requiem is!

mozart only presents the essence

it’s all encoded here

06/03/2023#

397. air#

no coincidence

year of 23

film release

398. conventions#

These have been imposed on me by an apparent restraint. When I attempted systematized workdir (340y23s) and repo (600y23s) names, it just didn’t work: the process was arrested at git push or ghp-import. But simple non-numeric names (workdir: summer & repo: livre) worked seamlessly. And so, just like Apple names its macOS versions after some aspect of California’s natural resources (mountains, parks, wildlife), I’ll name my workdir and repo versions each year after some theme and variation (workdir: season & repo: book).

usernames

jhustata

jhutrc

jhusurgery

workdir

seasons

english

spanish

italian

french

german

zulu

yoruba

lusoga

repo

book

english

spanish

italian

french

german

zulu

yoruba

lusoga

399. wayne#

wed june 7 is last day of atc

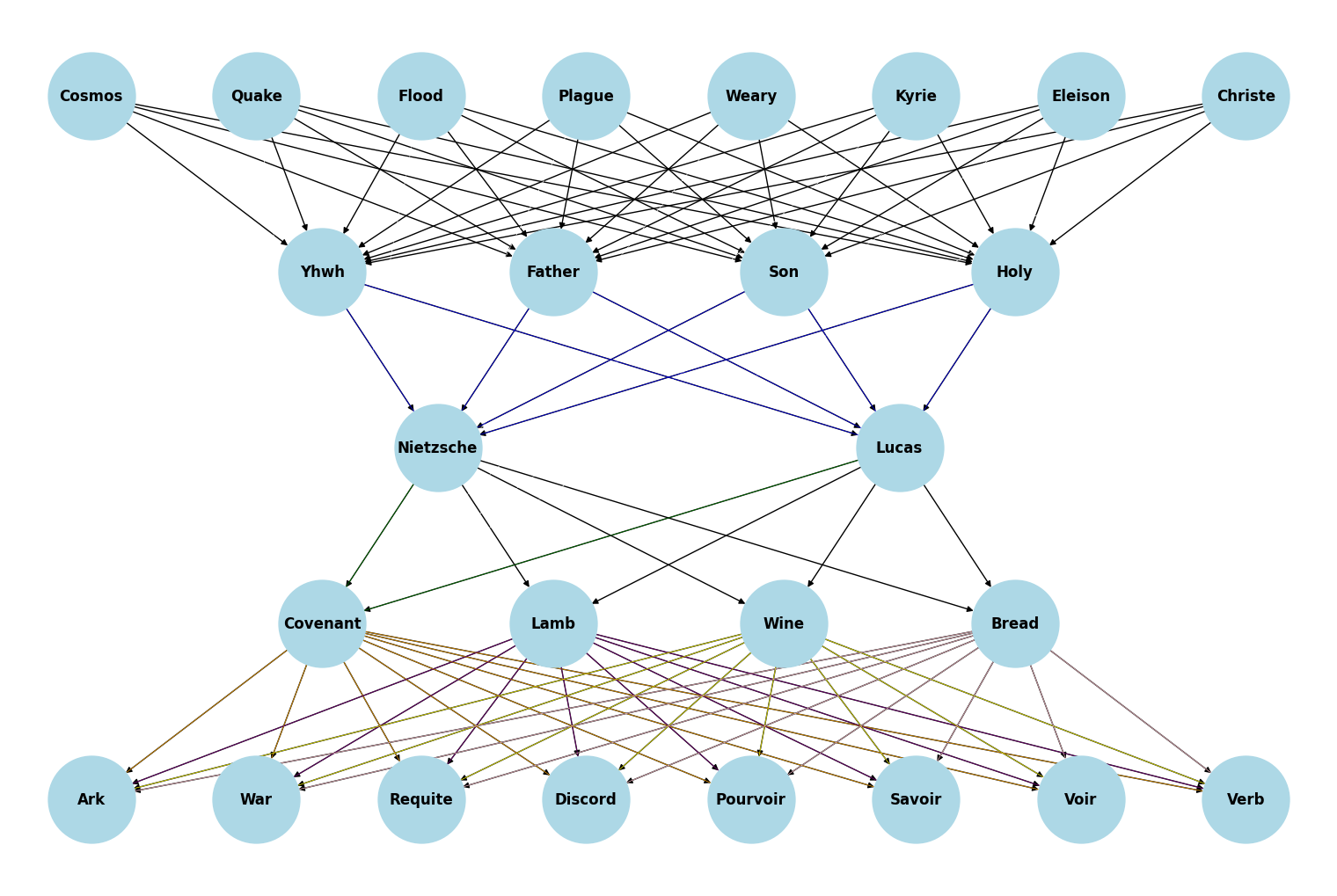

fly out of lax on thursday

or perhaps out of john wayne?

fa parents visiting currently

but apparently they’ll love me

then tue june 6 is for amy: 18:00-18:10

and mon june 5 is for tijuana

so last sunday flight out west?

400. paulo#

must leave nj june 8th

so may leave ca june 7th

but really tijuana is june 5th

and so ok to leave june 6th

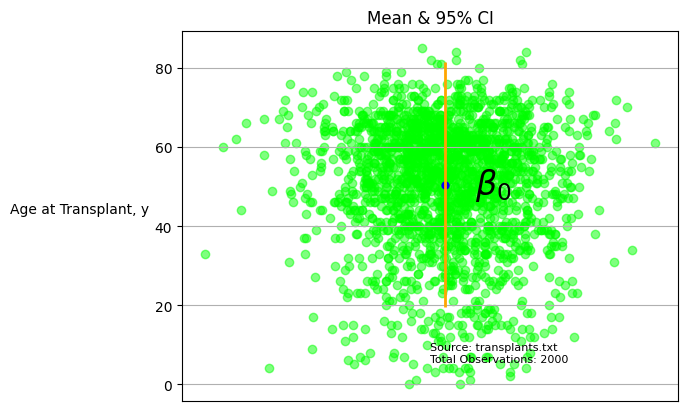

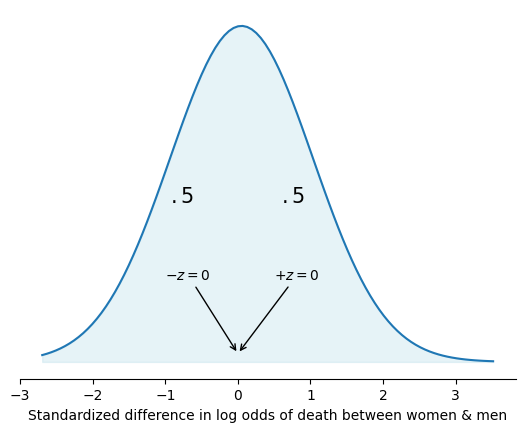

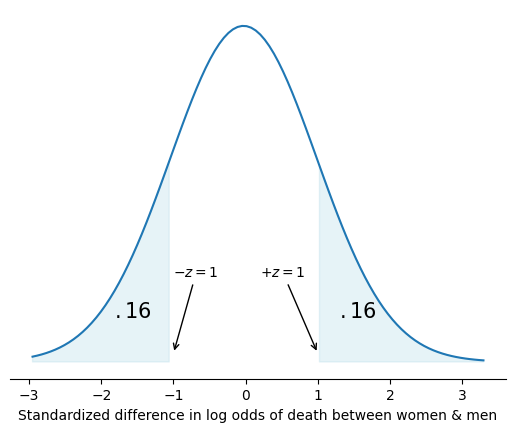

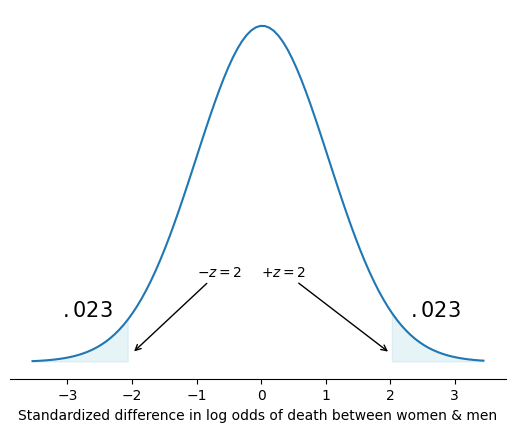

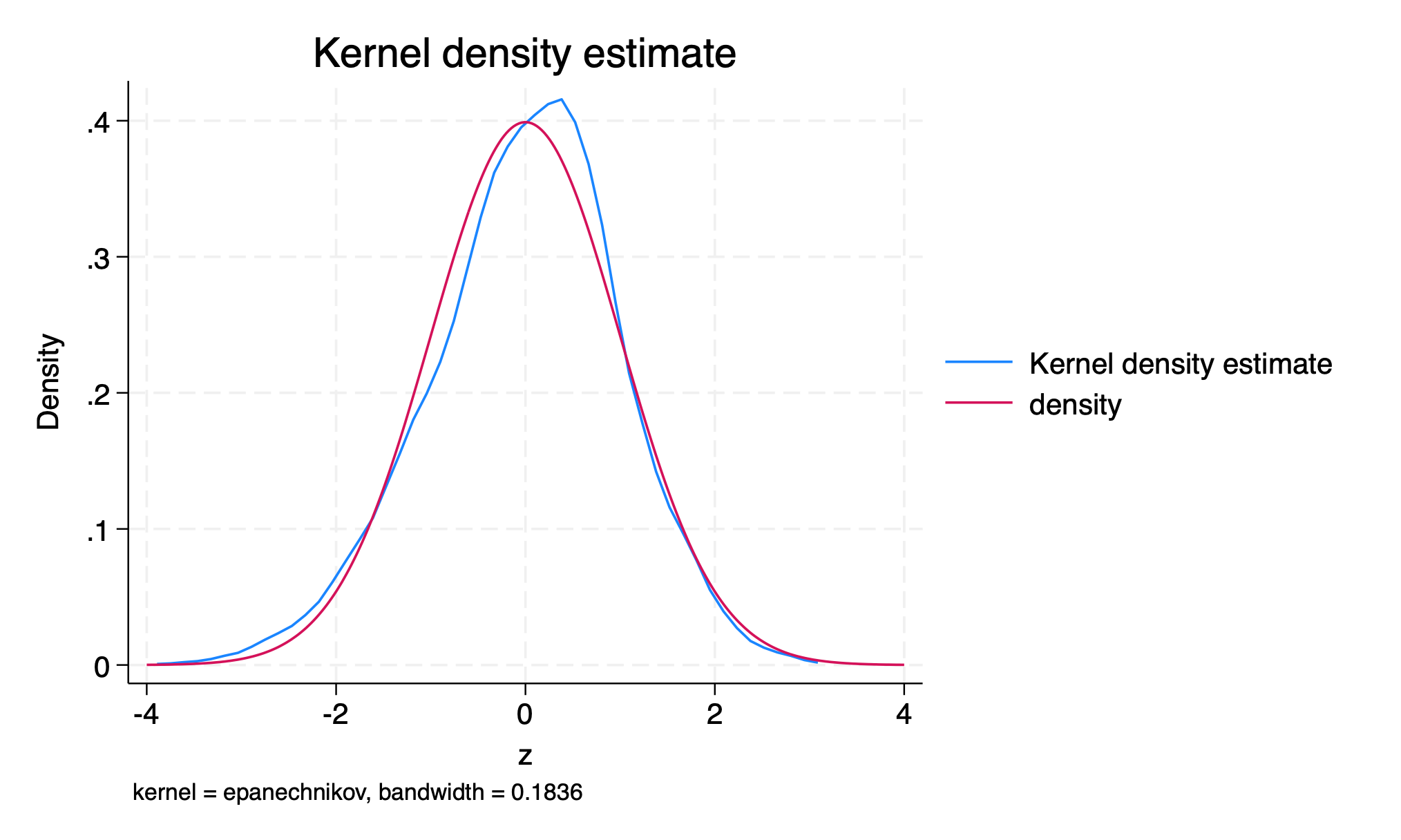

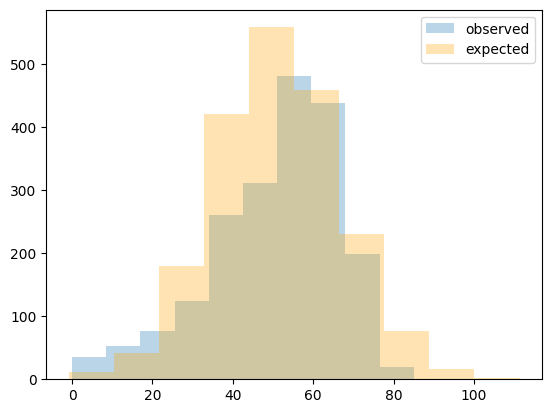

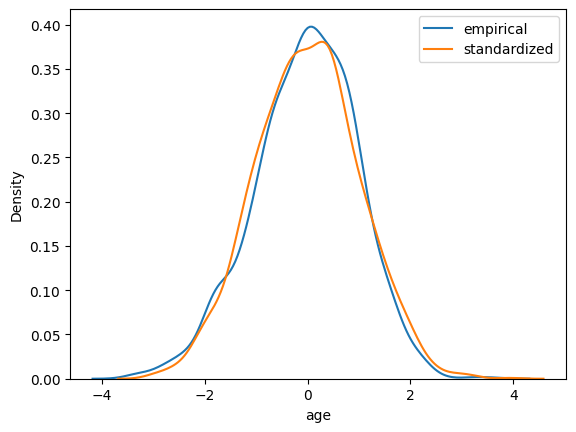

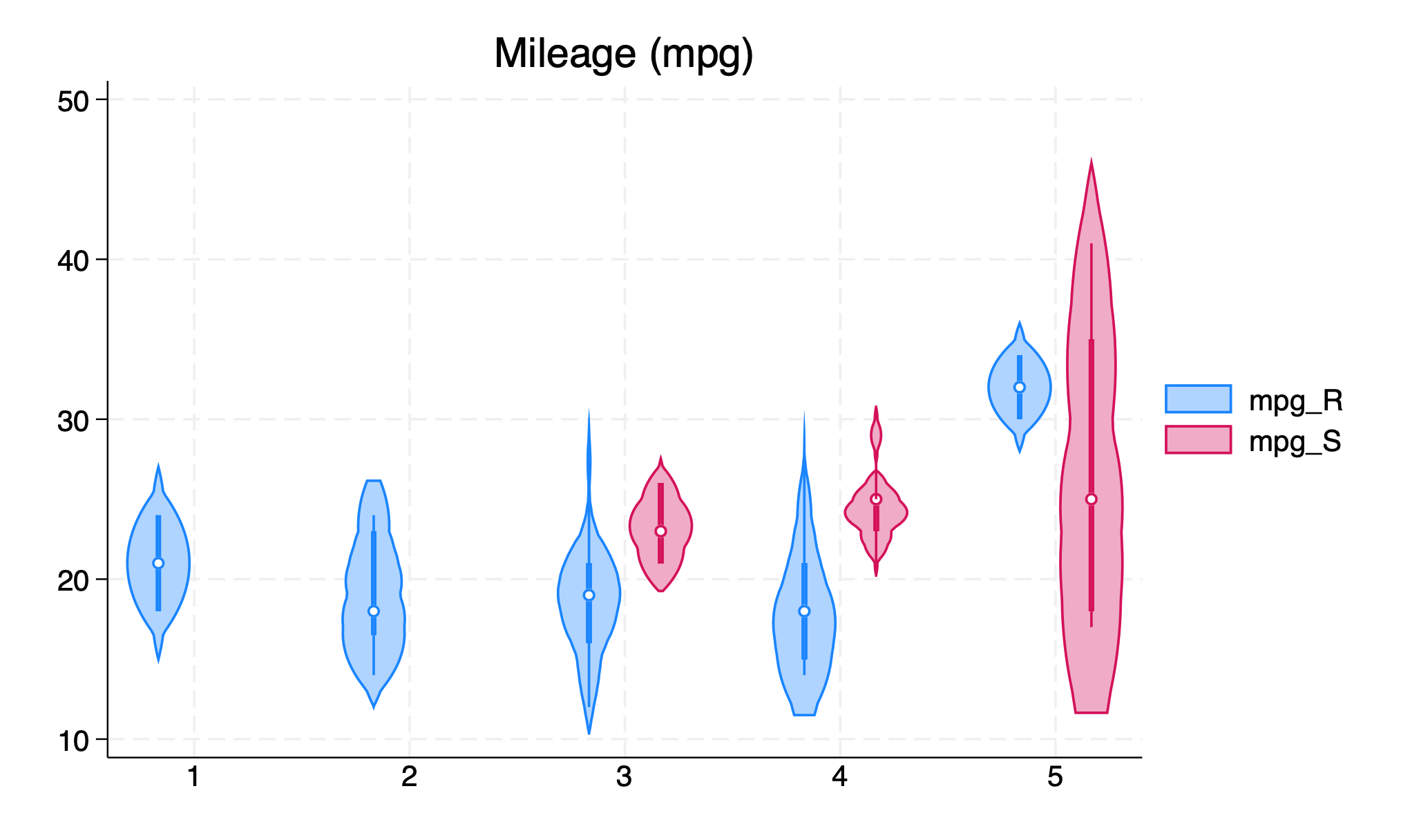

let’s spend nites of june 4th, 5th

departure on 6th for both of us to md/n

wild card: stay 6th + john wayne departure

401. trip#

delta #F6JLMG

indigo #9140649956387

402. sq-foot#

indigo

350

waverly

700

other

1500

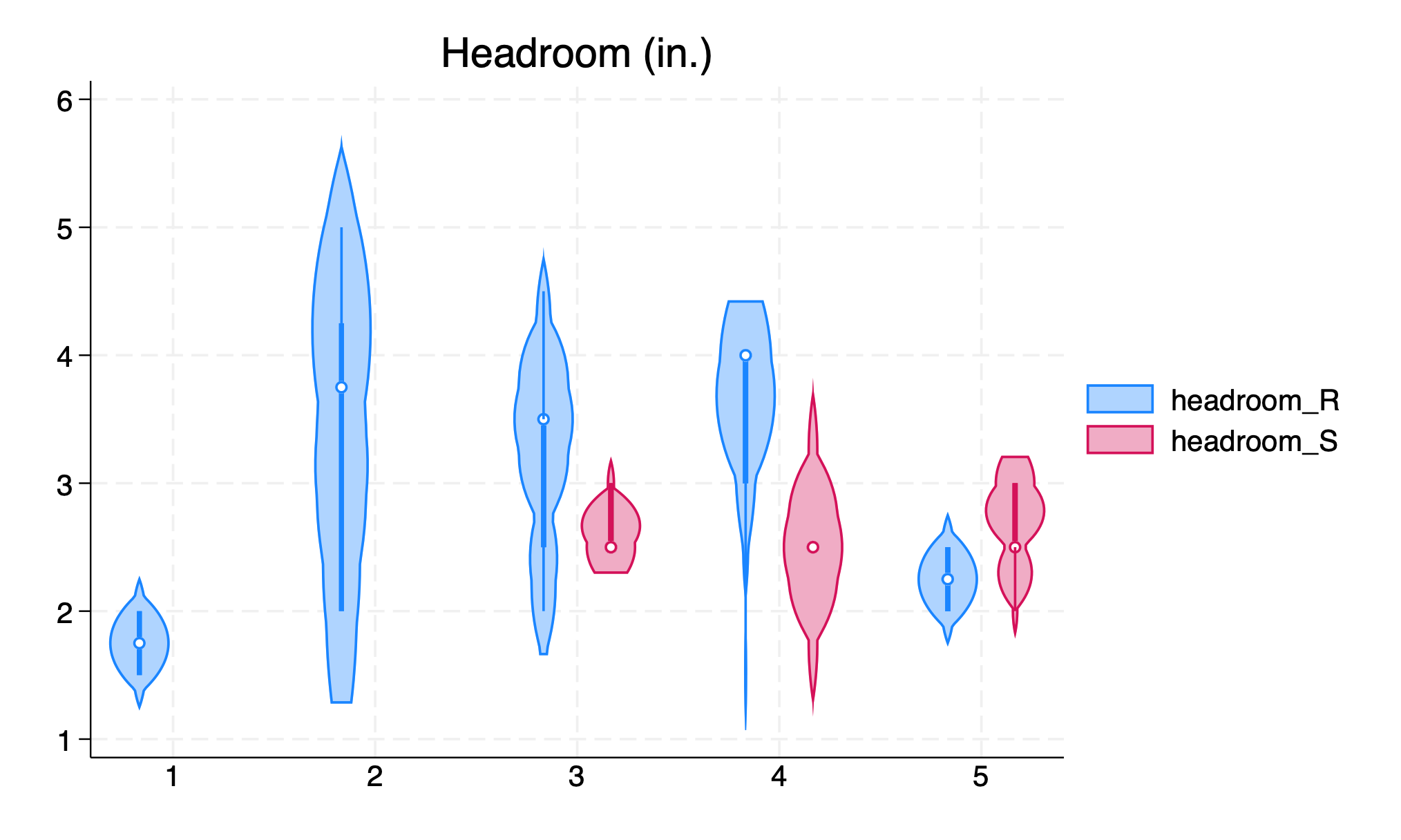

403. woody allen#

cacophony

ensemble cast of a-listers

one might codify the essence of human life

he’s still a type-i artist:

federico fellini’s catholicism

ingmar bergman’s nordic agnosticism

judaism: ancient, modern, new yorker

greek mythology, philosophy, talmud

uses these moulds for dramatis personae

as a template for neurotic nyc types

of course his greatest success is navel-gazing

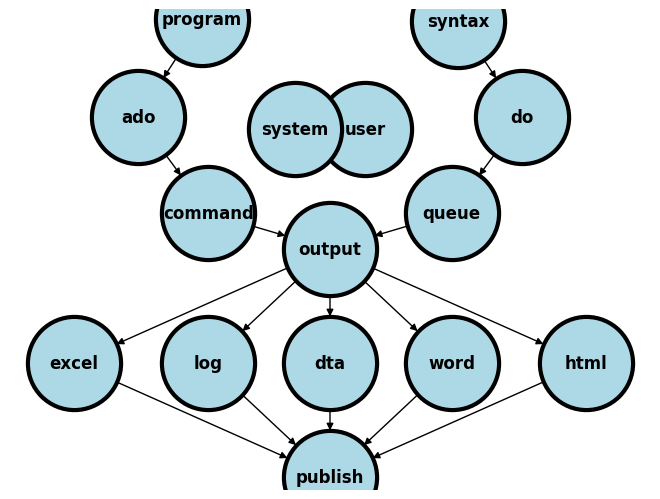

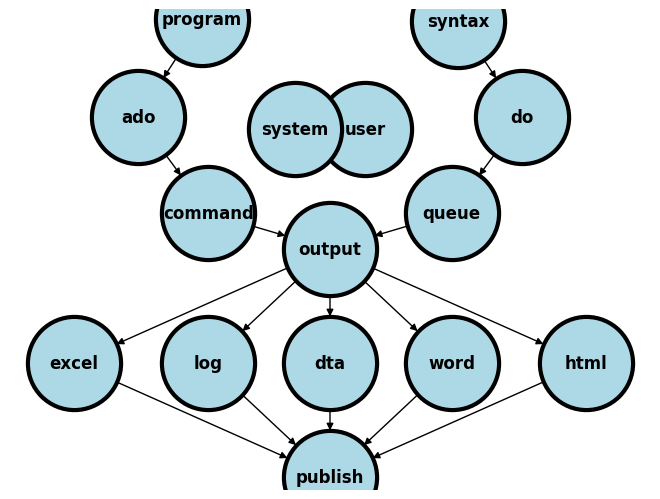

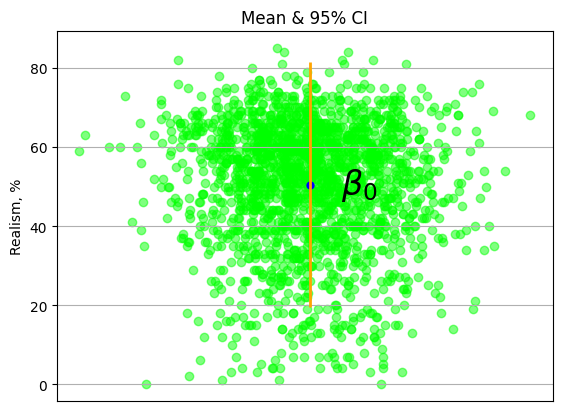

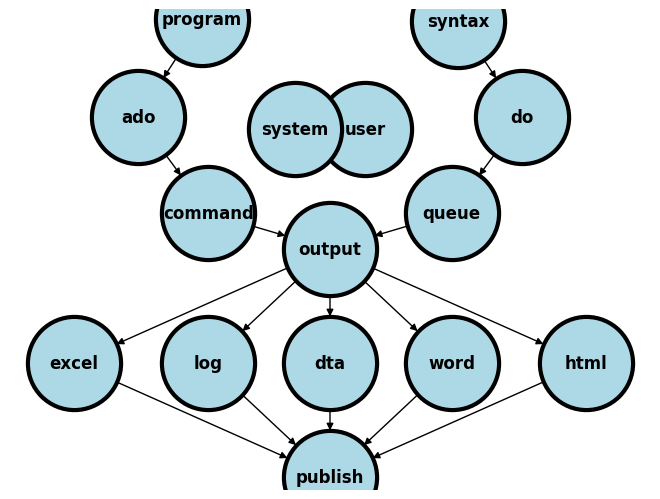

because he has lots of material from his own existential pangs

404. ghp-import#

when stuck at git push of ghp-import

diagnose cause: often corrupted image

so get rid of it and empty cloned repo

you’d

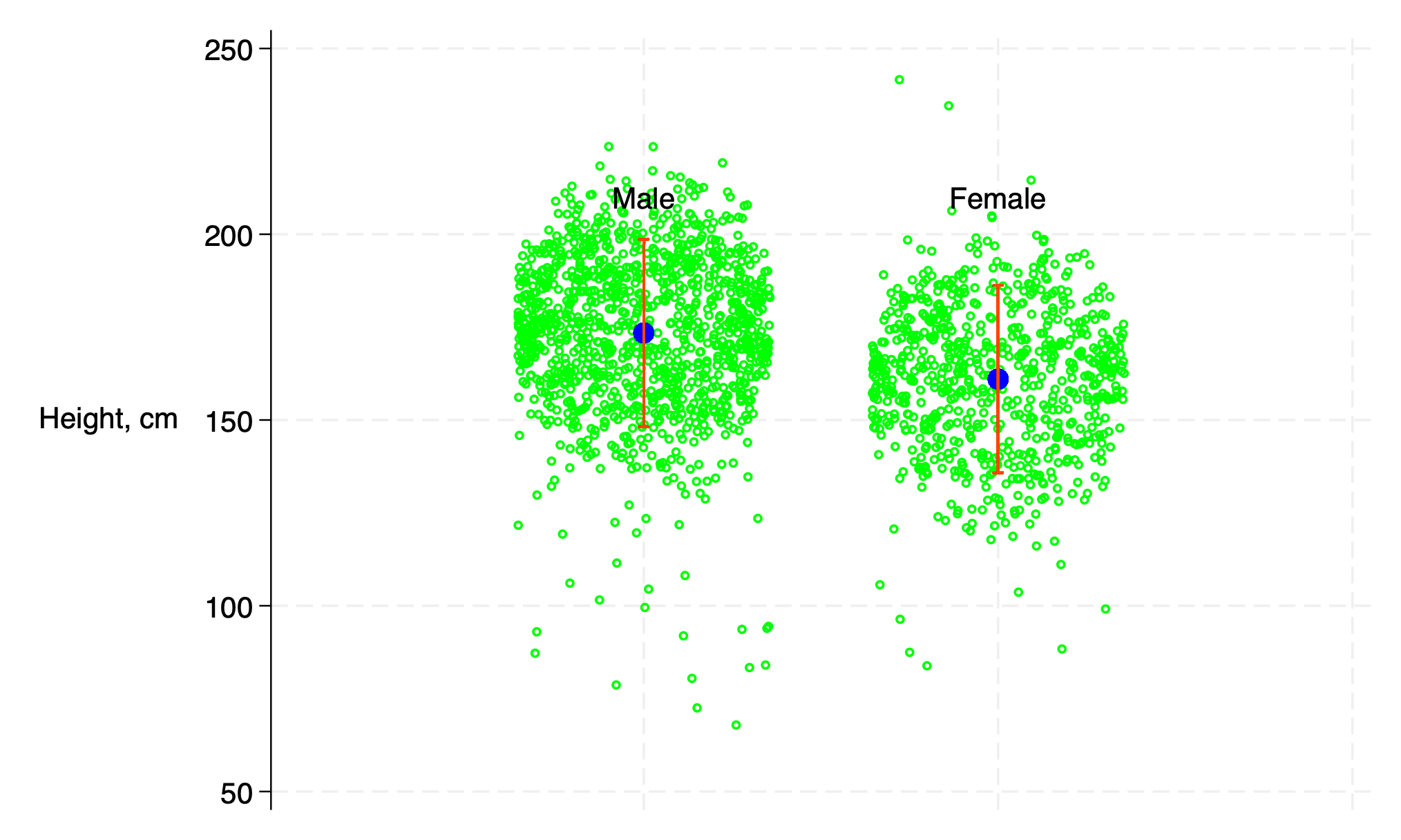

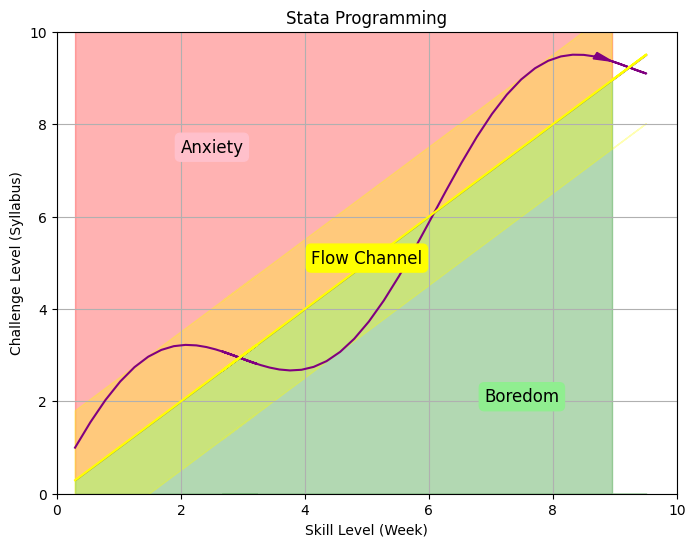

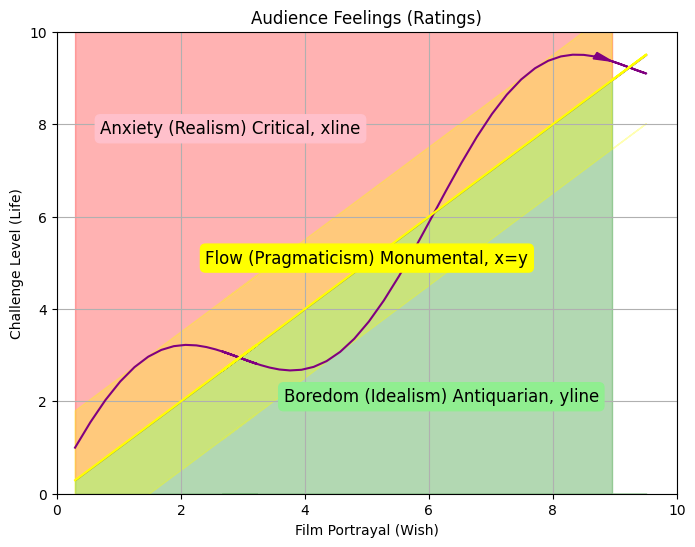

cp -r jupyter-book/* repo anyways

this may spare you untold misery
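one way to find a suspect image before running ghp-import is to compare each file’s first bytes against the signature its extension implies. a minimal sketch, assuming the book sits in a local folder (`find_suspect_images` and the signature table are illustrative names, not part of git or ghp-import). it only catches empty files and wrong headers, not every kind of corruption:

```python
from pathlib import Path

# Leading bytes ("magic numbers") for a few common image formats.
SIGNATURES = {
    ".png": (b"\x89PNG\r\n\x1a\n",),
    ".jpg": (b"\xff\xd8\xff",),
    ".jpeg": (b"\xff\xd8\xff",),
    ".gif": (b"GIF87a", b"GIF89a"),
}


def find_suspect_images(root):
    """Return image paths under root that are empty or whose header
    does not match the signature expected for their extension."""
    suspects = []
    for path in sorted(Path(root).rglob("*")):
        expected = SIGNATURES.get(path.suffix.lower())
        if expected is None or not path.is_file():
            continue  # not a file type this sketch knows how to check
        with path.open("rb") as handle:
            head = handle.read(8)
        if not any(head.startswith(sig) for sig in expected):
            suspects.append(path)
    return suspects
```

something like `for p in find_suspect_images("jupyter-book"): print(p)` would then list candidates to remove before copying into the cloned repo.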

405. love#

who can i run to?

xscape asks the question!

bewildering array of emotions

and potential guarantor

of peace, calm

what else is this but dimensionality reduction?

from \(\infty\) -> X=1?

dull!

06/04/2023#

406. annuŋŋamya#

original version

by ayf’s

jessica mirembe

407. neat#

we know

fifth harmony

not too shabby

but wrong reason

to get entire album!

408. r.kelly#

if you dream

clearly inspired

by storm is over by the r in r&b

probably written by tank and j valentine

tank

tyrese

toni braxton

jordin sparks

omarion

faith evans

jojo

charlie wilson

tamar braxton

steve russell

rather beautiful song

sound track like its inspiration

06/07/2023#

409. feedback#

be sure to analyse it

i didn’t know how to access it

now that i see the 2021/2022 feedback

clear that i perpetuated the issues and added more

this has been all too little, too late for the graduates

however, i’ll be responsive even as early as this summer

gentle learning curve

organized content

relevant to homework

early feedback

competent teaching assistants

avoid humor since it’s really mockery

difference between tragedy & comedy 🎭?

we don’t empathize with the victim in comedy

yet we do with the one in tragedy

a student is the victim

students recommend that i get more professional

one resource for that

410. summary#

“Like all people who try to exhaust a subject, he exhausted his listeners.”

― Oscar Wilde, The Picture of Dorian Gray

411. boring#

in-class tasks

write program

that does …

assistants present

use python figure

412. engaging#

introduce essence of idea in 10min

practice that in-class

then link that to homework

perhaps reverse engineer process

start with homework & think: what skill

class is about providing technical skill to do hw

413. man#

data - man delights, not me

encode - invariable progression to death

code - solomon, hamlet, macbeth, elementary particles

decode - detraction will not suffer it (delights)

represent - and so i end my catechism

414. ysidro#

uber to san ysidro

walk across border

will fill out a brief form

option: mercado m. hidalgo

then uber to rosarito

maybe do revolucion at nite

spend additional nite?

caesars restaurant next day

415. catechism#

import networkx as nx

import matplotlib.pyplot as plt

import numpy as np

import sklearn as skl

#plt.figure(figsize=[2, 2])

G = nx.DiGraph()

G.add_node("Cosmos ", pos = (0, 5) )

G.add_node("Quake", pos = (1, 5) )

G.add_node("Flood", pos = (2, 5) )

G.add_node("Plague", pos = (3, 5) )

G.add_node("Vexed", pos = (4, 5) )

G.add_node("Kyrie", pos = (5, 5) )

G.add_node("Eleison", pos = (6, 5) )

G.add_node("Christe", pos = (7, 5) )

G.add_node("Yhwh", pos = (1.4, 4) )

G.add_node("Father", pos = (2.8, 4) )

G.add_node("Son", pos = (4.2, 4) )

G.add_node("Holy", pos = (5.6, 4) )

G.add_node("Q", pos = (2.1, 3) )

G.add_node("A", pos = (4.9, 3) )

G.add_node("Covenat", pos = (1.4, 2) )

G.add_node("Lamb", pos = (2.8, 2) )

G.add_node("Wine", pos = (4.2, 2) )

G.add_node("Bread", pos = (5.6, 2) )

G.add_node("Ark", pos = (0, 1) )

G.add_node("War", pos = (1, 1) )

G.add_node("Requite", pos = (2, 1) )

G.add_node("Discord", pos = (3, 1) )

G.add_node("Forever", pos = (4, 1) )

G.add_node("God", pos = (5, 1) )

G.add_node("With", pos = (6, 1) )

G.add_node("Tobe", pos = (7, 1) )

G.add_edges_from([ ("Cosmos ", "Yhwh"), ("Cosmos ", "Father"), ("Cosmos ", "Son"), ("Cosmos ", "Holy")])

G.add_edges_from([ ("Quake", "Yhwh"), ("Quake", "Father"), ("Quake", "Son"), ("Quake", "Holy")])

G.add_edges_from([ ("Flood", "Yhwh"), ("Flood", "Father"), ("Flood", "Son"), ("Flood", "Holy")])

G.add_edges_from([ ("Plague", "Yhwh"), ("Plague", "Father"), ("Plague", "Son"), ("Plague", "Holy")])

G.add_edges_from([ ("Vexed", "Yhwh"), ("Vexed", "Father"), ("Vexed", "Son"), ("Vexed", "Holy")])

G.add_edges_from([ ("Kyrie", "Yhwh"), ("Kyrie", "Father"), ("Kyrie", "Son"), ("Kyrie", "Holy")])

G.add_edges_from([ ("Eleison", "Yhwh"), ("Eleison", "Father"), ("Eleison", "Son"), ("Eleison", "Holy")])

G.add_edges_from([ ("Christe", "Yhwh"), ("Christe", "Father"), ("Christe", "Son"), ("Christe", "Holy")])

G.add_edges_from([ ("Yhwh", "Q"), ("Yhwh", "A")])

G.add_edges_from([ ("Father", "Q"), ("Father", "A")])

G.add_edges_from([ ("Son", "Q"), ("Son", "A")])

G.add_edges_from([ ("Holy", "Q"), ("Holy", "A")])

G.add_edges_from([ ("Q", "Covenat"), ("Q", "Lamb"), ("Q", "Wine"), ("Q", "Bread")])

G.add_edges_from([ ("A", "Covenat"), ("A", "Lamb"), ("A", "Wine"), ("A", "Bread")])

G.add_edges_from([ ("Covenat", "Ark"), ("Covenat", "War"), ("Covenat", "Requite"), ("Covenat", "Discord")])

G.add_edges_from([ ("Covenat", "Forever"), ("Covenat", "God"), ("Covenat", "With"), ("Covenat", "Tobe")])

G.add_edges_from([ ("Lamb", "Ark"), ("Lamb", "War"), ("Lamb", "Requite"), ("Lamb", "Discord")])

G.add_edges_from([ ("Lamb", "Forever"), ("Lamb", "God"), ("Lamb", "With"), ("Lamb", "Tobe")])

G.add_edges_from([ ("Wine", "Ark"), ("Wine", "War"), ("Wine", "Requite"), ("Wine", "Discord")])

G.add_edges_from([ ("Wine", "Forever"), ("Wine", "God"), ("Wine", "With"), ("Wine", "Tobe")])

G.add_edges_from([ ("Bread", "Ark"), ("Bread", "War"), ("Bread", "Requite"), ("Bread", "Discord")])

G.add_edges_from([ ("Bread", "Forever"), ("Bread", "God"), ("Bread", "With"), ("Bread", "Tobe")])

#G.add_edges_from([("H11", "H21"), ("H11", "H22"), ("H12", "H21"), ("H12", "H22")])

#G.add_edges_from([("H21", "Y"), ("H22", "Y")])

nx.draw(G,

nx.get_node_attributes(G, 'pos'),

with_labels=True,

font_weight='bold',

node_size = 3000,

node_color = "lightblue",

linewidths = 3)

ax= plt.gca()

ax.collections[0].set_edgecolor("#000000")

ax.set_xlim([-.5, 7.5])

ax.set_ylim([.5, 5.5])

plt.show()
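the figure code above spells out every node and edge by hand. since each layer is fully connected to the next, the same structure can be generated from five lists. a minimal sketch, assuming that reading of the graph is right (`LAYERS`, `X_COORDS`, `build_layers` and `draw` are names invented here; labels are kept exactly as spelled above, and the axis tweaks are left out):

```python
from itertools import product

# (y, labels) per layer, copied from the figure code above.
LAYERS = [
    (5, ["Cosmos ", "Quake", "Flood", "Plague", "Vexed", "Kyrie", "Eleison", "Christe"]),
    (4, ["Yhwh", "Father", "Son", "Holy"]),
    (3, ["Q", "A"]),
    (2, ["Covenat", "Lamb", "Wine", "Bread"]),
    (1, ["Ark", "War", "Requite", "Discord", "Forever", "God", "With", "Tobe"]),
]
# x-coordinates used above, keyed by the number of nodes in a layer.
X_COORDS = {8: [0, 1, 2, 3, 4, 5, 6, 7], 4: [1.4, 2.8, 4.2, 5.6], 2: [2.1, 4.9]}


def build_layers(layers=LAYERS):
    """Return (positions, edges) for a fully connected layered graph."""
    positions = {}
    for y, names in layers:
        for x, name in zip(X_COORDS[len(names)], names):
            positions[name] = (x, y)
    edges = []
    # Connect every node in a layer to every node in the layer below it.
    for (_, upper), (_, lower) in zip(layers, layers[1:]):
        edges.extend(product(upper, lower))
    return positions, edges


def draw(positions, edges):
    """Draw the graph; requires networkx and matplotlib to be installed."""
    import matplotlib.pyplot as plt
    import networkx as nx

    G = nx.DiGraph()
    G.add_nodes_from(positions)
    G.add_edges_from(edges)
    nx.draw(G, positions, with_labels=True, font_weight="bold",
            node_size=3000, node_color="lightblue", linewidths=3)
    plt.show()
```

`draw(*build_layers())` would render it; swapping "Q" and "A" for "Literal" and "Methaph" in `LAYERS` gives the earlier version of the figure.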

416. autoencoder#

nietzsche — he misunderstood the effects of “autoencoding” when he said this: sebastian bach.—in so far as we do not hear bach’s music as perfect and experienced connoisseurs of counterpoint and all the varieties of the fugal style (and accordingly must dispense with real artistic enjoyment), we shall feel in listening to his music—in goethe’s magnificent phrase—as if “we were present at god’s creation of the world.” in other words, we feel here that something great is in the making but not yet made—our mighty modern music, which by conquering nationalities, the church, and counterpoint has conquered the world. in bach there is still too much crude christianity, crude germanism, crude scholasticism. he stands on the threshold of modern european music, but turns from thence to look at the middle ages.

bach is code, essence, from whence the well-tempered clavier, all keys, and all music may be derived. even he occasionally wrote sweet decoded music, as we see in his arias

that said, goethe & nietzsche are generally right in their assessment

417. jesus#

messianic complex

when you figure out

essence of man’s predicament

and offer yourself as palliative

call it altruism, it’s sweet

variants?

parent

spouse

teacher

employer

provider (service)

firm

government

culture

religion

cause

king caesar

how do artists fit in? they teach us

precedence

convention

restraint

teach us dimensionality reduction

lead us towards

?X=1

and that’s how music is the greatest of man’s inventions: how man reduces all cosmic noise to 12 relative frequencies! or to one diatonic key (wherein a chromatic scale of 24 frequencies fit), on a well-tempered clavier!!!

note: one can derive 23 of the 24 relative frequencies given only one of them (typically the tonic). so it’s really one degree of freedom: X=1 (without the ?)
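the “one degree of freedom” point can be sketched numerically. this assumes equal temperament with twelve steps per octave (a modern simplification; the well-tempered tunings of bach’s day were not exactly equal) and a tonic of 440 hz picked only for illustration. `scale_from_tonic` and its `steps` parameter are hypothetical names:

```python
def scale_from_tonic(tonic_hz, steps=12):
    """Frequencies of one octave, each a ratio 2**(k/steps) above the tonic."""
    return [tonic_hz * 2 ** (k / steps) for k in range(steps)]


frequencies = scale_from_tonic(440.0)
# The ratios do not depend on which tonic was chosen.
ratios = [f / frequencies[0] for f in frequencies]

for k, (f, r) in enumerate(zip(frequencies, ratios)):
    print(f"step {k:2d}: {f:8.2f} Hz  (ratio {r:.4f})")
```

whatever value is passed for `steps`, the relative frequencies are fixed in advance and only the tonic is free to vary.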

418. crave#

dimensionality reduction

because we are low energy

frailty symptom of human race

419. antithesis#

dionysus visited his uncle hades

found achilles regretting his heroism

looks like earthly achievements are not

currency in hades (no time-varying weight neither)

420. tribe#

strength of a tribe

deduced from number of gods

in their mythology

by extension, the decline

of a tribe may be deduced from

a trend towards fewer explanatory factors

here monotheism represents the very weakest

most decadent state; by extension, the most intelligent, resentful

using this formula one may productively launch into genealogy of morality

421. s&p#

sombrero

poncho

422. autoencode#

life

bible

mass

magisterium

catholicism

why romans enduringly more successful than greco-judaism

going purely by numbers, territory, and calendar

dimensionality reduction

something every [weak] man craves

thus power to collect indulgences

conquered hearts are easier to manage

otherwise stronger armies necessary, which romans also had

but found this a more efficient system

423. sandiego#

market & 9th

is my kinda joint

so many reasons

424. stream#

of consciousness

sensory impressions

incomplete ideas

unusual syntax

rough grammar

my style for 340.600

according to one student

this was no compliment

425. impressions#

sensory

data

input

system

feedback

negative

cripples

if not regulated

but ultimately corrects

output users find unhelpful

how to do it without depressing system!

be more sympathetic to the prudent stance:

hideth

426. hotel#

350 sq foot with city views

750 sq foot < my waverly joint

900 sq foot apt at waverly?

427. piers#

an unlikely topic

but great discussion

highlighting summary statistics

data

encoded

coded (\(\mu, \sigma\))

decoded

inference

anecdotes

misleading

tails of distribution

nonetheless critical info

very skewed distribution say

customer tastes have little variation

perhaps outcome of the capitalistic process

of mass producing, advertising, scale, autoencoding
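the encode/decode reading of summary statistics can be tried on a toy sample. a minimal sketch with made-up numbers (the `spend` values are invented for illustration, not data from the discussion): the sample is coded as (\(\mu, \sigma\)), and decoding asks how much of the data a normal model built from that code would expect within one \(\sigma\). with a skewed sample, the gap between model and data is the information the tail carries:

```python
import statistics

# Invented, right-skewed sample: most values cluster, one sits far in the tail.
spend = [9, 10, 10, 10, 11, 11, 12, 12, 13, 95]

mu = statistics.mean(spend)        # the "code": just two numbers
sigma = statistics.stdev(spend)
median = statistics.median(spend)

# "Decode": rebuild a normal model from (mu, sigma) and compare with the data.
model = statistics.NormalDist(mu, sigma)
expected_within = model.cdf(mu + sigma) - model.cdf(mu - sigma)
observed_within = sum(mu - sigma <= x <= mu + sigma for x in spend) / len(spend)

print(f"mean={mu:.1f}  sd={sigma:.1f}  median={median}")
print(f"within one sd: model {expected_within:.2f}, observed {observed_within:.2f}")
```

the single tail value drags the mean well above the median, so the two-number code misdescribes the typical customer even though it is a faithful summary of the whole sample.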

428. desensitization#

childhood exposures

result in robust immunity

thus treatment of offensive messengers?

even more free, at times offensive speech!

my starting point when it comes to the consideration of any issue relating to free speech is my passionate belief that the second most precious thing in life is the right to express yourself freely.

the most precious thing in life, i think, is food in your mouth and the third most precious is a roof over your head, but a fixture for me in the number 2 slot is free expression, just below the need to sustain life itself. that is because i have enjoyed free expression in this country all my professional life

\(\vdots\)

you might call the new intolerance, a new but intense desire to gag uncomfortable voices of dissent

replacement of one kind of intolerance with another \(\cdots\)

underlying prejudices, injustices or resentments are not addressed by arresting people. they are addressed by the issues being aired, argued and dealt with preferably outside the legal process. for me, the best way to increase society’s resistance to insulting or offensive speech is to allow a lot more of it.

as with childhood diseases, you can better resist those germs to which you have been exposed. we need to build our immunity to taking offence, so that we can deal with the issues that perfectly justified criticism can raise. our priority should be to deal with message, not the messenger.

president obama said in an address to the united nations, ‘laudable efforts to restrict speech can become a tool to silence critics (goal of an opposing credo, fyi) or oppress minorities (which is contextual). the strongest weapon against hateful speech is not repression, it is more speech.’

429. teaching#

no different than art

create boundaries

limit the material

don’t be exhaustive:

dr.more restraint than predecessors!

have constant feedback from students

don’t wait for course eval

that’s too little, too late

06/08/2023#

430. monica#

new york directly south of ottawa

started off with banter

about where she is from & school

feedback on the event i attended

wants to give me broader strokes on indexes

two-day event: the american dream experience

in terms of added value to my life

covering the last 30 years of my life

$15m in ug gov bonds

$250k in ml playground: controlled experiment

$XX? no clue

she’s set me on calendar for june 2024

431. analysts#

woody allen’s treatment of psychoanalysts is similar to his treatment of god: willing but not able

or perhaps that god is uninterested, maybe because also not able

but he never questions the existence of analysts: he visited one weekly for 15 years

432. tasks#

program define

syntax varlist

exit 340

display

quietly

433. veritas#

tom hanks at harvard: 19:34/22:16

one of 3-types of americans

embrace liberty & freedom for all

whineth (muscle, efferent blockage)

those who won’t

tameth (strong, unconscious type with little cognitive development)

and those who are indifferent

hideth (sensory, afferent blockade)

codified the essence of the life tom & i have lived: a sort of external validation

434. liberabit#

and there’s three types of students:

those who leverage innovation, warts & all

tameth

can enumerate instances wherein they were maligned

whineth

prudent who are trying to meet the requirements of their program

hideth

cater to all three and give them maximum value

my original preference for type-1 was an unconscious bias

435. generalize#

input

hideth

keyboard

mouse

voice

touch

processor

whineth

mainframe

desktop

laptop

cellphone

etc

output

tameth

music

pictures

documents

etc

436. versus#

437. apple#

dream it

chase it

code it

438. suigeneris#

hardware

software

services

something only apple could do

439. mac#

mac studio

plus apple monitor

incredible performance

connectivity with m2 max

developing new versions of apps

before they go live

hosting after they go live

exploring those options from ds4ph

taking demanding workflows to the next level

m-dimensional simulation is faster than ever

and may use 7 input feeds & encode them

decode and simulate in record time

440. stata#

1 tameth: workflow

2 whineth: program define

3 hideth: advanced syntax

these could represent three classes 340.600, 340.700, 340.800

one class sticks to stata programming & the program define command, syntax varlist, etc.

another class introduces advanced syntax that contributes to quality of the programs from the basic class

finally there is a class that emphasizes workflow, github, collaboration, self-publication

while i may not have time to write up three syllabuses, i could use markdown header-levels to denote these

06/09/2023#

441. apple#

lots of ai/ml lingua

only notice since ds4ph

what luck, my gtpci phd!

442. not-for-profit#

efficiency not prized

increased expenditure is the thing

this is show-cased to win yet more grants

443. psychology#

stable

erratic

pattern

444. program#

stata programming at jhu

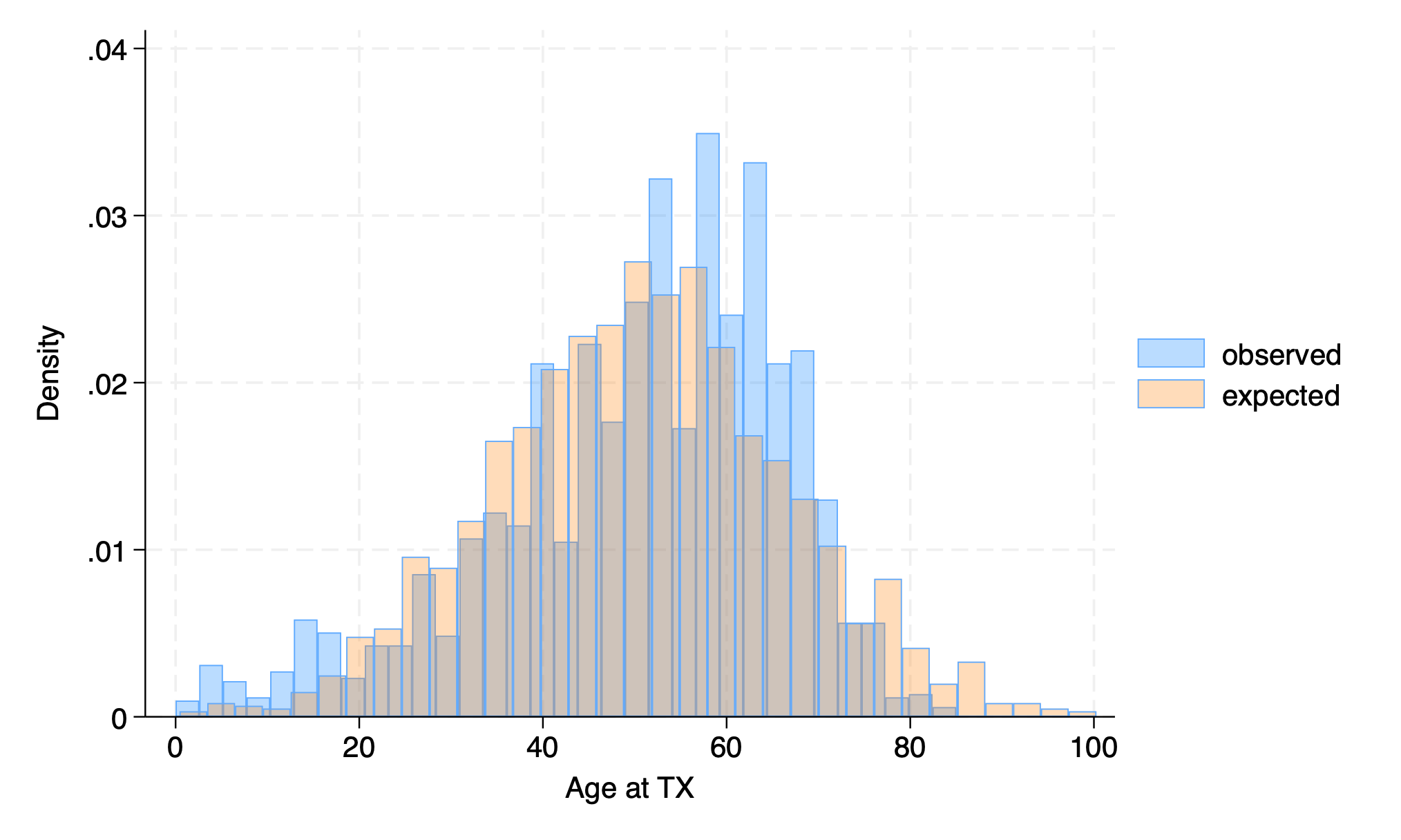

444.1 stata i (basic stata 340.600)#

program define myfirst

di "my first program"

end

myfirst

. program define myfirst

1. di "my first program"

2. end

.

. myfirst

my first program

.

end of do-file

.

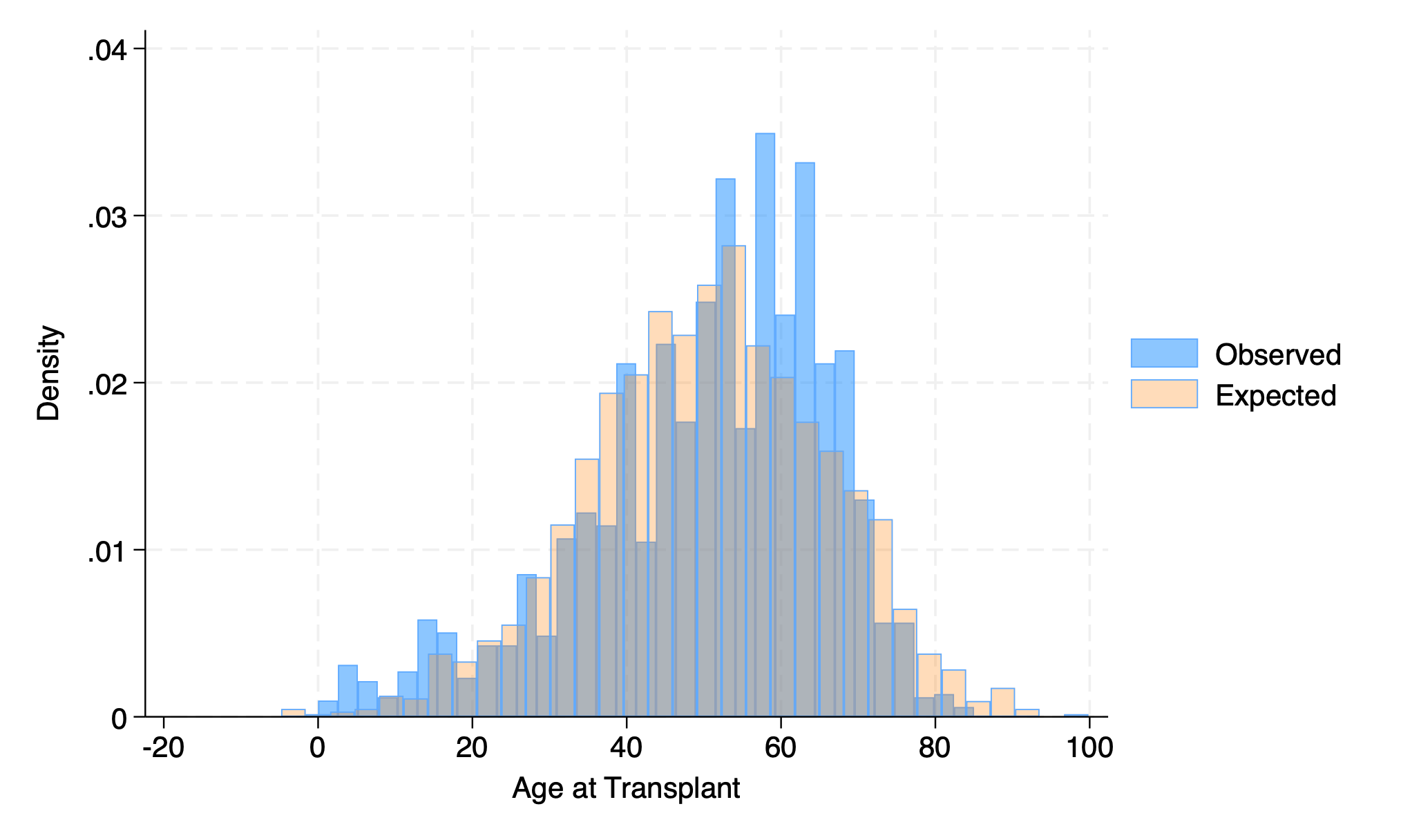

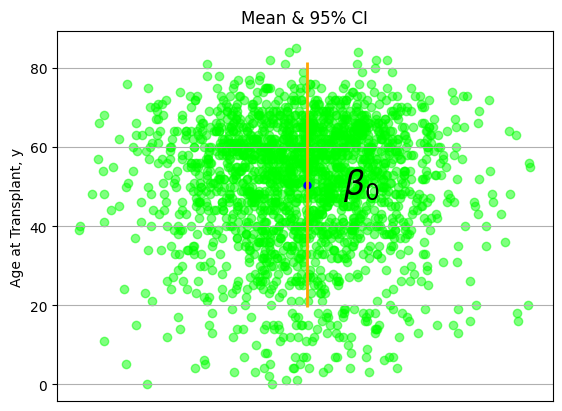

444.2 stata ii (intermediate stata 340.700)#

program define mysecond

foreach v of varlist init_age peak_pra prev female receiv {

qui sum `v', d

di r(mean) "(" r(sd) ")" ";" r(p50) "(" r(p25) "-" r(p75) ")"

}

end

clear

import delimited https://raw.githubusercontent.com/jhustata/book/main/hw1.txt

mysecond

. program define mysecond

1. foreach v of varlist init_age peak_pra prev female receiv {

2. qui sum `v', d

3. di r(mean) "(" r(sd) ")" ";" r(p50) "(" r(p25) "-" r(p75) ")"

4. }

5. end

.

.

.

. clear

. import delimited https://raw.githubusercontent.com/jhustata/book/main/hw1.txt

(encoding automatically selected: ISO-8859-1)

(8 vars, 1,525 obs)

. mysecond

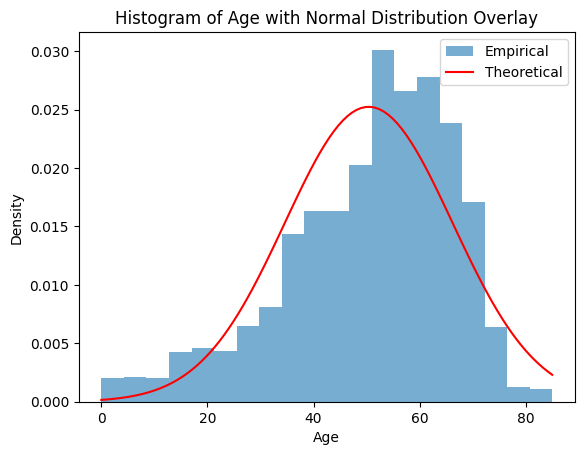

50.575743(14.524256);52.950001(40.549999-61.85)

20.011155(33.867173);0(0-28.5)

.11737705(.32197462);0(0-0)

.38688525(.48719678);0(0-1)

.35147541(.4775877);0(0-1)

.

end of do-file

.
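for readers who think in python, here is a minimal sketch of what each line of the mysecond output encodes: mean(sd);median(p25-p75). the function name `summarize` and the toy values are hypothetical, not taken from hw1.txt, and python's quartile rule is not guaranteed to match stata's `summarize, detail` on every dataset.

```python
import statistics


def summarize(values):
    """Return 'mean(sd);median(p25-p75)' for a list of numbers."""
    mean = statistics.mean(values)
    sd = statistics.stdev(values)  # sample standard deviation (n - 1 denominator)
    # quartile cut points; the default 'exclusive' method may differ from stata's rule
    p25, p50, p75 = statistics.quantiles(values, n=4)
    return f"{mean:.2f}({sd:.2f});{p50:.2f}({p25:.2f}-{p75:.2f})"


# hypothetical toy data standing in for two of the hw1 variables
toy = {
    "init_age": [34, 41, 52, 58, 63, 70],
    "female": [0, 1, 0, 0, 1, 1],
}

for name, values in toy.items():
    print(name, summarize(values))
```

rounding to two decimals is a choice made here for readability; the stata program above prints the unrounded r() results.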

444.3 stata iii (advanced stata 340.800)#

qui do https://raw.githubusercontent.com/muzaale/book/main/mysecond.ado

import delimited https://raw.githubusercontent.com/jhustata/book/main/hw1.txt

mysecond

. clear

. import delimited https://raw.githubusercontent.com/jhustata/book/main/hw1.txt

(encoding automatically selected: ISO-8859-1)

(8 vars, 1,525 obs)

. mysecond

50.575743(14.524256);52.950001(40.549999-61.85)

20.011155(33.867173);0(0-28.5)

.11737705(.32197462);0(0-0)

.38688525(.48719678);0(0-1)

.35147541(.4775877);0(0-1)

.

end of do-file

.

445. in-person#

selective feedback from the in-person students (offered me realtime, but biased feedback)

most complaints from the students who relied on recorded lectures (offered feedback that was too little, too late)

but at the end of the day there are three-types of students & i must recognize that (maybe go beyond .01 and consider…)

446. will-to-power#

is the code of life; everything else represents decoded “consequences” of this fact

there are:

worthy adversaries (type-i)

tameth;

others that whine about unfairness of competition (type-ii)

whineth; and,

many who are indifferent (type-iii)

hideth

adults will have to choose their lot at some point

447. in-other-words#

unworthy adversaries are the lot of many modern types who consider worthy types as evil incarnate (type-i)

they’re passionate about some cause & have the will-to-whine on behalf of the school of resentment (type-ii)

make no mistake: they consider indifferent types as a subtle but perhaps more problematic enemy of the two (type-iii)

448. autoencode-cipher#

human condition

shakespeare

nietzsche

philosophy

why so hard!

449. requite#

type-i people can requite (tameth, worthy adversaries, strong)

type-ii people resort to whining (whineth, weary, weak, meek, damsels-in-distress)

type-iii people are indifferent (hideth, prudent, epicurus, god, psychoanalysts, hedonists, etc.)

450. hanks#

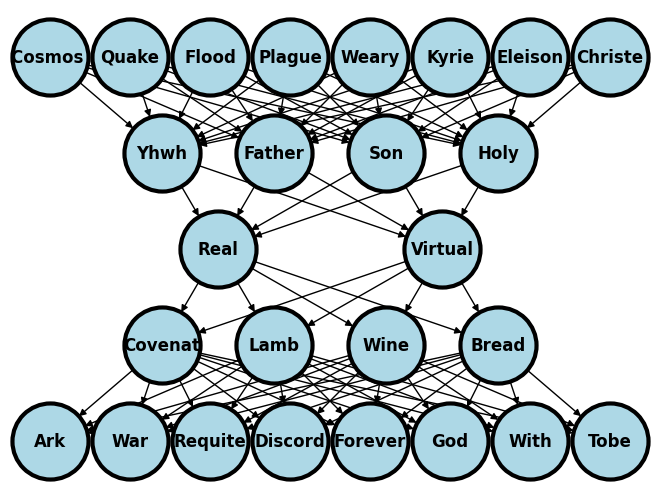

those who have grown weary become indifferent and resort to fantasy, superheroes, video games, and other mostly hedonistic distractions such as alcohol, women, and sports

451. weary#

matt 11:28

come unto me, all ye that labor and are heavy laden, and i will give you rest

psalm 23

the lord is my shepherd; i shall not want.

he maketh me to lie down in green pastures: he leadeth me beside the still waters.

he restoreth my soul: he leadeth me in the paths of righteousness for his name's sake.

yea, though I walk through the valley of the shadow of death, i will fear no evil: for thou art with me; thy rod and thy staff they comfort me.

thou preparest a table before me in the presence of mine enemies: thou anointest my head with oil; my cup runneth over.

surely goodness and mercy shall follow me all the days of my life: and I will dwell in the house of the Lord for ever.

tom hanks closed with these words:

may goodness and mercy follow you all the days, all the days, of your lives. godspeed!


import networkx as nx

import matplotlib.pyplot as plt

import numpy as np

import sklearn as skl

#plt.figure(figsize=[2, 2])

G = nx.DiGraph()

G.add_node("Cosmos ", pos = (0, 5) )

G.add_node("Quake", pos = (1, 5) )

G.add_node("Flood", pos = (2, 5) )

G.add_node("Plague", pos = (3, 5) )

G.add_node("Weary", pos = (4, 5) )

G.add_node("Kyrie", pos = (5, 5) )

G.add_node("Eleison", pos = (6, 5) )

G.add_node("Christe", pos = (7, 5) )

G.add_node("Yhwh", pos = (1.4, 4) )

G.add_node("Father", pos = (2.8, 4) )

G.add_node("Son", pos = (4.2, 4) )

G.add_node("Holy", pos = (5.6, 4) )

G.add_node("Q", pos = (2.1, 3) )

G.add_node("A", pos = (4.9, 3) )

G.add_node("Covenat", pos = (1.4, 2) )

G.add_node("Lamb", pos = (2.8, 2) )

G.add_node("Wine", pos = (4.2, 2) )

G.add_node("Bread", pos = (5.6, 2) )

G.add_node("Ark", pos = (0, 1) )

G.add_node("War", pos = (1, 1) )

G.add_node("Requite", pos = (2, 1) )

G.add_node("Discord", pos = (3, 1) )

G.add_node("Forever", pos = (4, 1) )

G.add_node("God", pos = (5, 1) )

G.add_node("With", pos = (6, 1) )

G.add_node("Tobe", pos = (7, 1) )

G.add_edges_from([ ("Cosmos ", "Yhwh"), ("Cosmos ", "Father"), ("Cosmos ", "Son"), ("Cosmos ", "Holy")])

G.add_edges_from([ ("Quake", "Yhwh"), ("Quake", "Father"), ("Quake", "Son"), ("Quake", "Holy")])

G.add_edges_from([ ("Flood", "Yhwh"), ("Flood", "Father"), ("Flood", "Son"), ("Flood", "Holy")])

G.add_edges_from([ ("Plague", "Yhwh"), ("Plague", "Father"), ("Plague", "Son"), ("Plague", "Holy")])

G.add_edges_from([ ("Weary", "Yhwh"), ("Weary", "Father"), ("Weary", "Son"), ("Weary", "Holy")])

G.add_edges_from([ ("Kyrie", "Yhwh"), ("Kyrie", "Father"), ("Kyrie", "Son"), ("Kyrie", "Holy")])

G.add_edges_from([ ("Eleison", "Yhwh"), ("Eleison", "Father"), ("Eleison", "Son"), ("Eleison", "Holy")])

G.add_edges_from([ ("Christe", "Yhwh"), ("Christe", "Father"), ("Christe", "Son"), ("Christe", "Holy")])

G.add_edges_from([ ("Yhwh", "Q"), ("Yhwh", "A")])

G.add_edges_from([ ("Father", "Q"), ("Father", "A")])

G.add_edges_from([ ("Son", "Q"), ("Son", "A")])

G.add_edges_from([ ("Holy", "Q"), ("Holy", "A")])

G.add_edges_from([ ("Q", "Covenat"), ("Q", "Lamb"), ("Q", "Wine"), ("Q", "Bread")])

G.add_edges_from([ ("A", "Covenat"), ("A", "Lamb"), ("A", "Wine"), ("A", "Bread")])

G.add_edges_from([ ("Covenat", "Ark"), ("Covenat", "War"), ("Covenat", "Requite"), ("Covenat", "Discord")])

G.add_edges_from([ ("Covenat", "Forever"), ("Covenat", "God"), ("Covenat", "With"), ("Covenat", "Tobe")])

G.add_edges_from([ ("Lamb", "Ark"), ("Lamb", "War"), ("Lamb", "Requite"), ("Lamb", "Discord")])

G.add_edges_from([ ("Lamb", "Forever"), ("Lamb", "God"), ("Lamb", "With"), ("Lamb", "Tobe")])

G.add_edges_from([ ("Wine", "Ark"), ("Wine", "War"), ("Wine", "Requite"), ("Wine", "Discord")])

G.add_edges_from([ ("Wine", "Forever"), ("Wine", "God"), ("Wine", "With"), ("Wine", "Tobe")])

G.add_edges_from([ ("Bread", "Ark"), ("Bread", "War"), ("Bread", "Requite"), ("Bread", "Discord")])

G.add_edges_from([ ("Bread", "Forever"), ("Bread", "God"), ("Bread", "With"), ("Bread", "Tobe")])

#G.add_edges_from([("H11", "H21"), ("H11", "H22"), ("H12", "H21"), ("H12", "H22")])

#G.add_edges_from([("H21", "Y"), ("H22", "Y")])

nx.draw(G,

nx.get_node_attributes(G, 'pos'),

with_labels=True,

font_weight='bold',

node_size = 3000,

node_color = "lightblue",

linewidths = 3)

ax= plt.gca()

ax.collections[0].set_edgecolor("#000000")

ax.set_xlim([-.5, 7.5])

ax.set_ylim([.5, 5.5])

plt.show()
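the cell above wires every node in one row to every node in the row below it. a minimal sketch of the same wiring, assuming the five rows are kept as plain lists (the names `layers` and `dense_edges` are hypothetical helpers, not part of networkx):

```python
from itertools import product

# node names copied from the cell above, grouped by row (top to bottom)
layers = [
    ["Cosmos ", "Quake", "Flood", "Plague", "Weary", "Kyrie", "Eleison", "Christe"],
    ["Yhwh", "Father", "Son", "Holy"],
    ["Q", "A"],
    ["Covenat", "Lamb", "Wine", "Bread"],
    ["Ark", "War", "Requite", "Discord", "Forever", "God", "With", "Tobe"],
]


def dense_edges(layers):
    """Connect every node in a row to every node in the next row."""
    edges = []
    for upper, lower in zip(layers, layers[1:]):
        edges.extend(product(upper, lower))
    return edges


edges = dense_edges(layers)
print(len(edges))
```

passing `edges` to a single `G.add_edges_from(edges)` call should give the same graph as the long run of calls, and swapping the middle row (for example "Q" and "A") only touches one list.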

452. catechism#

what does it take to produce a table, figure, or abstract like this one?

output of choice (stata monitor, logfile, excel, word, figure, markdown, html, online)

text (abstract, manuscript, website, catalog)

macros (summaries, estimates, formats, names, embedding)

command to produce output (summary, count, regress)

so, today we are going to focus on this aspect!

stata monitor

logfile

excel

word

figure

markdown

html

online

later we’ll develop this idea till \(\cdots\)

peer-review ready

beta-testing

etc

453. buzzwords#

thanks to the neuroengine in apple silicon, we now …

api

454. visionpro#

augmented reality

digital world

physical space

computer

+ 3d interface: visionpro

+ 2d interface: mac, iphone

output

look through it (a first in our products)

input

eyes

voice

hands

use your apps

immersively

on infinite canvas

no longer limited by display

make apps any size you want

revolutions/input

mac -> personal computing/mouse

iphones -> mobile computing/touch-multi

visionpro -> spatial computing/eyes, hands, voice


import networkx as nx

import matplotlib.pyplot as plt

import numpy as np

import sklearn as skl

#plt.figure(figsize=[2, 2])

G = nx.DiGraph()

G.add_node("Cosmos ", pos = (0, 5) )

G.add_node("Quake", pos = (1, 5) )

G.add_node("Flood", pos = (2, 5) )

G.add_node("Plague", pos = (3, 5) )

G.add_node("Weary", pos = (4, 5) )

G.add_node("Kyrie", pos = (5, 5) )

G.add_node("Eleison", pos = (6, 5) )

G.add_node("Christe", pos = (7, 5) )

G.add_node("Yhwh", pos = (1.4, 4) )

G.add_node("Father", pos = (2.8, 4) )

G.add_node("Son", pos = (4.2, 4) )

G.add_node("Holy", pos = (5.6, 4) )

G.add_node("Real", pos = (2.1, 3) )

G.add_node("Virtual", pos = (4.9, 3) )

G.add_node("Covenat", pos = (1.4, 2) )

G.add_node("Lamb", pos = (2.8, 2) )

G.add_node("Wine", pos = (4.2, 2) )

G.add_node("Bread", pos = (5.6, 2) )

G.add_node("Ark", pos = (0, 1) )

G.add_node("War", pos = (1, 1) )

G.add_node("Requite", pos = (2, 1) )

G.add_node("Discord", pos = (3, 1) )

G.add_node("Forever", pos = (4, 1) )

G.add_node("God", pos = (5, 1) )

G.add_node("With", pos = (6, 1) )

G.add_node("Tobe", pos = (7, 1) )

G.add_edges_from([ ("Cosmos ", "Yhwh"), ("Cosmos ", "Father"), ("Cosmos ", "Son"), ("Cosmos ", "Holy")])

G.add_edges_from([ ("Quake", "Yhwh"), ("Quake", "Father"), ("Quake", "Son"), ("Quake", "Holy")])

G.add_edges_from([ ("Flood", "Yhwh"), ("Flood", "Father"), ("Flood", "Son"), ("Flood", "Holy")])

G.add_edges_from([ ("Plague", "Yhwh"), ("Plague", "Father"), ("Plague", "Son"), ("Plague", "Holy")])

G.add_edges_from([ ("Weary", "Yhwh"), ("Weary", "Father"), ("Weary", "Son"), ("Weary", "Holy")])

G.add_edges_from([ ("Kyrie", "Yhwh"), ("Kyrie", "Father"), ("Kyrie", "Son"), ("Kyrie", "Holy")])

G.add_edges_from([ ("Eleison", "Yhwh"), ("Eleison", "Father"), ("Eleison", "Son"), ("Eleison", "Holy")])

G.add_edges_from([ ("Christe", "Yhwh"), ("Christe", "Father"), ("Christe", "Son"), ("Christe", "Holy")])

G.add_edges_from([ ("Yhwh", "Real"), ("Yhwh", "Virtual")])

G.add_edges_from([ ("Father", "Real"), ("Father", "Virtual")])

G.add_edges_from([ ("Son", "Real"), ("Son", "Virtual")])

G.add_edges_from([ ("Holy", "Real"), ("Holy", "Virtual")])

G.add_edges_from([ ("Real", "Covenat"), ("Real", "Lamb"), ("Real", "Wine"), ("Real", "Bread")])

G.add_edges_from([ ("Virtual", "Covenat"), ("Virtual", "Lamb"), ("Virtual", "Wine"), ("Virtual", "Bread")])

G.add_edges_from([ ("Covenat", "Ark"), ("Covenat", "War"), ("Covenat", "Requite"), ("Covenat", "Discord")])

G.add_edges_from([ ("Covenat", "Forever"), ("Covenat", "God"), ("Covenat", "With"), ("Covenat", "Tobe")])

G.add_edges_from([ ("Lamb", "Ark"), ("Lamb", "War"), ("Lamb", "Requite"), ("Lamb", "Discord")])

G.add_edges_from([ ("Lamb", "Forever"), ("Lamb", "God"), ("Lamb", "With"), ("Lamb", "Tobe")])

G.add_edges_from([ ("Wine", "Ark"), ("Wine", "War"), ("Wine", "Requite"), ("Wine", "Discord")])

G.add_edges_from([ ("Wine", "Forever"), ("Wine", "God"), ("Wine", "With"), ("Wine", "Tobe")])

G.add_edges_from([ ("Bread", "Ark"), ("Bread", "War"), ("Bread", "Requite"), ("Bread", "Discord")])

G.add_edges_from([ ("Bread", "Forever"), ("Bread", "God"), ("Bread", "With"), ("Bread", "Tobe")])

#G.add_edges_from([("H11", "H21"), ("H11", "H22"), ("H12", "H21"), ("H12", "H22")])

#G.add_edges_from([("H21", "Y"), ("H22", "Y")])

nx.draw(G,

nx.get_node_attributes(G, 'pos'),

with_labels=True,

font_weight='bold',

node_size = 3000,

node_color = "lightblue",

linewidths = 3)

ax= plt.gca()

ax.collections[0].set_edgecolor("#000000")

ax.set_xlim([-.5, 7.5])

ax.set_ylim([.5, 5.5])

plt.show()

455. minority report#

has arrived

3d camera

with audio

one relives memories

456. visionOS#

creates authentic representation of whole body & gestures

neural networks; no video camera looking at you

using most advanced machine learning

encoder-decoder neural network trained on lots of data

your persona has video and depth for developers

design of car

teaching 3d human anatomy

everyday productivity: eg microsoft apps

optic id distinguishes identical twins using iris signature

patents: 5000; thus, most advanced mobile device ever created by apple

starts at $3499 next year in the us; will rollout to other countries

457. quan#

and for my soldiers that pass’d over, no longer living, that couldn’t run whenever the reaper came to get them

can we please pour out some liquor symbolizing \(\cdots\)

06/10/2023#

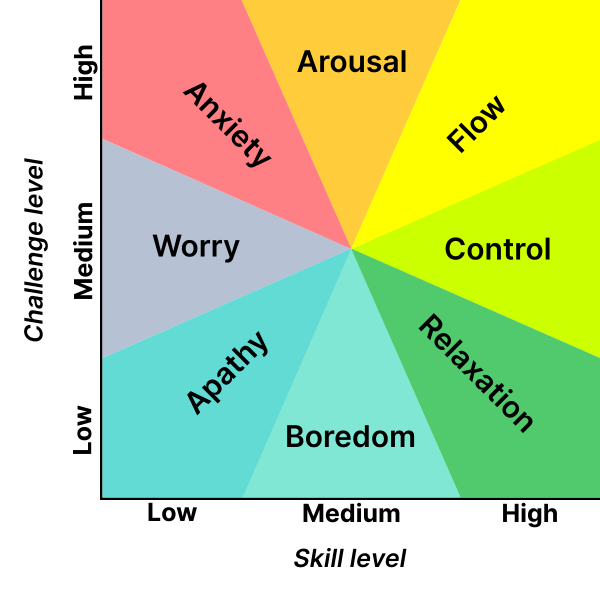

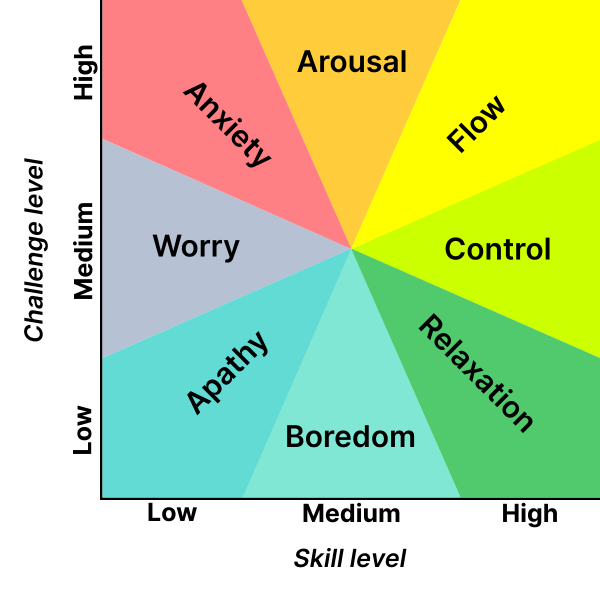

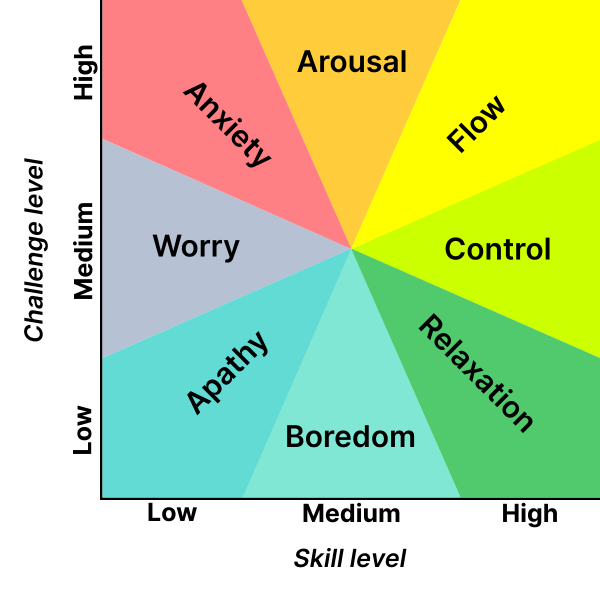

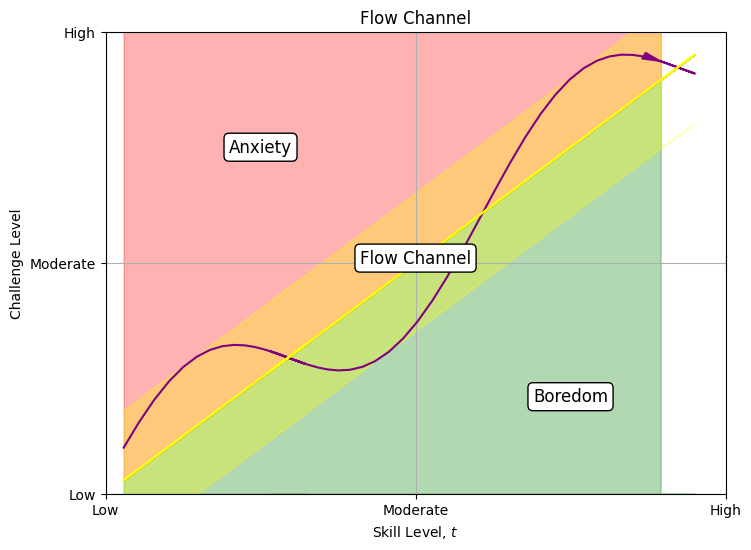

458. flow#

four quadrants

two factors

challenge

skill

discordance

indifference, apathy, learned helplessness

apathy (low skill, low challenge)

anxiety (low skill, high challenge)

boredom (high skill, low challenge)

flow (high skill, high challenge)

of course this prescription from positive psychology is tidy but only applies to a kind environment where factors can be kept under control.

what truly counts in a wicked environment is hardness, that staying strength that allows one to learn anew without getting overwhelmed.

many attribute this to adaptability; however, hardness is the necessary means by which one might flourish in a wicked, unpredictable environment. adaptability is the consequence.
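the four quadrants listed above can be sketched as a tiny lookup. this assumes skill and challenge are each scored between 0 and 1 and that 0.5 separates low from high; the function name `flow_state`, the scale, and the cutoff are all assumptions for illustration, not part of the flow model itself.

```python
def flow_state(skill, challenge, cutoff=0.5):
    """Map skill and challenge scores in [0, 1] to one of the four quadrants."""
    high_skill = skill >= cutoff
    high_challenge = challenge >= cutoff
    if high_skill and high_challenge:
        return "flow"
    if high_skill:
        return "boredom"  # high skill, low challenge
    if high_challenge:
        return "anxiety"  # low skill, high challenge
    return "apathy"       # low skill, low challenge


# hypothetical scores, purely for illustration
for skill, challenge in [(0.9, 0.9), (0.9, 0.1), (0.1, 0.9), (0.1, 0.1)]:
    print(skill, challenge, flow_state(skill, challenge))
```

a fixed cutoff is exactly the kind of tidiness that only holds in a kind environment; in a wicked one both scores drift and the quadrant boundaries are not known in advance.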

459. children#

why do children really love me? [28]

does it explain why adults find me intolerable?

let’s attempt to decode this:

i’ve been told by nephews and nieces that i always treat them like adults and they like it

meanwhile adults find me unprofessional, lacking in empathy, mocking, disrespectful, proud, of low emotional intelligence, too critical, generally unpleasant

now let’s explore these terms using csikszentmihalyi’s flow model: apathy, boredom, relaxation, control, flow, arousal, anxiety, and worry

children experience arousal in my company as contrasted with boredom in their typical environments (with their parents, other adults and fellow children); so i present them with high challenge levels, which their medium skill-levels can cope with

adults including my parents, siblings, friends, girl friends, workmates, colleagues, mentors, trainees, and graduate students (never my undergrad students!) have in various ways described how i disrupt the flow they typically achieve with other colleagues or instructors and instead precipitate anxiety and worry. none of these adults has ever called me incompetent, but rather they’ve used terms such as “no comment” (a parent), hard to work with (colleagues), mockery (students), this should be an advanced class (students), didn’t equip us with the skills for the homework, took way more time than deserving for a 2cr class, disorganized/wicked (student)

so it looks like i indiscriminately present the highest level of challenges for both children and adults. but because children are stronger, more resilient, and often bored by adults, they find me refreshing. and because adults are weary, exhausted by life, perhaps also by their own children (infants or teenage), they could do with something a little more relaxing than yours truly!

460. cognitive#

dissonance resolved by the elaborate schema outlined above!

now i can understand why some adults embrace the challenges i’ve presented them while others have been upset

think of those very enthusiastic ta’s who wish to work with me even without pay

461. distortion#

play it, once, sam

play it, sam

play ‘as time goes by’

sing it, sam

462. art#

pep guardiola has spent £1.075 billion to bring a #UCL title & treble to man city

and it’s in similar opulence that handel, bach, mozart, ludwig, and chopin were possible

the same can be said of michelangelo & raphael, whose achievements trump those of any known painter & sculptor

463. casablanca#

464. superwoman#

why was a nine-year old boy so profoundly moved by this song? 4:20/29.42

thirty-four years later this boy is justified: seems like the song was a turning point in the career of his hero, the songwriter

insofar as it is a heart-rending depiction of the mind of a young black woman, it remains a bit of a puzzle:

a man wrote it

a boy grew possessed by it

and that’s how the spirit of music took over him

the rest is literally history

465. gospel#

miracle by marvin sapp

written by jonathan dunn

produced by kevin bond

revolution in gospel harmony

arpeggios that introduce song

466. university#

gospel university

online music academy

check it out on youtube

467. bonded#

kevin bond’s music

ultra-clean

innovative

sophisticated piano

hawkins music umbrella afforded him the opportunity to hone his skills in a privileged \(\cdots\) yet challenging environment. that environment served as a major foundation for his ultimate musical destiny!

flourish

flow

follow the leading of the wind

468. self-criticism#


import networkx as nx

import matplotlib.pyplot as plt

import numpy as np

import sklearn as skl

#plt.figure(figsize=[2, 2])

G = nx.DiGraph()

G.add_node("Cosmos ", pos = (0, 5) )

G.add_node("Quake", pos = (1, 5) )

G.add_node("Flood", pos = (2, 5) )

G.add_node("Plague", pos = (3, 5) )

G.add_node("Weary", pos = (4, 5) )

G.add_node("Kyrie", pos = (5, 5) )

G.add_node("Eleison", pos = (6, 5) )

G.add_node("Christe", pos = (7, 5) )

G.add_node("Yhwh", pos = (1.4, 4) )

G.add_node("Father", pos = (2.8, 4) )

G.add_node("Son", pos = (4.2, 4) )

G.add_node("Holy", pos = (5.6, 4) )

G.add_node("Physical", pos = (2.1, 3) )

G.add_node("Metaphys", pos = (4.9, 3) )

G.add_node("Covenat", pos = (1.4, 2) )

G.add_node("Lamb", pos = (2.8, 2) )

G.add_node("Wine", pos = (4.2, 2) )

G.add_node("Bread", pos = (5.6, 2) )

G.add_node("Ark", pos = (0, 1) )

G.add_node("War", pos = (1, 1) )

G.add_node("Requite", pos = (2, 1) )

G.add_node("Discord", pos = (3, 1) )

G.add_node("Forever", pos = (4, 1) )

G.add_node("God", pos = (5, 1) )

G.add_node("With", pos = (6, 1) )

G.add_node("Tobe", pos = (7, 1) )

G.add_edges_from([ ("Cosmos ", "Yhwh"), ("Cosmos ", "Father"), ("Cosmos ", "Son"), ("Cosmos ", "Holy")])

G.add_edges_from([ ("Quake", "Yhwh"), ("Quake", "Father"), ("Quake", "Son"), ("Quake", "Holy")])

G.add_edges_from([ ("Flood", "Yhwh"), ("Flood", "Father"), ("Flood", "Son"), ("Flood", "Holy")])

G.add_edges_from([ ("Plague", "Yhwh"), ("Plague", "Father"), ("Plague", "Son"), ("Plague", "Holy")])

G.add_edges_from([ ("Weary", "Yhwh"), ("Weary", "Father"), ("Weary", "Son"), ("Weary", "Holy")])

G.add_edges_from([ ("Kyrie", "Yhwh"), ("Kyrie", "Father"), ("Kyrie", "Son"), ("Kyrie", "Holy")])

G.add_edges_from([ ("Eleison", "Yhwh"), ("Eleison", "Father"), ("Eleison", "Son"), ("Eleison", "Holy")])

G.add_edges_from([ ("Christe", "Yhwh"), ("Christe", "Father"), ("Christe", "Son"), ("Christe", "Holy")])

G.add_edges_from([ ("Yhwh", "Physical"), ("Yhwh", "Metaphys")])

G.add_edges_from([ ("Father", "Physical"), ("Father", "Metaphys")])

G.add_edges_from([ ("Son", "Physical"), ("Son", "Metaphys")])

G.add_edges_from([ ("Holy", "Physical"), ("Holy", "Metaphys")])

G.add_edges_from([ ("Physical", "Covenat"), ("Physical", "Lamb"), ("Physical", "Wine"), ("Physical", "Bread")])

G.add_edges_from([ ("Metaphys", "Covenat"), ("Metaphys", "Lamb"), ("Metaphys", "Wine"), ("Metaphys", "Bread")])

G.add_edges_from([ ("Covenat", "Ark"), ("Covenat", "War"), ("Covenat", "Requite"), ("Covenat", "Discord")])

G.add_edges_from([ ("Covenat", "Forever"), ("Covenat", "God"), ("Covenat", "With"), ("Covenat", "Tobe")])

G.add_edges_from([ ("Lamb", "Ark"), ("Lamb", "War"), ("Lamb", "Requite"), ("Lamb", "Discord")])

G.add_edges_from([ ("Lamb", "Forever"), ("Lamb", "God"), ("Lamb", "With"), ("Lamb", "Tobe")])

G.add_edges_from([ ("Wine", "Ark"), ("Wine", "War"), ("Wine", "Requite"), ("Wine", "Discord")])

G.add_edges_from([ ("Wine", "Forever"), ("Wine", "God"), ("Wine", "With"), ("Wine", "Tobe")])

G.add_edges_from([ ("Bread", "Ark"), ("Bread", "War"), ("Bread", "Requite"), ("Bread", "Discord")])

G.add_edges_from([ ("Bread", "Forever"), ("Bread", "God"), ("Bread", "With"), ("Bread", "Tobe")])

#G.add_edges_from([("H11", "H21"), ("H11", "H22"), ("H12", "H21"), ("H12", "H22")])

#G.add_edges_from([("H21", "Y"), ("H22", "Y")])

nx.draw(G,

nx.get_node_attributes(G, 'pos'),

with_labels=True,

font_weight='bold',

node_size = 3000,

node_color = "lightblue",

linewidths = 3)

ax= plt.gca()

ax.collections[0].set_edgecolor("#000000")

ax.set_xlim([-.5, 7.5])

ax.set_ylim([.5, 5.5])

plt.show()

Come unto me, all ye that labour and are heavy laden, and I will give you rest

– Matthew 11:28

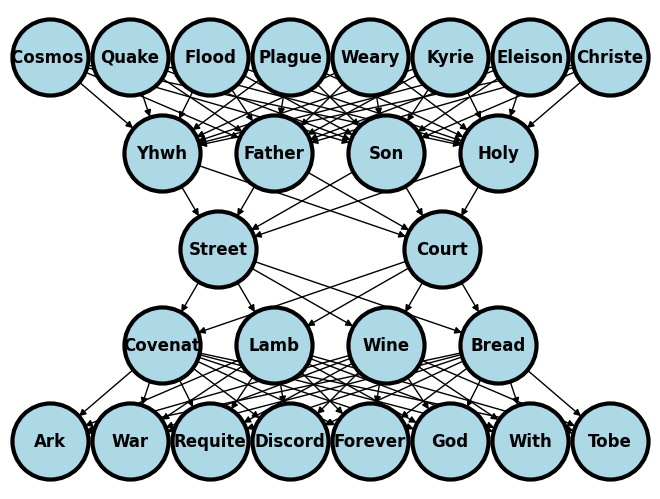

467. babyface#

babyface

mmmhh

468. 2pac#

street 6:28/12:27

court

forgive?

i was like let’s have a charity match and give it to the kids.

and they were like no, we want him to do jail-time (deny the kids an opportunity, because… vindictive). that’s what they told the judge.

sort of echoes a trump vs. letterman exchange


import networkx as nx

import matplotlib.pyplot as plt

import numpy as np

import sklearn as skl

#plt.figure(figsize=[2, 2])

G = nx.DiGraph()

G.add_node("Cosmos ", pos = (0, 5) )

G.add_node("Quake", pos = (1, 5) )

G.add_node("Flood", pos = (2, 5) )

G.add_node("Plague", pos = (3, 5) )

G.add_node("Weary", pos = (4, 5) )

G.add_node("Kyrie", pos = (5, 5) )

G.add_node("Eleison", pos = (6, 5) )

G.add_node("Christe", pos = (7, 5) )

G.add_node("Yhwh", pos = (1.4, 4) )

G.add_node("Father", pos = (2.8, 4) )

G.add_node("Son", pos = (4.2, 4) )

G.add_node("Holy", pos = (5.6, 4) )

G.add_node("Street", pos = (2.1, 3) )

G.add_node("Court", pos = (4.9, 3) )

G.add_node("Covenat", pos = (1.4, 2) )

G.add_node("Lamb", pos = (2.8, 2) )

G.add_node("Wine", pos = (4.2, 2) )

G.add_node("Bread", pos = (5.6, 2) )

G.add_node("Ark", pos = (0, 1) )

G.add_node("War", pos = (1, 1) )

G.add_node("Requite", pos = (2, 1) )

G.add_node("Discord", pos = (3, 1) )

G.add_node("Forever", pos = (4, 1) )

G.add_node("God", pos = (5, 1) )

G.add_node("With", pos = (6, 1) )

G.add_node("Tobe", pos = (7, 1) )

G.add_edges_from([ ("Cosmos ", "Yhwh"), ("Cosmos ", "Father"), ("Cosmos ", "Son"), ("Cosmos ", "Holy")])

G.add_edges_from([ ("Quake", "Yhwh"), ("Quake", "Father"), ("Quake", "Son"), ("Quake", "Holy")])

G.add_edges_from([ ("Flood", "Yhwh"), ("Flood", "Father"), ("Flood", "Son"), ("Flood", "Holy")])

G.add_edges_from([ ("Plague", "Yhwh"), ("Plague", "Father"), ("Plague", "Son"), ("Plague", "Holy")])

G.add_edges_from([ ("Weary", "Yhwh"), ("Weary", "Father"), ("Weary", "Son"), ("Weary", "Holy")])

G.add_edges_from([ ("Kyrie", "Yhwh"), ("Kyrie", "Father"), ("Kyrie", "Son"), ("Kyrie", "Holy")])

G.add_edges_from([ ("Eleison", "Yhwh"), ("Eleison", "Father"), ("Eleison", "Son"), ("Eleison", "Holy")])

G.add_edges_from([ ("Christe", "Yhwh"), ("Christe", "Father"), ("Christe", "Son"), ("Christe", "Holy")])

G.add_edges_from([ ("Yhwh", "Street"), ("Yhwh", "Court")])

G.add_edges_from([ ("Father", "Street"), ("Father", "Court")])

G.add_edges_from([ ("Son", "Street"), ("Son", "Court")])

G.add_edges_from([ ("Holy", "Street"), ("Holy", "Court")])

G.add_edges_from([ ("Street", "Covenat"), ("Street", "Lamb"), ("Street", "Wine"), ("Street", "Bread")])

G.add_edges_from([ ("Court", "Covenat"), ("Court", "Lamb"), ("Court", "Wine"), ("Court", "Bread")])

G.add_edges_from([ ("Covenat", "Ark"), ("Covenat", "War"), ("Covenat", "Requite"), ("Covenat", "Discord")])

G.add_edges_from([ ("Covenat", "Forever"), ("Covenat", "God"), ("Covenat", "With"), ("Covenat", "Tobe")])

G.add_edges_from([ ("Lamb", "Ark"), ("Lamb", "War"), ("Lamb", "Requite"), ("Lamb", "Discord")])

G.add_edges_from([ ("Lamb", "Forever"), ("Lamb", "God"), ("Lamb", "With"), ("Lamb", "Tobe")])

G.add_edges_from([ ("Wine", "Ark"), ("Wine", "War"), ("Wine", "Requite"), ("Wine", "Discord")])

G.add_edges_from([ ("Wine", "Forever"), ("Wine", "God"), ("Wine", "With"), ("Wine", "Tobe")])

G.add_edges_from([ ("Bread", "Ark"), ("Bread", "War"), ("Bread", "Requite"), ("Bread", "Discord")])

G.add_edges_from([ ("Bread", "Forever"), ("Bread", "God"), ("Bread", "With"), ("Bread", "Tobe")])

#G.add_edges_from([("H11", "H21"), ("H11", "H22"), ("H12", "H21"), ("H12", "H22")])

#G.add_edges_from([("H21", "Y"), ("H22", "Y")])

nx.draw(G,

nx.get_node_attributes(G, 'pos'),

with_labels=True,

font_weight='bold',

node_size = 3000,

node_color = "lightblue",

linewidths = 3)

ax= plt.gca()

ax.collections[0].set_edgecolor("#000000")

ax.set_xlim([-.5, 7.5])

ax.set_ylim([.5, 5.5])

plt.show()

469. brando#

life/art

we’re all actors

470. bebe&cece#

i’m lost without you

back up singers!

angie, debbie, whitney

471. autoencoder#

dream it

encode/chase it

tame it

reproduce it

472. arsenio#

probably what destroyed his career

this very specific interview

which brings to mind a couple of things:

supervised learning (fellow humans label what is good & bad: genealogy of morality)

unsupervised learning (human-designed algorithm uncovers, clusters, heretofore unbeknownst relationships)

adaptive learning (an algorithm that may keep on shocking its creators, since it goes beyond what was dreamed of by the engineers who wrote it)

473. vanilla#

ice

was

i’d like to give a shout out to my …

474. doppelgänger#

victoria monét

betty ndagijimana

there’s a je ne sais quoi

06/11/2023#

475. adversarial#

networks

challenge vs. skill

to infinity & beyond work-/lifeflow

476. linda#

ko, he said to the woman in labor

her baby was crowning at this point

but he was, gloved, examining another in labor

likewise, he told anxious graduate students:

don’t worry about grades and just focus on learning

where’s the empathy in all of this?

yes, i was trying to assure them that this adversarial network, wherein i’m the challenger, will present low-to-mid level challenges; just enough to nurture their budding skills

but what does that even mean? how is a challenge calibrated? well, the students didn’t buy it and so they wrote scathing reviews - courseeval is a necessary prerequisite for access to their grades

school response rate was 84%, epidemiology 89%, my course 92% (never at any point did i mention course eval). anxious, upset people are quite motivated to write reviews; it’s the negative emotion that drives us and our memories beyond

477. todolistspecial#

get reviews from 2021-2023

respond to each + & - in updates

iterate this summer, overhaul next spring

478. notes#

here are a few highlights. but first, remember that difficult classes do not always produce low student satisfaction. there is evidence that students value a challenge if they feel they have been given the tools to meet that challenge

478.1#

this was 600.71 with 110 taking it for a grade. generally, course and school term mean were about the same for all metrics. response rates were 95% (course), 91% (epi), 88% (school)

strengths

volume of material can’t be taught anywhere else

resources: lecture, do file (well annotated), lab

feedback on hw was extremely important

assignments were challenging but rewarding, leveraged in other classes

weaknesses

not engaging: just commands

concepts not developed

a lot covered in a short time

too much time answering students (should do this in office hours)

students watch videos of coding: then class is problem solving

grading timeliness could be improved

provide answer keys!

discordance between lectures and hws

feels like a 3cr class

boring to teach coding, and ta’s often lacked knowledge

two hours is way too much and dull if command after command

opportunities

change to 3cr

or easier hws

split 2hr: in-class ex

transparency

+ early release of grading rubrics + describe subjective & objective elements of rubric

threats

students who had a bad experience and will strongly advise against this class

it comes in the fourth term; too little, too late?

random

478.2#

this was 700.71 with 10 students all for a grade. response rates were 100%, 89%, 84%

👎

course organization

assessment of learning

spent 10hrs/week vs. 6 for epi/school

👍

expanded knowledge

achieved objectives

478.3#

and the 600.01 class had a relative response rate of 92% (course n=60), 89% (epi n=703), and 84% (school n=8492)

👎

course organization

assessment of learning

primary instructor

👍

achieved objectives

expanded knowledge

improved skills

details

poorly designed website

important items intentionally hidden

lectures felt like a stream of consciousness

mockery of students concerned about grades

no transparency in grading or rubric

chapters with plain titles and objectives

a complaint about 10% off for `if 0 {` conditional described as meaningless annotation in hw1

the emphasis on formatting in grading hw was too much

professor needs remediation on learning theory & professionalism

the class felt very disorganized, but i know this is due to the reorganization of the course. i like the idea of using git hub and think it will be useful once the pages are more organized - maybe having a centralized page with all the necessary links and schedules. the grading was also a little confusing. I think a rubric up front would be so helpful so students know what to prioritize

478.4#

600.79 had 11/18 (61%) response rate compared with 75% (epi n=493) & 76% (school n=2077)

👎

below average for feedback

ta’s

👍

course above school-term mean for:

instructor

organization

assessment of learning

expanded my knowledge

improved my skill

focused & organized instruction & content

skills taught exactly what you need in the real world work place

dofiles, lecture notes, annotation will be great resources even in the future

very focused on high yield topics. great course resources

i think it helps to have a basic statistics background and maybe some basic knowledge of how stata operates (what a do file is, how to import a dataset or use a data set, etc).

i think the most helpful thing about the course was that dr. xxx went over student’s code and their challenges during the class. it helped us know what the fault in our codes was at the spot. i think it helped the whole class. there was also a lot of repetition in class which consolidated the topic.

for course content, it might be helpful to have messy data to work with - in the real world, datasets and everyday functions aren’t as clear cut as those introduced in class.

Feedback on assignments could have been a little faster but there was plenty of flexibility built in to account for this.

479. negative#

is mnemonic

positive less so

how to weigh each!

481. brando#

all the world's a stage…

marlon brando said it best:

we are all actors:

when someone asks how are you?

if you see someone you wish to criticize!

our responses are a mode of acting

because we sort of know at least something about our audience, even when they’re strangers:

skill level of our audience comes to mind

are they motivated to advance their skills?

or tolerate our challenges?

so, we titrate the challenges we bring along in every encounter

from the outset we recognize and offer a hierarchy of challenges based on 1, 2, 3 above

but it's an idea to initially focus on the lowest challenge and show pathway to higher challenges

that is, if our audience are “game”, if they’re a good sport

06/13/2023#

482. sinusitis#

last 15 years

exacerbated in 03/2016

and worst-ever from 2022-2023

these were periods of daily swimming

usually 1-2 miles (close to 2 hours a day)

i’d call it swimmer's sinusitis

sounds like i have a cold to those i speak with on the phone

time to schedule an appointment with ent surgeon

483. stata#

system

native (stata application & support files, e.g. ado files)

third-party (support files, typically ado files)

your future role (as students in this class; i.e., ado files that you’ll write and install)

user

known

you

me

teaching assistants

collaborators

unknown

anticipate (i.e., empathize with different kinds of users)

share code (e.g. on github)

care (i.e., user-friendly code with annotation)

installation

local

MacOSX

Unix

Windows

remote

Desktop

Windows

Cluster

Unix/Terminal

local

-

file

edit

data

graphics

statistics

user

window

command ⌘ 1

results ⌘ 2

history ⌘ 3

variables ⌘ 4

properties ⌘ 5

graph ⌘ 6

viewer ⌘ 7

editor ⌘ 8

do-file ⌘ 9

manager ⌘ 10

help

command

the very first legitimate word you type into the command window or on a line of code in a do file

on my computer it is always rendered blue in color if it's a native Stata command

if developed by a third-party then it is white and may not work if you share your do file with others

your collaborators, ta’s, and instructors must be warned to first install the third-party program

syntax

the arrangement of words after a stata command

create well-formed instructions in stata (i.e., the syntax of Stata)

other terms or synonyms include code, stata code, code snippet

input

menu (outputs command and syntax in results)

do files (script with a series of commands)

ado files (script with a program or series of programs)

output

numeric:

byte

double

long

string

plain text

file paths

urls

embed

console window

log file

excel file

word doc

html doc

publish

self (e.g. github)

journal (e.g. jama)

-

remote

desktop: a few of you may use this, which presents unique challenges for this class

terminal: unlikely that any of you will be using this, since it's for advanced programmers
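
The numeric storage types named under output in the outline above (byte, long, double) differ in how large a value they can hold. The sketch below, written in Python like this notebook's other code cells, picks the narrowest type for a list of values, loosely in the spirit of what Stata's `compress` does. The function name `smallest_stata_type` is hypothetical, `int` is a real Stata type that the outline does not list, and falling back to `double` for anything non-integer is a simplification rather than Stata's actual rule.

```python
# Documented integer ranges of Stata's integer storage types. The upper
# limits sit below the usual 2**n - 1 because Stata reserves the top
# codes of each type for missing values.
STATA_INT_TYPES = [
    ("byte", -127, 100),
    ("int", -32_767, 32_740),
    ("long", -2_147_483_647, 2_147_483_620),
]


def smallest_stata_type(values):
    """Return the narrowest Stata numeric type that can hold every value.

    Hypothetical helper for illustration only; it is not part of Stata.
    """
    values = list(values)
    if not values:
        raise ValueError("need at least one value")
    # Only whole numbers can live in byte/int/long.
    if all(float(v).is_integer() for v in values):
        lowest, highest = min(values), max(values)
        for name, type_min, type_max in STATA_INT_TYPES:
            if type_min <= lowest and highest <= type_max:
                return name
    # Simplification: everything else is stored as double here.
    return "double"


print(smallest_stata_type([54, 79]))   # byte
print(smallest_stata_type([101]))      # int
print(smallest_stata_type([40_000]))   # long
print(smallest_stata_type([2.5]))      # double
```

The second call shows why the ranges matter: 101 is a small number, but it already falls outside byte's upper limit of 100.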

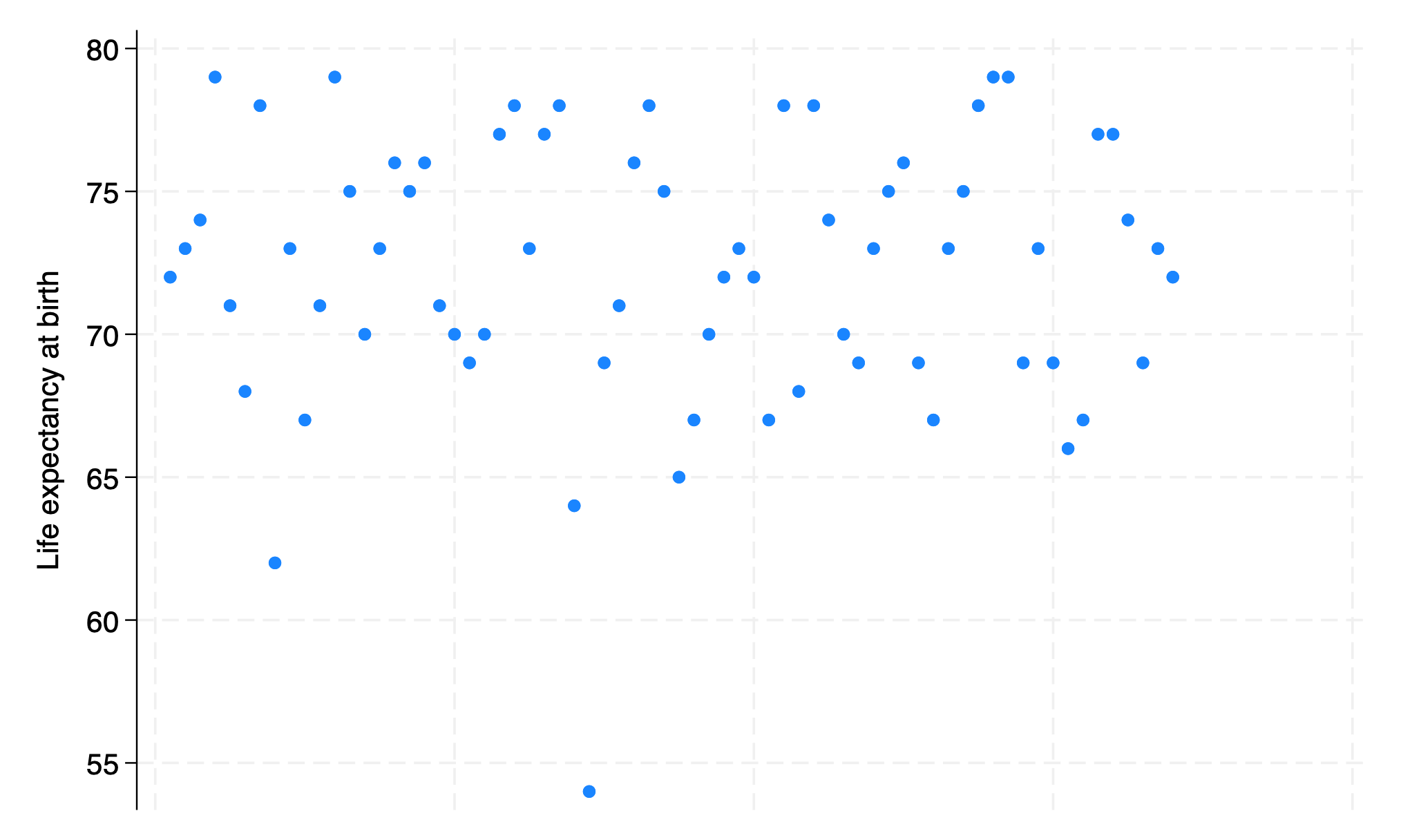

menu

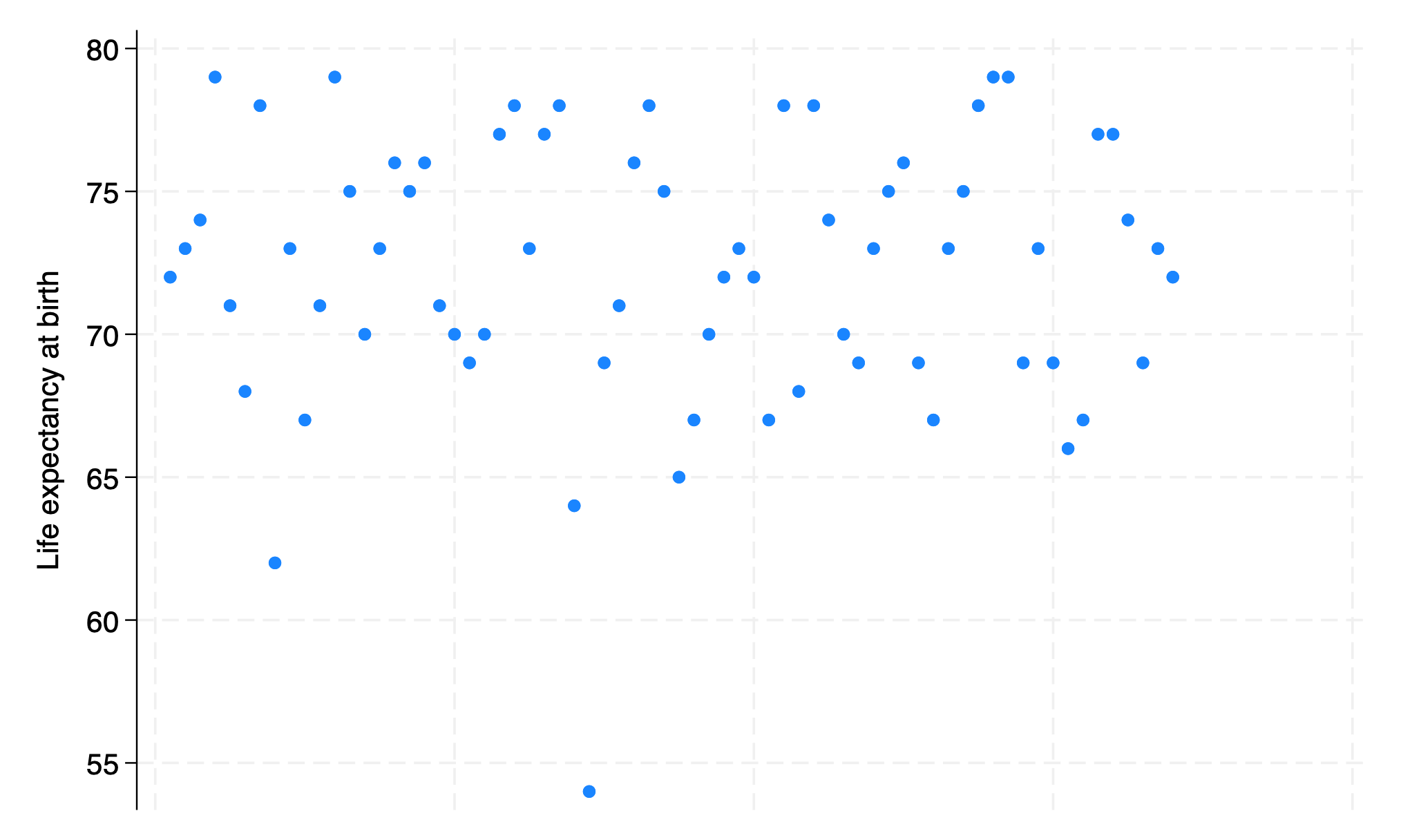

file > example datasets > lifeexp.dta > use

sysuse lifeexp.dta

webuse lifeexp.dta

command

import data into stata

webuse lifeexp, clear

. webuse lifeexp, clear

(Life expectancy, 1998)

.

explore the imported data

display c(N)

display c(k)

describe

. display c(N)

68

. display c(k)

6

. describe

Contains data from https://www.stata-press.com/data/r18/lifeexp.dta

Observations: 68 Life expectancy, 1998

Variables: 6 26 Mar 2022 09:40

(_dta has notes)

Variable Storage Display Value

name type format label Variable label

region byte %16.0g region Region

country str28 %28s Country

popgrowth float %9.0g * Avg. annual % growth

lexp byte %9.0g * Life expectancy at birth

gnppc float %9.0g * GNP per capita

safewater byte %9.0g * Safe water

* indicated variables have notes

Sorted by:

.

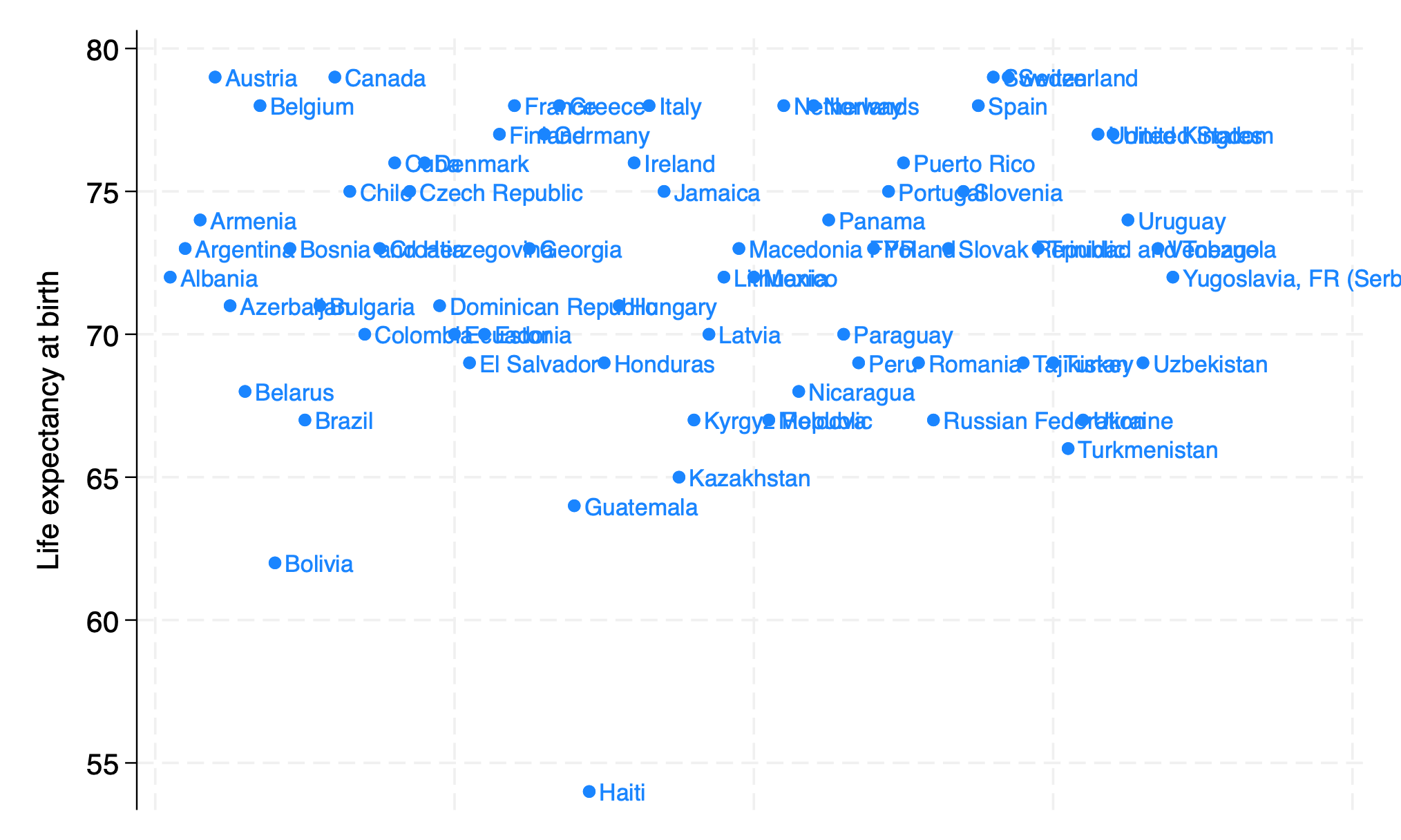

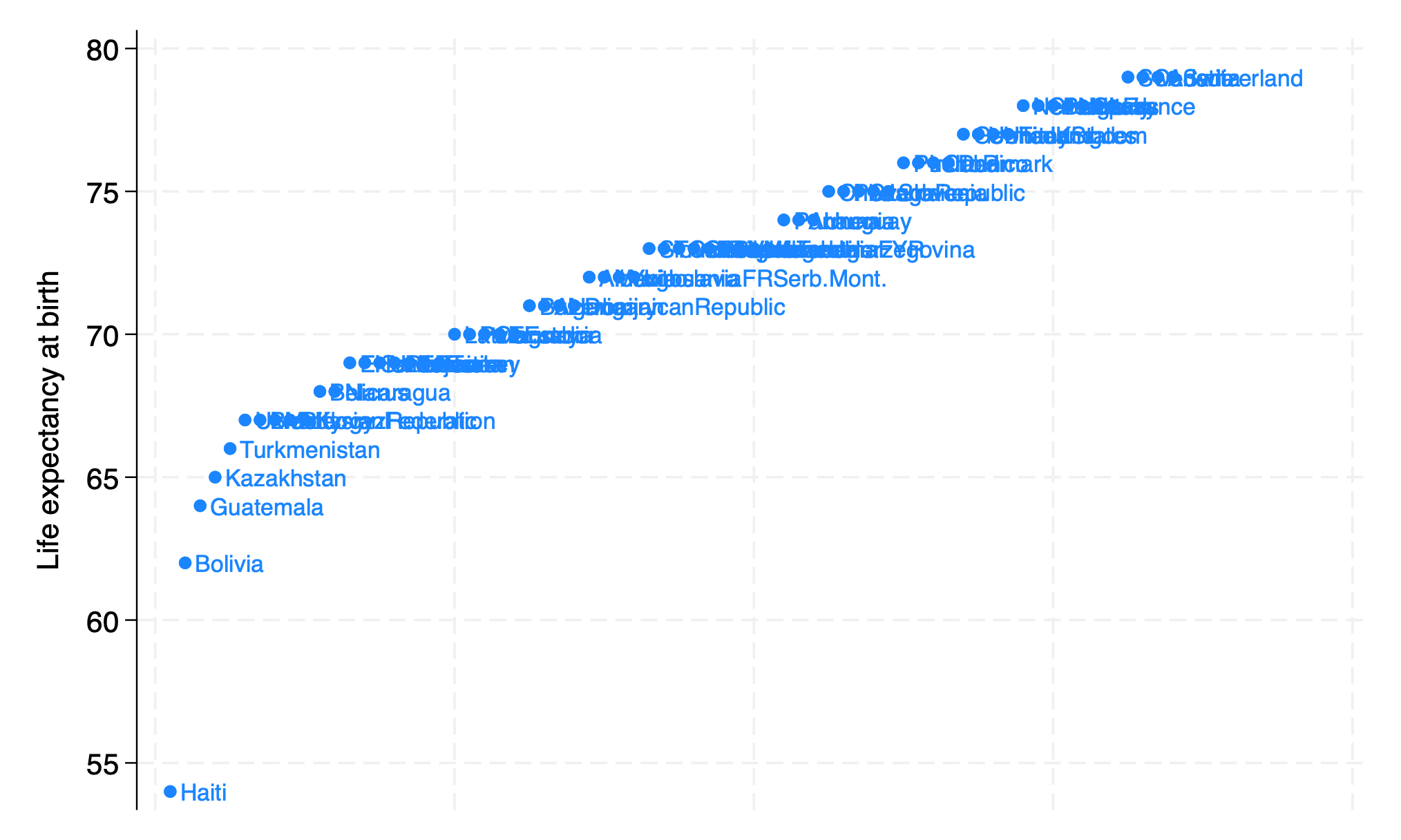

perform basic analysis

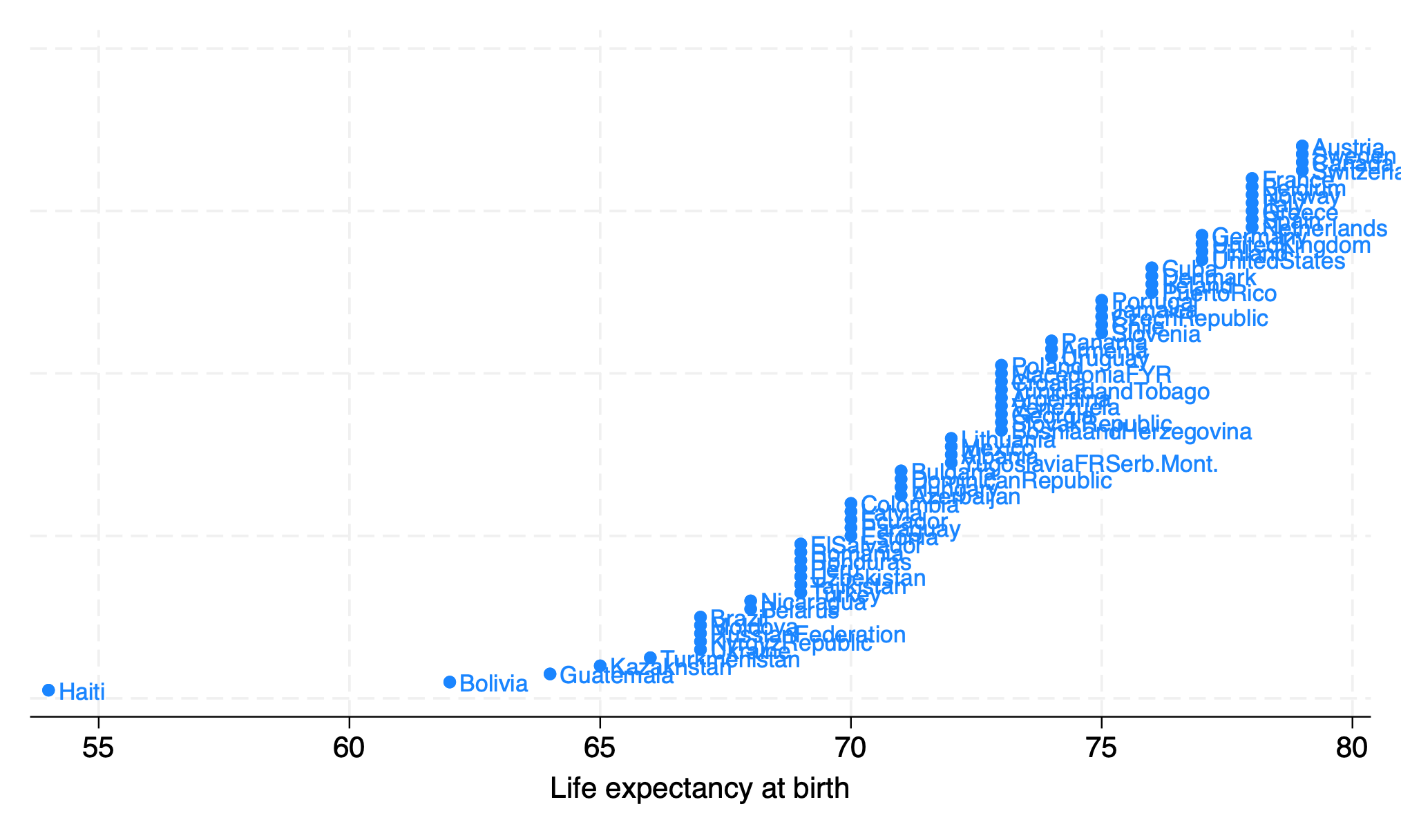

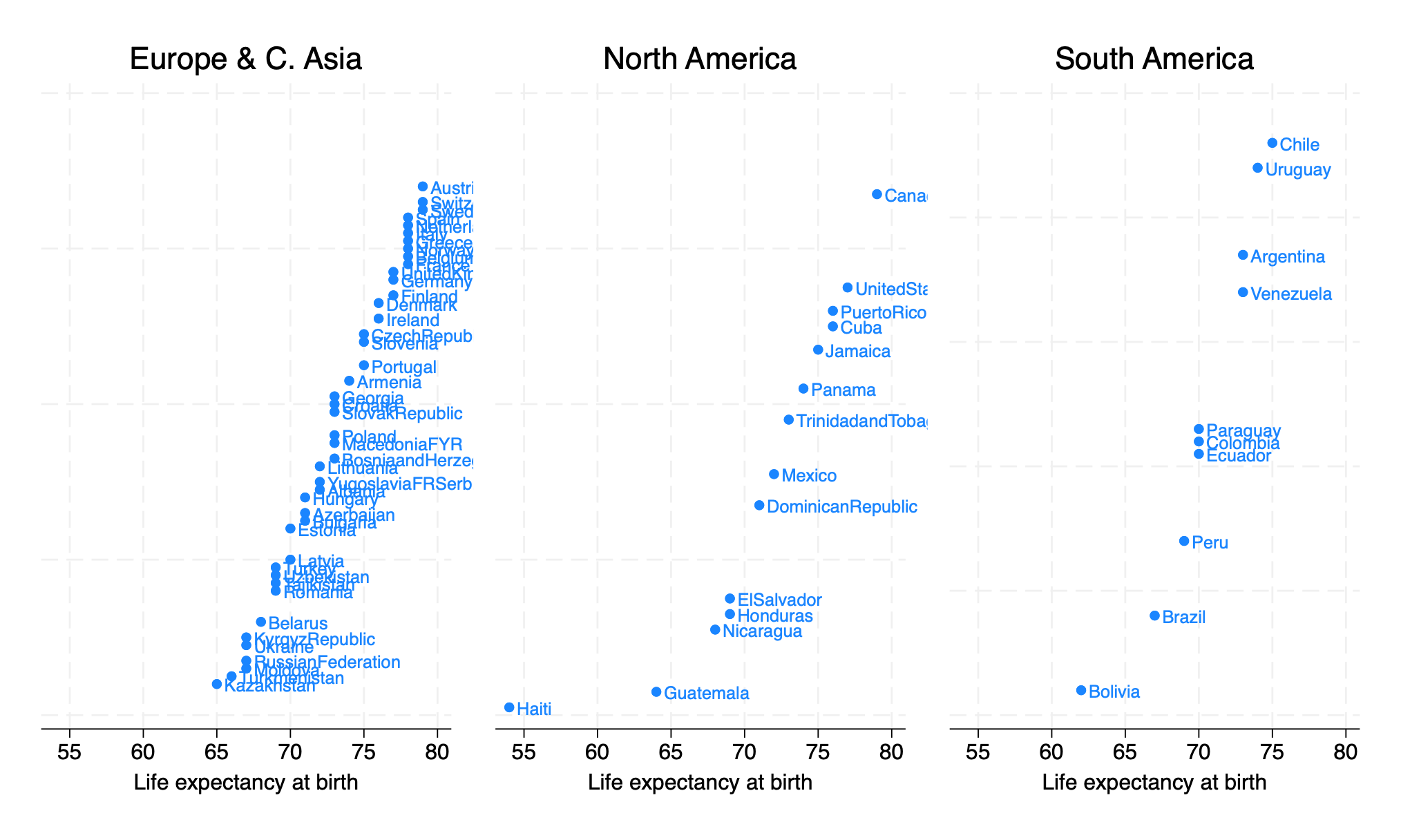

webuse lifeexp, clear

describe

encode country, gen(Country)

twoway scatter lexp Country, xscale(off)

graph export lexp_bycountry.png, replace

. webuse lifeexp, clear

(Life expectancy, 1998)

. describe

Contains data from https://www.stata-press.com/data/r18/lifeexp.dta

Observations: 68 Life expectancy, 1998

Variables: 6 26 Mar 2022 09:40

(_dta has notes)

Variable Storage Display Value

name type format label Variable label

region byte %16.0g region Region

country str28 %28s Country

popgrowth float %9.0g * Avg. annual % growth

lexp byte %9.0g * Life expectancy at birth

gnppc float %9.0g * GNP per capita

safewater byte %9.0g * Safe water

* indicated variables have notes

Sorted by:

. encode country, gen(Country)

. twoway scatter lexp Country, xscale(off)

. graph export lexp_bycountry.png, replace

file /Users/d/Desktop/lexp_bycountry.png saved as PNG format

.

end of do-file

.
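
The `encode country, gen(Country)` step in the do file above turns a string variable into a numeric one with value labels. A minimal Python sketch of that idea follows, assuming (as Stata's documentation describes) that codes start at 1 and follow the alphabetical order of the distinct strings. The function name `encode` and the sample country names are illustrative only and are not taken from the dataset.

```python
def encode(strings):
    """Mimic the idea behind Stata's `encode` (illustrative sketch).

    Each distinct string gets an integer code, starting at 1 and following
    the sorted order of the distinct strings. The returned dict plays the
    role of the value label attached to the new variable.
    """
    labels = {text: code for code, text in enumerate(sorted(set(strings)), start=1)}
    codes = [labels[text] for text in strings]
    return codes, labels


countries = ["Brazil", "Albania", "Canada", "Albania"]  # made-up example values
codes, labels = encode(countries)
print(codes)   # [2, 1, 3, 1]
print(labels)  # {'Albania': 1, 'Brazil': 2, 'Canada': 3}
```

Because the codes are only positions in an alphabetical list, the x-axis of `twoway scatter lexp Country` carries no numeric meaning, which is presumably why the do file hides it with `xscale(off)`.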

do file

importing data

exploring data

analyzing data

outputting results

ado file

basis of stata commands

innate or third-party

we shall be learning to write basic programs (i.e. stata programming)

etc

gradually build these concepts beginning with sys/user:

system-defined constants or c-class commands

creturn list

. creturn list

System values

c(current_date) = "13 Jun 2023"

c(current_time) = "10:43:51"

c(rmsg_time) = 0 (seconds, from set rmsg)

c(stata_version) = 18

c(version) = 18 (version)

c(userversion) = 18 (version)

c(dyndoc_version) = 2 (dyndoc)

c(born_date) = "15 May 2023"

c(edition) = "BE"